Today is the fifth anniversary of the launch of the Amazon Echo, so in a talk I gave yesterday at the Web Summit in Lisbon, I looked at how far Alexa has come and where we’re heading next.

Amazon’s mission is to be the earth’s most customer-centric company. With that mission in mind and the Star Trek computer as an inspiration, on November 6, 2014, a small multidisciplinary team launched Amazon Echo, with the aspiration of revolutionizing daily convenience for our customers using artificial intelligence (AI).

Before Echo ushered in the convenience of voice-enabled ambient computing, customers were used to searching on desktops and mobile phones, where the onus was entirely on them to sift through blue links to find answers to their questions or connect to services. While app stores on phones offered “there’s an app for that” convenience, the cognitive load on customers continued to increase.

Alexa-powered Echo broke these human-machine interaction paradigms, shifting the cognitive load from customers to AI and causing a tectonic shift in how customers interact with a myriad of services, find information on the Web, control smart appliances, and connect with other people.

Enhancements in foundational components of Alexa

In order to be magical at the launch of Echo, Alexa needed to be great at four fundamental AI tasks:

- Wake word detection: On the device, detect the keyword “Alexa” to get the AI’s attention;

- Automatic speech recognition (ASR): Upon detecting the wake word, convert audio streamed to the Amazon Web Services (AWS) cloud into words;

- Natural-language understanding (NLU): Extract the meaning of the recognized words so that Alexa can take the appropriate action in response to the customer’s request; and

- Text-to-speech synthesis (TTS): Convert Alexa’s textual response to the customer’s request into spoken audio.

Over the past five years, we have continued to advance each of these foundational components. In both wake word detection and ASR, we’ve seen fourfold reductions in recognition errors. In NLU, the error reduction has been threefold, even though the range of utterances that NLU processes and the range of actions Alexa can take have both increased dramatically. And in listener studies that use the MUSHRA (MUltiple Stimuli with Hidden Reference and Anchor) audio perception methodology, we’ve seen an 80% reduction in the naturalness gap between Alexa’s speech and human speech.
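Read in the usual sense, a "fourfold" reduction means an error rate fell to a quarter of its earlier value, and the MUSHRA figure describes the gap between listener scores for human and synthetic speech, not the scores themselves. Writing $e_0, e_1$ for an error rate before and after, and $g_0, g_1$ for the naturalness gap (human minus Alexa MUSHRA score), the claims translate as follows (the symbols are introduced here for illustration only):

$$
\frac{e_0 - e_1}{e_0} = 1 - \frac{1}{4} = 75\% \ \text{(wake word, ASR)}, \qquad
1 - \frac{1}{3} \approx 67\% \ \text{(NLU)}, \qquad
g_1 = (1 - 0.8)\, g_0 = 0.2\, g_0 .
$$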

Our overarching strategy for Alexa’s AI has been to combine machine learning (ML) — in particular, deep learning — with the large-scale data and computational resources available through AWS. But these performance improvements are the result of research on a variety of specific topics that extend deep learning, including

- semi-supervised learning, or using a combination of unlabeled and labeled data to improve the ML system;

- active learning, or the learning strategy where the ML system selects more-informative samples to receive manual labels;

- large-scale distributed training, or parallelizing ML-based model training for efficient learning on a large corpus; and

- context-aware modeling, or using a wide variety of information — including the type of device where a request originates, skills the customer uses or has enabled, and past requests — to improve accuracy.
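Of these, active learning is the easiest to illustrate in a few lines. The sketch below assumes uncertainty sampling, one common selection criterion (the post does not say which criterion Alexa uses): each utterance's predicted intent distribution is scored by its entropy, and the utterances the model is least sure about go to human annotators first. The utterances, probabilities, and the name `select_for_labeling` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Uncertainty sampling: return the `budget` utterances whose predicted
    intent distribution has the highest entropy, i.e. the ones the current
    model is least sure about."""
    ranked = sorted(predictions, key=lambda u: entropy(predictions[u]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs: probabilities over three intents per utterance.
preds = {
    "play jazz": [0.97, 0.02, 0.01],
    "turn it up": [0.40, 0.35, 0.25],
    "what's on": [0.55, 0.44, 0.01],
}
print(select_for_labeling(preds, budget=2))
```

Here the confident "play jazz" prediction is skipped, and "turn it up" (entropy about 1.08 nats) is sent for labeling ahead of "what's on" (about 0.74 nats).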

For more coverage of the anniversary of the Echo's launch, see "Alexa, happy birthday" on Amazon's Day One blog.

Customer impact

From Echo’s launch in November 2014 to now, we have gone from zero customer interactions with Alexa to billions per week. Customers now interact with Alexa in 15 language variants and more than 80 countries.

Through the Alexa Voice Service and the Alexa Skills Kit, we have democratized conversational AI. These self-serve APIs and toolkits let developers integrate Alexa into their devices and create custom skills. Alexa is now available on hundreds of different device types. There are more than 85,000 smart-home products that can be controlled with Alexa, from more than 9,500 unique brands, and third-party developers have built more than 100,000 custom skills.

Ongoing research in conversational AI

Alexa’s success doesn’t mean that conversational AI is a solved problem. On the contrary, we’ve just scratched the surface of what’s possible. We’re working hard to make Alexa …

1. More self-learning

Our scientists and engineers are making Alexa smarter faster by reducing reliance on supervised learning (i.e., building ML models on manually labeled data). A few months back, we announced that we’d trained a speech recognition system on a million hours of unlabeled speech using the teacher-student paradigm of deep learning. This technology is now in production for UK English, where it has improved the accuracy of Alexa’s speech recognizers, and we’re working to apply it to all language variants.
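The post doesn't spell out the training recipe, but the core of the teacher-student paradigm fits in a few lines: a teacher model produces soft output distributions for unlabeled examples, and a student is trained to match them. Below is a generic sketch of that objective (cross-entropy against temperature-softened teacher targets, as in standard knowledge distillation); the temperature of 2.0 and the logits are illustrative assumptions, not Alexa's settings.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax; a higher temperature yields softer targets."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: Sequence[float],
                      student_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """Cross-entropy of the student's distribution against the teacher's soft
    targets. No human label is involved: the teacher's output on an unlabeled
    example serves as the training target."""
    targets = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(targets, student))


teacher = [2.0, 0.5, -1.0]  # hypothetical teacher logits for one unlabeled input
close = [1.9, 0.6, -1.1]    # a student that roughly agrees with the teacher
far = [-1.0, 0.5, 2.0]      # a student that disagrees
print(distillation_loss(teacher, close) < distillation_loss(teacher, far))
```

The loss is smallest when the student reproduces the teacher's distribution exactly, which is what lets the student be trained on unlabeled audio at a scale where manual transcription would be impractical.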

This year, we introduced a new self-learning paradigm that enables Alexa to automatically correct ASR and NLU errors without any human annotator in the loop. In this novel approach, we use ML to detect potentially unsatisfactory interactions with Alexa through signals such as the customer’s barging in on (i.e., interrupting) Alexa. Then, a graphical model trained on customers’ paraphrases of their requests automatically revises failing requests into semantically equivalent forms that work.

For example, “play Sirius XM Chill” used to fail, but from customer rephrasing, Alexa has learned that “play Sirius XM Chill” is equivalent to “play Sirius Channel 53” and automatically corrects the failing variant.
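The production system uses a graphical model trained on customers' paraphrases. As a deliberately simpler stand-in (frequency counting, not that model), the sketch below shows the basic idea of mining rewrites from customer rephrasings. The logged pairs, the lowercase normalization, and the `min_count`/`min_share` thresholds are illustrative assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def learn_rewrites(rephrase_pairs: Iterable[Tuple[str, str]],
                   min_count: int = 3, min_share: float = 0.6) -> Dict[str, str]:
    """Map each failing request to its most common successful rephrase, but only
    if enough customers independently made that same correction."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for failed, succeeded in rephrase_pairs:
        counts[failed][succeeded] += 1
    table = {}
    for failed, successes in counts.items():
        best, n = successes.most_common(1)[0]
        if n >= min_count and n / sum(successes.values()) >= min_share:
            table[failed] = best
    return table


def rewrite(utterance: str, table: Dict[str, str]) -> str:
    """Swap a known-failing request for its learned equivalent; otherwise pass it through."""
    return table.get(utterance, utterance)


# Hypothetical (failed request, successful rephrase) pairs mined from interaction logs.
pairs = [("play sirius xm chill", "play sirius channel 53")] * 4 + [
    ("play sirius xm chill", "play chill music")
]
table = learn_rewrites(pairs)
print(rewrite("play sirius xm chill", table))  # play sirius channel 53
```

The thresholds guard against learning a rewrite from a single customer's idiosyncratic rephrase: given only two matching pairs, `learn_rewrites` returns an empty table.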

Using this implicit learning technique and occasional explicit feedback from customers — e.g., “did you want/mean … ?” — Alexa is now self-correcting millions of defects per week.

2. More natural

In 2015, when the first third-party skills began to appear, customers had to invoke them by name — e.g., “Alexa, ask Lyft to get me a ride to the airport.” However, with tens of thousands of custom skills, it can be difficult to discover skills by voice and remember their names. This is a unique challenge that Alexa faces.

To address this challenge, we have been exploring deep-learning-based name-free skill interaction to make skill discovery and invocation seamless. For several thousand skills, customers can simply issue a request, such as “Alexa, get me a ride to the airport,” and Alexa uses information about the customer’s context and interaction history to decide which skill to invoke.
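The post describes this capability only as deep-learning-based. The toy sketch below shows the shape of the decision rather than the model: each candidate skill gets a score combining request relevance with customer context (whether the skill is enabled, how often it has been used), and a softmax turns the scores into probabilities. The features, hand-set weights, and skill names are all hypothetical; a learned model would replace the linear score.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SkillCandidate:
    name: str
    relevance: float  # how well the request matches the skill, e.g. a classifier score in [0, 1]
    enabled: bool     # has this customer enabled the skill?
    past_uses: int    # how often this customer has used the skill before


# Hypothetical, hand-set weights for the illustrative linear score.
W_RELEVANCE, W_ENABLED, W_HISTORY = 3.0, 1.0, 0.5


def score(c: SkillCandidate) -> float:
    return (W_RELEVANCE * c.relevance
            + W_ENABLED * float(c.enabled)
            + W_HISTORY * math.log1p(c.past_uses))


def rank_skills(candidates: List[SkillCandidate]) -> List[Tuple[str, float]]:
    """Turn candidate scores into probabilities with a softmax, best first."""
    scores = [score(c) for c in candidates]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    probs = [(c.name, e / z) for c, e in zip(candidates, exps)]
    return sorted(probs, key=lambda pair: pair[1], reverse=True)


# "Alexa, get me a ride to the airport": two equally relevant, hypothetical skills.
ranking = rank_skills([
    SkillCandidate("RideSkillA", relevance=0.8, enabled=True, past_uses=10),
    SkillCandidate("RideSkillB", relevance=0.8, enabled=False, past_uses=0),
])
print(ranking[0][0])  # RideSkillA
```

When relevance alone can't separate the candidates, the customer's context decides; here `RideSkillA` receives roughly 90% of the probability mass.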

Another way we’ve made interacting with Alexa more natural is by enabling her to handle compound requests, such as “Alexa, turn down the lights and play music”. Among other innovations, this required more efficient techniques for training semantic parsers, which analyze both the structure of a sentence and the meanings of its parts.

Alexa’s responses are also becoming more natural. This year, we began using neural networks for text-to-speech synthesis. This not only results in more-natural-sounding speech but makes it much easier to adapt Alexa’s TTS system to different speaking styles — a newscaster style for reading the news, a DJ style for announcing songs, or even celebrity voices, like Samuel L. Jackson’s.

3. More knowledgeable

Every day, Alexa answers millions of questions that she’s never been asked before, an indication of customers’ growing confidence in Alexa’s question-answering ability.

The core of Alexa’s knowledge base is a knowledge graph, which encodes billions of facts and has grown 20-fold over the past five years. But Alexa also draws information from hundreds of other sources.

And now, customers are helping Alexa learn through Alexa Answers, an online interface that lets people add to Alexa’s knowledge. In a private beta test and the first month of public release, Alexa customers have furnished Alexa Answers with hundreds of thousands of new answers, which have been shared with customers millions of times.

4. More context-aware and proactive

Today, through an optional feature called Hunches, Alexa can learn how you interact with your smart home and suggest actions when she senses that devices such as lights, locks, switches, and plugs are not in the states that you prefer. We are currently expanding the notion of Hunches to include another Alexa feature called Routines. If you set your alarm for 6:00 a.m. every weekday, for example, and on waking you immediately ask for the weather, Alexa will suggest creating a Routine that sets the weekday alarm to 6:00 a.m. and plays the weather report as soon as the alarm goes off.
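How Hunches and Routine suggestions work internally isn't described here. As an illustrative assumption only, the Routines example can be read as a simple pattern-mining rule: the same action follows the alarm within a few minutes on enough distinct days. The event names, the five-minute window, and the five-day threshold below are all hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Set, Tuple

Event = Tuple[datetime, str]  # (timestamp, action name)


def suggest_routine(events: List[Event], trigger: str,
                    window: timedelta = timedelta(minutes=5),
                    min_days: int = 5) -> Optional[Tuple[str, str]]:
    """Propose a (trigger, follow-up) Routine if the same follow-up action
    occurs within `window` after `trigger` on at least `min_days` distinct days."""
    events = sorted(events)
    days_seen: Dict[str, Set] = {}  # follow-up action -> dates on which it followed the trigger
    for i, (t, action) in enumerate(events):
        if action != trigger:
            continue
        for t2, nxt in events[i + 1:]:
            if t2 - t > window:
                break  # events are sorted, so nothing later can fall in the window
            if nxt != trigger:
                days_seen.setdefault(nxt, set()).add(t.date())
    if not days_seen:
        return None
    best = max(days_seen, key=lambda a: len(days_seen[a]))
    return (trigger, best) if len(days_seen[best]) >= min_days else None


# Hypothetical log: alarm dismissed at 6:00, weather requested at 6:02, Monday to Friday.
monday = datetime(2019, 11, 4, 6, 0)
log: List[Event] = []
for d in range(5):
    t = monday + timedelta(days=d)
    log += [(t, "alarm_dismissed"), (t + timedelta(minutes=2), "ask_weather")]
print(suggest_routine(log, trigger="alarm_dismissed"))  # ('alarm_dismissed', 'ask_weather')
```

Requiring the pattern on several distinct days, rather than counting raw occurrences, keeps a single unusual morning from triggering a suggestion.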

Earlier this year, we launched Alexa Guard, a feature that you can activate when you leave the house. If your Echo device detects the sound of a smoke alarm, a carbon monoxide alarm, or glass breaking, Alexa Guard sends you an alert. Guard’s acoustic-event-detection model uses multitask learning, which reduces the amount of labeled data needed for training and makes the model more compact.
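Guard's model isn't specified beyond its use of multitask learning. The sketch below assumes the most common form, hard parameter sharing: a single encoder feeds one small output head per sound event. It covers the forward pass and loss only (no training loop), with random untrained weights, no bias terms, and hypothetical layer sizes and task names; it is not Guard's actual architecture.

```python
import math
import random
from typing import Dict, List

Matrix = List[List[float]]


def linear(x: List[float], w: Matrix) -> List[float]:
    """Matrix-vector product: one output per row of `w` (biases omitted for brevity)."""
    return [sum(xi * wi for xi, wi in zip(x, row)) for row in w]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class MultitaskDetector:
    """Hard parameter sharing: one encoder shared by all tasks, one tiny head per task."""

    def __init__(self, n_features: int, n_hidden: int, tasks: List[str], seed: int = 0):
        rng = random.Random(seed)
        self.encoder = random_matrix(n_hidden, n_features, rng)           # shared weights
        self.heads = {t: random_matrix(1, n_hidden, rng) for t in tasks}  # per-task weights

    def predict(self, features: List[float]) -> Dict[str, float]:
        hidden = [max(0.0, h) for h in linear(features, self.encoder)]  # ReLU
        return {t: 1.0 / (1.0 + math.exp(-linear(hidden, w)[0])) for t, w in self.heads.items()}

    def n_params(self) -> int:
        return (sum(len(r) for r in self.encoder)
                + sum(len(r) for w in self.heads.values() for r in w))


def multitask_loss(model: MultitaskDetector, features: List[float],
                   labels: Dict[str, int]) -> float:
    """Sum of binary cross-entropies over the tasks labeled for this clip.
    Unlabeled tasks are skipped, so a clip labeled for only one event still
    contributes a training signal to the shared encoder."""
    probs = model.predict(features)
    return -sum(y * math.log(probs[t]) + (1 - y) * math.log(1 - probs[t])
                for t, y in labels.items())


tasks = ["smoke_alarm", "co_alarm", "glass_break"]
model = MultitaskDetector(n_features=40, n_hidden=64, tasks=tasks)
clip = [0.1] * 40  # stand-in for one frame of acoustic features
print(model.n_params())
print(multitask_loss(model, clip, {"glass_break": 1}))
```

With 40 input features and 64 hidden units, the shared model has 2,752 weights versus 7,872 for three separate single-task models of the same shape, which illustrates the compactness the paragraph mentions. Because every task's loss flows through the shared encoder, labels for one event can also shape the features the other heads rely on.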

This fall, we will begin previewing an extended version of Alexa Guard that recognizes additional sounds associated with activity, such as footsteps, talking, coughing, or doors closing. Customers can also create Routines that include Guard — activating Guard automatically during work hours, for instance.

5. More conversational

Customers want Alexa to do more for them than complete one-shot requests like “Alexa, play Duke Ellington” or “Alexa, what’s the weather?” This year, we have improved Alexa’s ability to carry context from one request to another, the way humans do in conversation.

For instance, if an Alexa customer asks, “When is The Addams Family playing at the Bijou?” and then follows up with the question “Is there a good Mexican restaurant near there?”, Alexa needs to know that “there” refers to the Bijou. Some of our recent work in this area won one of the two best-paper awards at the Association for Computational Linguistics’ Workshop on Natural-Language Processing for Conversational AI. The key idea is to jointly model the salient entities with transformer networks that use a self-attention mechanism.

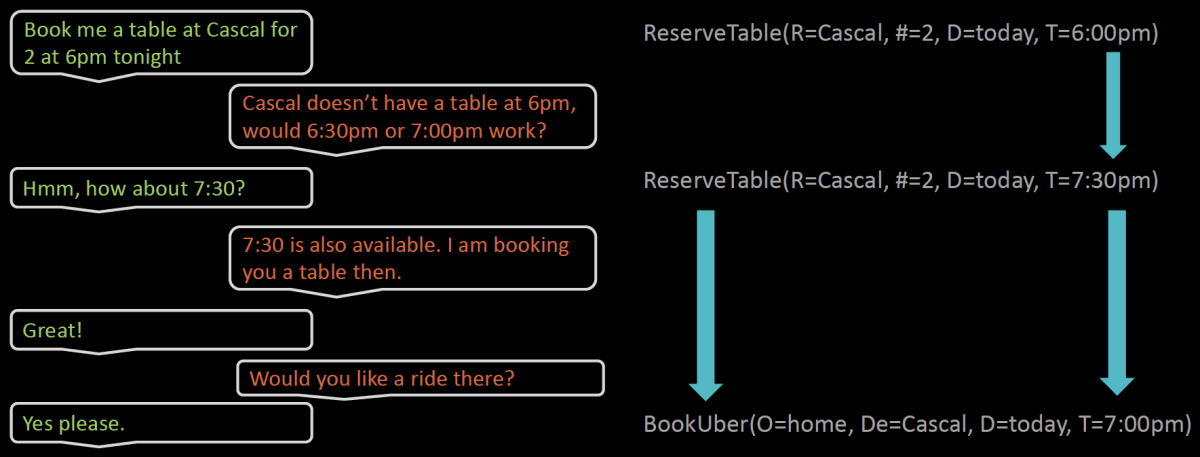
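The award-winning model is a full transformer; the sketch below shows only the generic self-attention computation it builds on, in which every token scores every other token, so a word like "there" can draw its representation from an earlier entity. Learned projections are omitted (queries, keys, and values are the inputs themselves) and the embeddings are hand-picked toy values, so the result illustrates the mechanism, not how Alexa actually resolves references.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: List[Vector]) -> Tuple[List[Vector], List[List[float]]]:
    """Scaled dot-product self-attention over a sequence of token vectors.
    Queries, keys, and values are the inputs themselves (identity projections),
    which a real transformer would replace with learned weight matrices."""
    d = len(x[0])
    weights = [softmax([sum(q_i * k_i for q_i, k_i in zip(q, k)) / math.sqrt(d) for k in x])
               for q in x]
    outputs = [[sum(w * v[j] for w, v in zip(row, x)) for j in range(d)] for row in weights]
    return outputs, weights


# Hand-picked 2-d embeddings, chosen so that "there" resembles "Bijou".
tokens = ["Bijou", "restaurant", "there"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
_, attn = self_attention(embeddings)
row = attn[tokens.index("there")]
print(tokens[row.index(max(row))])  # Bijou
```

Each row of attention weights sums to 1, so every output vector is a weighted average of the inputs, dominated by the tokens most similar to the query.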

However, completing complex tasks that require back-and-forth interaction and anticipation of the customer’s latent goals is still a challenging problem. For example, a customer using Alexa to plan a night out would have to use different skills to find a movie, a restaurant near the theater, and a ride-sharing service, coordinating times and locations.

We are currently testing a new deep-learning-based technology, called Alexa Conversations, with a small group of skill developers who are using it to build high-quality multiturn experiences with minimal effort. The developer supplies Alexa Conversations with a set of sample dialogues, and a simulator expands it into 100 times as much data. Alexa Conversations then uses that data to train a bleeding-edge deep-learning model to predict dialogue actions, without the need for a priori hand-authored rules.
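The post says only that a simulator expands the developer's sample dialogues about 100-fold. The simplest form such expansion could take, assumed here purely for illustration, is filling slot placeholders with values from a catalog; the real simulator is not described in the post, and this sketch ignores any variation beyond slot values. The dialogue, catalog, and function name are hypothetical.

```python
import itertools
import random
from typing import Dict, List

Dialogue = List[str]  # alternating customer / Alexa turns with {slot} placeholders


def expand(samples: List[Dialogue], slot_values: Dict[str, List[str]],
           factor: int = 100, seed: int = 0) -> List[Dialogue]:
    """Fill each sample's placeholders with every combination of catalog values,
    then keep a random subset of at most `factor` times the number of samples."""
    names = sorted(slot_values)
    combos = itertools.product(*(slot_values[n] for n in names))
    fills = [dict(zip(names, combo)) for combo in combos]
    generated = [[turn.format(**fill) for turn in dialogue]
                 for dialogue in samples for fill in fills]
    random.Random(seed).shuffle(generated)
    return generated[: factor * len(samples)]


sample = [
    "Find tickets for {movie} at {time}.",
    "Which theater?",
    "The {theater}.",
    "Booked {movie} at the {theater} at {time}.",
]
catalog = {
    "movie": [f"Movie {i}" for i in range(5)],
    "theater": ["Bijou", "Rialto", "Odeon", "Palace", "Majestic"],
    "time": ["6 pm", "7 pm", "8 pm", "9 pm", "10 pm"],
}
data = expand([sample], catalog)
print(len(data))  # 100: one sample expanded 100-fold (from 125 possible fills)
```

The expanded dialogues would then serve as training data for the dialogue-action model, in place of hand-authored rules.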

At re:MARS, we demonstrated a new Night Out planning experience that uses Alexa Conversations technology and novel skill-transitioning algorithms to automatically coordinate conversational planning tasks across multiple skills.

We’re also adapting Alexa Conversations technology to the new concierge feature for Ring video doorbells. With this technology, the doorbell can engage in short conversations on your behalf, taking messages or telling a delivery person where to leave a package. We’re working hard to bring both of these experiences to customers.

What will the next five years look like?

Five years ago, it was inconceivable to us that customers would be interacting with Alexa billions of times per week and that developers would, on their own, build 100,000-plus skills. Such adoption is inspiring our teams to invent at an even faster pace, creating novel experiences that will increase utility and further delight our customers.

1. Alexa everywhere

The Echo family of devices and Alexa’s integration into third-party products have made Alexa a part of millions of homes worldwide. We have been working hard to bring the convenience that Alexa brought to the home to our customers on the go. Echo Buds, Echo Auto, and the Day 1 Editions of Echo Loop and Echo Frames are already demonstrating that Alexa-on-the-go can simplify our lives even further.

With greater portability comes greater risk of slow or lost Internet connections. Echo devices with built-in smart-home hubs already have a hybrid mode, which allows them to do some spoken-language processing when they can’t rely on Alexa’s cloud-based models. This is an important area of ongoing research for us. For instance, we are investigating new techniques for compressing Alexa’s machine learning models so that they can run on-device.
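The post doesn't name the compression techniques under investigation. Post-training 8-bit quantization is one widely used option, sketched here as an assumption rather than as Alexa's method: storing each weight as an 8-bit integer plus one shared scale cuts model size roughly fourfold relative to 32-bit floats.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map each float to an integer in
    [-127, 127] using one shared scale, so each weight needs 8 bits instead of 32."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero for all-zero tensors
    scale = max_abs / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate reconstruction of the original weights."""
    return [v * scale for v in q]


w = [0.5, -1.2, 0.03, 0.9]  # hypothetical layer weights
q, scale = quantize_int8(w)
error = max(abs(a - b) for a, b in zip(w, dequantize(q, scale)))
print(q, error <= scale / 2)
```

Per-weight reconstruction error is at most half the scale; production schemes typically reduce it further with per-channel scales or quantization-aware training.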

The new on-the-go hardware isn’t the only way that Alexa is becoming more portable. The new Guest Connect experience allows you to log into your Alexa account from any Echo device — even ones you don’t own — and play your music or preferred news.

2. Moving up the AI stack

Alexa’s unparalleled customer and developer adoption provides new challenges for AI research. In particular, to further shift the cognitive load from customers to AI, we must move up the AI stack, from predictions (e.g., extracting customers’ intents) to more contextual reasoning.

One of our goals is to seamlessly connect disparate skills to increase convenience for our customers. Alexa Conversations and the Night Out experience are the first steps in that direction, completing complex tasks across multiple services and skills.

To enable the same kind of interoperability across different AIs, we helped found the Voice Interoperability Initiative, a consortium of dozens of tech companies uniting to promote customer choice by supporting multiple, interoperable voice services on a single device.

Alexa will also make better decisions by factoring in more information about the customer’s context and history. For instance, when a customer asks an Alexa-enabled device in a hotel room “Alexa, what are the pool hours?”, Alexa needs to respond with the hours for the hotel pool and not the community pool.

We are inspired by the success of learning directly from customers through the self-learning techniques I described earlier. This is an important area where we will continue to incorporate new signals, such as vocal frustration with Alexa, and learn from direct and indirect feedback to make Alexa more accurate.

3. Alexa for everyone

As AI systems like Alexa become an indispensable part of our social fabric, bias mitigation and fairness in AI will require even deeper attention. Our goal is for Alexa to work equally well for all our customers. In addition to our own research, we’ve entered into a three-year collaboration with the National Science Foundation to fund research on fairness in AI.

We envision a future where anyone can create conversational-AI systems. With the Alexa Skills Kit and Alexa Voice Service, we made it easy for developers to innovate using Alexa’s AI. Even end users can build personal skills within minutes using Alexa Skill Blueprints.

We are also thrilled with the Alexa Prize competition, which is democratizing conversational AI by letting university students perform state-of-the-art research at scale. University teams are working on the ultimate conversational-AI challenge of creating “socialbots that can converse coherently and engagingly for 20 minutes with humans on a range of current events and popular topics”.

The third instance of the challenge is under way, and we are confident that the university teams will continue to push boundaries — perhaps even give their socialbots an original sense of humor, by far one of the hardest AI challenges.

Together with developers and academic researchers, we’ve made great strides in conversational AI. But there’s so much more to be accomplished. While the future is difficult to predict, one thing I am sure of is that the Alexa team will continue to invent on behalf of our customers.