Earlier this year, at Amazon’s re:MARS conference, Alexa head scientist Rohit Prasad unveiled Alexa Conversations, a new service that allows Alexa skill developers to more easily integrate conversational elements into their skills.

The announcement is an indicator of the next stage in Alexa’s evolution: more-natural, dialogue-based engagements that enable Alexa to aggregate data and refine requests to better meet customer needs.

At this year’s Interspeech, our group has a pair of papers that describe some of the ways that we are continuing to improve Alexa’s task-oriented dialogue systems, whose goal is to identify and fulfill customer requests.

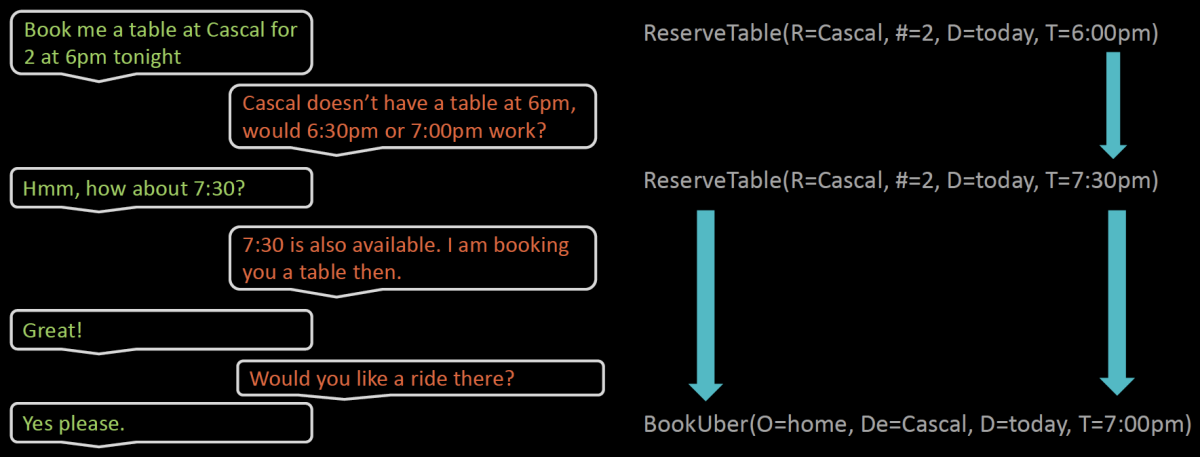

Two central functions of task-oriented dialogue systems are language understanding and dialogue state tracking, which means determining the goal of a conversation and gauging progress toward it. Language understanding involves classifying utterances as dialogue acts, such as requesting, informing, denying, repeating, confirming, and so on. State tracking involves tracking the status of slots, or entities mentioned during the dialogue; a restaurant-finding service, for instance, might include slots like Cuisine_Type, Restaurant_Location, Price_Range, and so on. Each of these functions is the subject of one of our Interspeech papers.

One of the goals of state tracking is to assign values to slot types. If, for instance, a user requests a reservation at an Indian restaurant on the south side of a city, the state tracker might fill the slots Cuisine_Type and Restaurant_Location with the values “Indian” and “south”.

If all the Indian restaurants on the south side are booked, the customer might expand the search area to the central part of the city. The Cuisine_Type slot would keep the value “Indian”, but the Restaurant_Location slot would get updated to “center”.
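
The slot updates in this example can be sketched in a few lines of Python. This is only an illustration of the bookkeeping, not of how a learned state tracker works: the `update_state` helper is a hypothetical name, and the slot values are supplied by hand rather than extracted from utterances.

```python
from typing import Dict, Optional

# A dialogue state maps each slot name to its current value (None = not yet filled).
DialogueState = Dict[str, Optional[str]]


def update_state(state: DialogueState, turn_slots: Dict[str, str]) -> DialogueState:
    """Return a new state in which slots mentioned in this turn overwrite old values."""
    new_state = dict(state)
    new_state.update(turn_slots)
    return new_state


# Slot names come from the restaurant example; update_state is a hypothetical helper.
state: DialogueState = {
    "Cuisine_Type": None,
    "Restaurant_Location": None,
    "Price_Range": None,
}

# Turn 1: the customer asks for an Indian restaurant on the south side.
state = update_state(state, {"Cuisine_Type": "Indian", "Restaurant_Location": "south"})

# Turn 2: everything is booked, so the customer widens the search to the center.
state = update_state(state, {"Restaurant_Location": "center"})
```

After the second turn, Cuisine_Type still holds “Indian”, Restaurant_Location holds “center”, and Price_Range remains unfilled.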

Traditionally, state trackers have been machine learning systems that produce probability distributions over all the possible values for a particular slot. After each dialogue turn, for instance, the state tracker might assign different probabilities to the possible Restaurant_Location values north, south, east, west, and center.
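
As a rough sketch of what such a distribution looks like, the snippet below turns per-value scores into probabilities with a softmax. The scores are made-up numbers standing in for the output of a trained model, and softmax is one common choice of normalization assumed here for illustration.

```python
import math
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Convert raw scores into a probability distribution over slot values."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {value: math.exp(score - highest) for value, score in scores.items()}
    total = sum(exps.values())
    return {value: e / total for value, e in exps.items()}


# Hypothetical scores for Restaurant_Location after one dialogue turn.
location_scores = {"north": 0.1, "south": 2.3, "east": -0.4, "west": 0.0, "center": 1.1}

location_probs = softmax(location_scores)
best_location = max(location_probs, key=location_probs.get)
```

The cost of this step grows with the number of possible values, which is manageable for five locations.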

This approach, however, runs into obvious problems in real-world applications like Alexa. With Alexa’s music service, for instance, the slot Song_Name could have millions of valid values. Calculating a distribution over all of them would be prohibitively time-consuming.

In work we presented last year, we described a state tracker that selects candidate slots and slot values from the dialogue history, so it doesn’t need to compute millions of probabilities at each turn. But while this approach scales more effectively, it tends to yield less accurate results.
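
The candidate-based idea can be illustrated with a minimal sketch: only values that have actually appeared in the dialogue are scored, so the work per turn depends on the length of the conversation rather than the size of the catalog. The function names, the Artist_Name slot, and the recency-based scorer are all assumptions made for illustration; in a real tracker a learned model would score the candidates.

```python
from typing import Callable, Dict, List, Optional, Tuple

# (slot, value, index of the turn in which the value was mentioned)
Candidate = Tuple[str, str, int]


def collect_candidates(history: List[Dict[str, str]]) -> List[Candidate]:
    """Gather every slot value mentioned so far, tagged with its turn index."""
    return [
        (slot, value, turn_index)
        for turn_index, turn in enumerate(history)
        for slot, value in turn.items()
    ]


def pick_value(
    slot: str,
    candidates: List[Candidate],
    score: Callable[[Candidate], float],
) -> Optional[str]:
    """Return the highest-scoring candidate value for a slot, or None if there is none."""
    slot_candidates = [c for c in candidates if c[0] == slot]
    if not slot_candidates:
        return None
    return max(slot_candidates, key=score)[1]


def recency_score(candidate: Candidate) -> float:
    """Toy scorer (an assumption): prefer the most recently mentioned value."""
    return float(candidate[2])


# Hypothetical dialogue history, one dict of mentioned slot values per turn.
history = [
    {"Song_Name": "Yesterday"},
    {"Artist_Name": "The Beatles"},
    {"Song_Name": "Let It Be"},
]

candidates = collect_candidates(history)
chosen_song = pick_value("Song_Name", candidates, recency_score)
```

Here only three candidates are scored, no matter how many songs the catalog contains.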

In our Interspeech paper on state tracking, we report a new hybrid system that we trained to decide, based on the input, between the full-distribution approach and the historical-candidate approach.

We tested it on a data set that featured 37 slots, nine of which could take on more than 100 values each. For eight of those slots, the model that extracted candidates from the conversational context yielded better results. For 27 of the remaining 28 slots, the model that produced full distributions fared better.
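
To make the pattern in these numbers concrete, here is a deliberately simplified sketch that routes each slot to one of the two approaches with a fixed size threshold. This is not the hybrid system itself, which is trained to make the decision based on the input; the threshold rule, the `choose_tracker` name, and the slot sizes are assumptions chosen to mirror the "more than 100 values" split.

```python
from typing import Dict

# Assumption: reuse the "more than 100 values" boundary from the test data set.
LARGE_SLOT_THRESHOLD = 100


def choose_tracker(num_values: int) -> str:
    """Pick a tracking approach for a slot from the size of its value set."""
    if num_values > LARGE_SLOT_THRESHOLD:
        return "candidate"  # score only values seen in the dialogue history
    return "full_distribution"  # score every possible value


# Hypothetical value-set sizes, for illustration only.
slot_sizes: Dict[str, int] = {
    "Restaurant_Location": 5,
    "Cuisine_Type": 90,
    "Song_Name": 2_000_000,
}

routing = {slot: choose_tracker(size) for slot, size in slot_sizes.items()}
```

By the counts reported above, a fixed rule like this would agree with the better-performing model on 35 of the 37 slots (8 + 27), which leaves two slots where a decision based only on slot size would go wrong.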

Allowing the system to decide on the fly which approach to adopt yielded a 24% improvement in state-tracking accuracy versus the previous state-of-the-art system.

Our paper on dialogue acts is geared toward automatically annotating conversations between human beings. After all, people have been talking to each other far longer than they’ve been talking to machines, and their conversations would be a plentiful source of data for training state trackers. Our three-step plan is to (1) use existing data sets with labeled dialogue acts to train a classifier; (2) use that classifier to label a large body of human-human interactions; (3) use the labeled human-human dialogues to train dialogue policies.

The problem with the first step is that existing data sets use different dialogue act tags: one, for instance, might use the tag “welcome”, while another uses “greet”; one might use the tag “recommend”, while another uses “offer”. Much of our Interspeech paper on dialogue acts concerns the development of a universal tagging scheme.
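
The tag mismatch can be pictured as a lookup table from each data set's own tags to a shared vocabulary. The sketch below uses the two tag pairs mentioned above; the data set names and the choice of which tag in each pair serves as the shared name are arbitrary assumptions, not the scheme from the paper.

```python
from typing import Dict

# Hypothetical data set names; the tag pairs are the ones given as examples above.
# Which tag in each pair becomes the shared name is an arbitrary choice here.
TAG_MAPS: Dict[str, Dict[str, str]] = {
    "dataset_a": {"welcome": "greet", "recommend": "offer"},
    "dataset_b": {"greet": "greet", "offer": "offer"},
}


def to_universal(dataset: str, tag: str) -> str:
    """Map a data-set-specific dialogue act tag to the shared tag set.

    Tags with no entry in the table are passed through unchanged.
    """
    return TAG_MAPS[dataset].get(tag, tag)


retagged = [to_universal("dataset_a", t) for t in ["welcome", "recommend", "confirm"]]
```

Once every data set is expressed in the same tags, examples from all of them can be pooled to train a single classifier.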

We began by manually aligning tags from three different human-machine data sets. Using a single, reconciled set of tags, we then re-tagged the data in all three sets. Next, we trained a classifier on each of the three data sets and used it to predict tags in the other two. This enabled us to identify cases in which our provisional scheme failed to generalize across data sets.
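
The cross-data-set check can be sketched as a loop over ordered pairs of data sets: train on one, predict on another, and record the accuracy. Everything below is a toy stand-in, assuming a word-voting classifier and three tiny invented data sets that have already been re-tagged with shared tags; it shows the shape of the experiment, not the models or data used in the paper.

```python
from collections import Counter, defaultdict
from itertools import permutations
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (utterance, dialogue act tag)


def train(examples: List[Example]) -> Dict[str, str]:
    """Toy classifier: map each word to the tag it most often appears with."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for text, tag in examples:
        for word in text.lower().split():
            counts[word][tag] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}


def predict(model: Dict[str, str], text: str, default: str = "unknown") -> str:
    """Let each known word vote for its tag; fall back to a default tag."""
    votes = Counter(model[w] for w in text.lower().split() if w in model)
    return votes.most_common(1)[0][0] if votes else default


def accuracy(model: Dict[str, str], examples: List[Example]) -> float:
    return sum(predict(model, text) == tag for text, tag in examples) / len(examples)


# Three tiny invented data sets, already re-tagged with shared tags.
datasets: Dict[str, List[Example]] = {
    "a": [("hello there", "greet"), ("i suggest the curry house", "offer")],
    "b": [("hello", "greet"), ("i suggest thai palace", "offer")],
    "c": [("good morning", "greet"), ("how about the curry house", "offer")],
}

# Train on each data set and evaluate on each of the other two.
cross_scores = {
    (source, target): accuracy(train(datasets[source]), datasets[target])
    for source, target in permutations(datasets, 2)
}
```

A low score for a particular pair, such as training on "a" and testing on "c" in this toy setup, points to utterances or tags that do not transfer between data sets and are worth inspecting.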

On the basis of those results, we made several further modifications to the tagging scheme, sometimes combining separate tags into one, sometimes splitting single tags into two. This enabled us to squeeze out another 1% to 3% in tag prediction accuracy.

To test the applicability of our universal scheme, we used a set of annotated human-human interaction data. First, we trained a dialogue act classifier on our three human-machine data sets, re-tagged according to our universal scheme. Then we stripped the tags out of the human-human data set and used the classifier to re-annotate it. Finally, we trained two new classifiers, one on the re-tagged human-human data and one on the original, hand-annotated human-human data.

We found that we required about 1,700 hand-annotated examples from the original data set to produce a dialogue act classifier that was as accurate as one trained on our machine-annotated data. In other words, we got for free what had previously required human annotators to manually tag 1,700 rounds of dialogue.

Acknowledgments: Rahul Goel, Shachi Paul, Karthik Gopalakrishnan, Behnam Hedayatnia, Qinlang Chen, Anna Gottardi, Sanjeev Kwatra, Anu Venkatesh, Raefer Gabriel