The first draft of this blog post was generated by Amazon Nova Pro, based on detailed instructions from Amazon Science editors and multiple examples of prior posts.

In a paper we're presenting at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR), we introduce a new approach to image segmentation that scales across diverse datasets and tasks. Traditional segmentation models, while effective on isolated tasks, often struggle as the number of new tasks or unfamiliar scenarios grows. Our proposed method, which uses a model we call a mixed-query transformer (MQ-Former), aims to enable joint training and evaluation across multiple tasks and datasets.

Scalable segmentation

Image segmentation is a computer vision task that involves partitioning an image into distinct regions or segments. Each segment corresponds to a different object or part of the scene. There are several types of segmentation tasks, including foreground/background segmentation (distinguishing objects at different distances), semantic segmentation (labeling each pixel as belonging to a particular object class), and instance segmentation (identifying each pixel as belonging to a particular instance of an object class).
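To make the difference between semantic and instance segmentation concrete, here is a minimal sketch on a toy 3-by-4 image. The pixel grid, the class names, and the helper `semantic_from_instances` are illustrative assumptions, not something taken from the paper.

```python
# Toy instance map: one instance ID per pixel (0 = background).
# Instances 1 and 2 are both cats; instance 3 is a dog.
instance_map = [
    [1, 1, 0, 2],
    [1, 0, 0, 2],
    [0, 3, 3, 0],
]
instance_to_class = {0: "background", 1: "cat", 2: "cat", 3: "dog"}


def semantic_from_instances(instance_map, instance_to_class):
    """Collapse an instance map into a semantic map (one class label per pixel)."""
    return [[instance_to_class[i] for i in row] for row in instance_map]


semantic_map = semantic_from_instances(instance_map, instance_to_class)

# Semantic segmentation sees a single "cat" class;
# instance segmentation keeps the two cats apart.
num_classes = len({c for row in semantic_map for c in row} - {"background"})
num_instances = len({i for row in instance_map for i in row} - {0})
print(num_classes, num_instances)  # 2 3
```

The same pixels yield two object classes but three object instances, which is why the two tasks call for different kinds of output.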

“Scalability” means that a segmentation model can effectively improve with an increase in the size of its training dataset, in the diversity of the tasks it performs, or both. Most prior research has focused on one or the other — data or task diversity. We address both at once.

A tale of two queries

In our paper, we show that one issue preventing effective scalability in segmentation models is the design of object queries. An object query is a way of representing a hypothesis about objects in a scene — a hypothesis that can be tested against images.

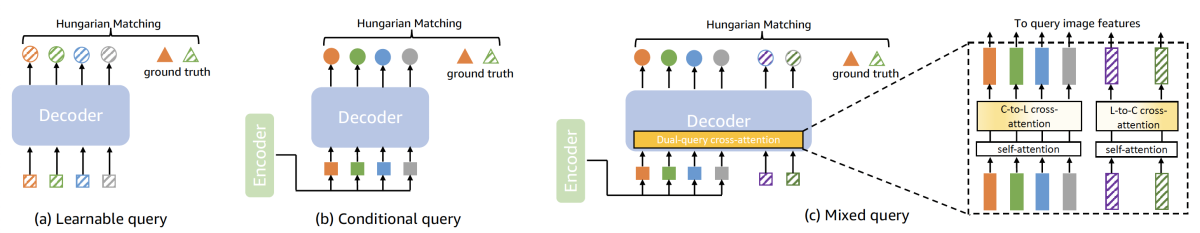

There are two main types of object queries. The first type, which we refer to as “learnable queries”, consists of learned vectors that interact with image features and encode information about location and object class. Learnable queries tend to perform well on semantic segmentation, as they do not contain object-specific priors.

The second type of object query, which we refer to as a conditional query, is akin to two-stage object detection: region proposals are generated by a Transformer encoder, and then high-confidence proposals are fed into the Transformer decoder as queries to generate the final prediction. Conditional queries are closely aligned with the object classes and excel at object detection and instance segmentation on semantically well-defined objects.
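The proposal-selection step behind conditional queries can be sketched roughly as follows. This is a simplified illustration, not the paper's implementation: the function name `select_conditional_queries`, the two-dimensional features, and the confidence scores are all hypothetical.

```python
def select_conditional_queries(proposal_features, proposal_scores, k):
    """Return the features of the k highest-scoring proposals, best first."""
    ranked = sorted(
        range(len(proposal_scores)),
        key=lambda i: proposal_scores[i],
        reverse=True,
    )
    top = ranked[:k]
    return [proposal_features[i] for i in top], top


# Hypothetical encoder output: four region proposals with 2-D features
# and one confidence score each.
features = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5], [0.3, 0.7]]
scores = [0.20, 0.95, 0.60, 0.05]

# The two most confident proposals become the decoder's conditional queries.
queries, kept = select_conditional_queries(features, scores, k=2)
print(kept)  # [1, 2]
```

Because the queries are built from proposals the encoder has already scored, they start out tied to specific image regions, unlike learnable queries, which are shared across all images.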

Our approach is to combine both types of queries, which improves the model’s ability to transfer across tasks. Our MQ-Former model represents inputs using both learnable queries and conditional queries, and every layer of the decoder has a cross-attention mechanism, so that the processing of the learnable queries can factor in information from the conditional-query processing, and vice versa.
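One plausible way to picture the mixed-query idea is a decoder layer that processes both query types as a single sequence. The sketch below is an assumption-laden simplification: it omits the learned projections, residual connections, and normalization a real Transformer layer has, and the names `attend` and `mixed_query_layer` are hypothetical rather than taken from the paper.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys, values):
    """Scaled dot-product attention: each query becomes a weighted mix of values."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        out.append(
            [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]
        )
    return out


def mixed_query_layer(learnable, conditional, image_features):
    """One simplified decoder layer over the concatenated query set."""
    mixed = learnable + conditional                         # both query types in one sequence
    mixed = attend(mixed, mixed, mixed)                     # queries exchange information
    mixed = attend(mixed, image_features, image_features)   # queries read the image features
    return mixed[:len(learnable)], mixed[len(learnable):]


# Hypothetical inputs: two learnable queries, one conditional query, three image features.
learnable = [[1.0, 0.0], [0.0, 1.0]]
conditional = [[0.5, 0.5]]
image_features = [[0.2, 0.8], [0.9, 0.1], [0.4, 0.4]]

new_learnable, new_conditional = mixed_query_layer(learnable, conditional, image_features)
print(len(new_learnable), len(new_conditional))  # 2 1
```

Since the attention step runs over the joined sequence, every updated learnable query depends on the conditional queries and vice versa, which is the interaction the mixed-query design relies on.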

Leveraging synthetic data

Mixed queries aid scalability across segmentation tasks, but the other aspect of scalability in segmentation models is dataset size. One of the key challenges in scaling up segmentation models is the scarcity of high-quality, annotated data. To overcome this limitation, we propose leveraging synthetic data.

While segmentation data is scarce, object recognition data is plentiful. Object recognition datasets typically include bounding boxes, or rectangles that identify the image regions in which labeled objects can be found.

Asking a trained segmentation model to segment only the object within a bounding box significantly improves performance; we are thus able to use weaker segmentation models to convert object recognition datasets into segmentation datasets that can be used to train stronger segmentation models.
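As a rough illustration of why a bounding box helps, the sketch below restricts a model's per-pixel foreground scores to the annotated box before thresholding them into a mask. It is a stand-in for the idea, not the procedure used in the paper: the scores are made up, and `mask_within_box` is a hypothetical helper.

```python
def mask_within_box(score_map, box, threshold=0.5):
    """Binarize per-pixel scores, keeping only pixels inside the bounding box.

    box is (x_min, y_min, x_max, y_max), inclusive, in pixel coordinates.
    """
    x_min, y_min, x_max, y_max = box
    return [
        [
            1 if (x_min <= x <= x_max and y_min <= y <= y_max and score >= threshold) else 0
            for x, score in enumerate(row)
        ]
        for y, row in enumerate(score_map)
    ]


# Hypothetical foreground scores from a weaker segmentation model.
scores = [
    [0.9, 0.1, 0.1, 0.8],
    [0.2, 0.7, 0.9, 0.1],
    [0.1, 0.8, 0.6, 0.1],
]

# Detection-dataset annotation: the object lies in columns 1-2, rows 1-2.
pseudo_mask = mask_within_box(scores, box=(1, 1, 2, 2))
for row in pseudo_mask:
    print(row)
# [0, 0, 0, 0]
# [0, 1, 1, 0]
# [0, 1, 1, 0]
```

The high scores in the top corners fall outside the box and are discarded, so the resulting pseudo-mask is cleaner than the raw prediction and can serve as a training label.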

Bounding boxes can also focus automatic captioning models on regions of interest in an image, to provide the type of object classifications necessary to train semantic-segmentation and instance-segmentation models.

Experimental results

We evaluated our approach using 15 datasets covering a range of segmentation tasks and found that, with MQ-Former, scaling up both the volume of training data and the diversity of tasks consistently enhances the model’s segmentation capabilities.

For example, on the SeginW benchmark, which includes 25 datasets used for open-vocabulary in-the-wild segmentation evaluation, scaling the data and tasks from 100,000 samples to 600,000 boosted performance by 16%, as measured by average precision of object masking. Incorporating synthetic data improved performance by another 14%, establishing a new state of the art.