Time series forecasting is crucial to numerous applications in business, science, and engineering. Recently, foundation models have led to a paradigm shift in time series forecasting. Unlike statistical models, which extrapolate from a single time series, or earlier deep-learning models, which were trained on specific tasks, time series foundation models (TSFMs) are trained once on large-scale time series data and then applied across forecasting problems.

Since their initial release, Amazon’s TSFMs, Chronos and Chronos-Bolt, have been collectively downloaded over 600 million times from Hugging Face, demonstrating the popularity of TSFMs and their applicability to a wide range of forecasting scenarios.

Despite their success, existing TSFMs have a key limitation: they support only univariate forecasting, predicting a single time series at a time. Although univariate forecasting is important, many scenarios require additional capabilities. Real-world forecasting problems often involve predicting multiple coevolving time series simultaneously (multivariate forecasting) or incorporating external factors that influence outcomes (covariate-informed forecasting). For example, cloud infrastructure metrics such as CPU usage, memory consumption, and storage I/O evolve together and benefit from joint modeling. Likewise, retail demand is heavily influenced by promotional activities, while energy consumption patterns are driven by weather conditions.

To address this limitation, we are introducing Chronos-2, a foundation model designed to handle arbitrary forecasting tasks (univariate, multivariate, and covariate-informed) in a zero-shot manner. Chronos-2 leverages in-context learning (ICL) to enable these capabilities without additional training.

For multivariate forecasting, Chronos-2 can jointly predict multiple coevolving time series, capturing dependencies that improve overall accuracy. For instance, cloud operations teams can jointly forecast CPU usage, memory consumption, and storage I/O to anticipate resource bottlenecks before they occur.

For covariate-informed forecasting, Chronos-2 can incorporate external factors that influence predictions. The model supports past-only covariates (like historical traffic volume that signals upcoming trends) and known future covariates (like scheduled promotions or weather forecasts). It also handles categorical covariates, such as specific holidays or promotion types. For example, a retailer can forecast demand while accounting for planned promotional campaigns and holiday schedules to optimize inventory levels.
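To make the distinction between covariate types concrete, here is a minimal sketch of how a single covariate-informed task could be laid out. The `ForecastTask` container and its field names are hypothetical and are not the Chronos-2 input format; the key assumption it encodes is that past-only covariates end where the target history ends, while known future covariates extend across the forecast horizon.

```python
from dataclasses import dataclass, field

import numpy as np


@dataclass
class ForecastTask:
    """Hypothetical container for one covariate-informed forecasting task (not the Chronos-2 API)."""

    target: np.ndarray  # shape (T,): observed history of the series to forecast
    past_covariates: dict = field(default_factory=dict)    # name -> array of length T (known only up to now)
    future_covariates: dict = field(default_factory=dict)  # name -> array of length T + H (known into the horizon)
    horizon: int = 1  # H: number of future steps to forecast

    def validate(self) -> None:
        """Check that each covariate covers the time span its type implies."""
        T, H = len(self.target), self.horizon
        for name, values in self.past_covariates.items():
            if len(values) != T:
                raise ValueError(f"past covariate {name!r} must have length {T}")
        for name, values in self.future_covariates.items():
            if len(values) != T + H:
                raise ValueError(f"future covariate {name!r} must have length {T + H}")


T, H = 8, 3
task = ForecastTask(
    target=np.array([120, 118, 130, 125, 140, 160, 155, 150], dtype=float),
    # Past-only: web traffic is observed historically but not known in advance.
    past_covariates={"web_traffic": np.array([900, 880, 950, 940, 1000, 1200, 1150, 1100], dtype=float)},
    future_covariates={
        # Categorical and known in advance: the planned promotion schedule.
        "promotion_type": np.array(["none"] * 5 + ["bogo"] * 2 + ["none"] * 2 + ["discount"] * 2),
        # Numeric and known in advance: a weather forecast.
        "temperature_forecast": np.linspace(18.0, 24.0, T + H),
    },
    horizon=H,
)
task.validate()
```

The length check is the practical difference between the two covariate types: a known future covariate must supply values for every step being forecast, which is what lets the model condition on, say, a scheduled promotion.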

Chronos-2’s enhanced ICL capabilities also improve univariate forecasting by enabling cross-learning, where the model shares information across univariate time series, leading to more accurate predictions. This is particularly valuable for cold-start scenarios: a logistics company opening a new distribution center can leverage patterns from existing facilities to generate accurate forecasts, even with minimal operational history.

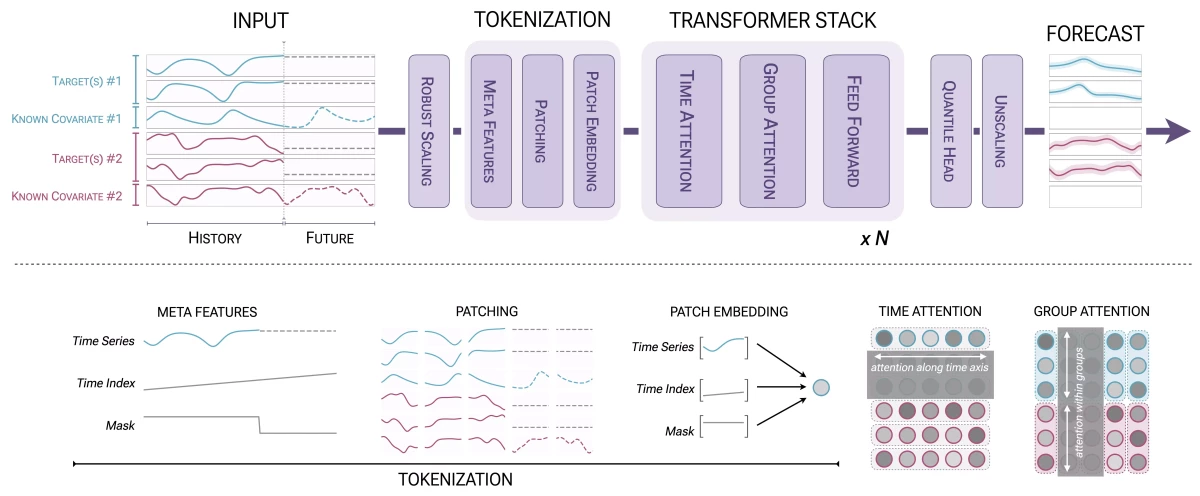

Building a universal TSFM like Chronos-2 required innovation on two fronts: model architecture and training data. Downstream forecasting tasks differ in their number of dimensions and their semantic content. Since it is impossible to know a priori how the variables will interact in an unseen task, the model must infer these interactions from the available context.

Our group attention mechanism accounts for such interactions through information exchange within arbitrary-sized groups of time series. For example, if Chronos-2 is forecasting cloud metrics, CPU usage patterns can inform memory consumption predictions. Group attention can also factor in covariates, using, say, information from promotional schedules to help predict demand.
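The idea of exchanging information only within a group can be illustrated with masked attention. The sketch below is our own single-head simplification, not the Chronos-2 implementation: it assumes one embedding per series at a fixed time position, random projection matrices in place of learned weights, and a hypothetical integer `group_ids` label per series. Attention scores between series in different groups are masked out, so each series attends only to its own group.

```python
import numpy as np


def group_attention(x, group_ids, w_q, w_k, w_v):
    """Single-head attention across series, restricted to series sharing a group id.

    x: (N, d) array, one embedding per series at a fixed time/patch position.
    group_ids: (N,) integer group label per series.
    Returns the attended outputs (N, d) and the attention weights (N, N).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Block attention between series that belong to different groups.
    same_group = group_ids[:, None] == group_ids[None, :]
    scores = np.where(same_group, scores, -np.inf)
    # Softmax over each row; the diagonal is always unmasked, so every row max is finite.
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
N, d = 5, 4
x = rng.normal(size=(N, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))

# Series 0-2: CPU, memory, storage I/O of one host (one group).
# Series 3-4: retail demand and its promotion covariate (another group).
group_ids = np.array([0, 0, 0, 1, 1])
out, weights = group_attention(x, group_ids, w_q, w_k, w_v)
```

The grouping also shows how the same mechanism can cover different task types: giving every series its own group reduces the weights to the identity (purely univariate treatment), while placing several related univariate series in one group lets them share information, a rough analogue of the cross-learning described earlier.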

The training corpus is as critical as the architectural innovations. A universal TSFM must be trained on heterogeneous time series tasks, but high-quality pretraining data with multivariate dependencies and informative covariates is scarce. To address this issue, we rely on synthetic time series data generated by imposing a multivariate structure on time series sampled from base univariate generators.
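One simple way to impose multivariate structure on independently generated univariate series is to mix them linearly, so that output series sharing base components become correlated. The sketch below shows that idea only; the three base generators (random walk, noisy seasonal, AR(1)) and the random linear mixing are illustrative assumptions, not the generators or transformations used to build the Chronos-2 training corpus.

```python
import numpy as np


def sample_univariate(rng, length):
    """Draw one series from a randomly chosen simple base generator (illustrative choices)."""
    t = np.arange(length)
    kind = rng.integers(3)
    if kind == 0:  # random walk
        return np.cumsum(rng.normal(size=length))
    if kind == 1:  # noisy seasonal pattern with a random period
        period = rng.integers(7, 30)
        return np.sin(2 * np.pi * t / period) + 0.1 * rng.normal(size=length)
    # AR(1) process with a random stationary coefficient
    phi = rng.uniform(-0.9, 0.9)
    noise = rng.normal(size=length)
    series = np.zeros(length)
    for i in range(1, length):
        series[i] = phi * series[i - 1] + noise[i]
    return series


def make_multivariate(rng, n_base, n_out, length):
    """Impose cross-series dependence by linearly mixing independent base series."""
    base = np.stack([sample_univariate(rng, length) for _ in range(n_base)])  # (n_base, length)
    mixing = rng.normal(size=(n_out, n_base))  # each output is a random combination of the bases
    return mixing @ base  # (n_out, length)


rng = np.random.default_rng(42)
panel = make_multivariate(rng, n_base=2, n_out=4, length=200)
```

Because four outputs are built from only two base series, they are strongly interdependent: the panel has rank at most two, which is exactly the kind of cross-series structure a model would need to infer from context.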

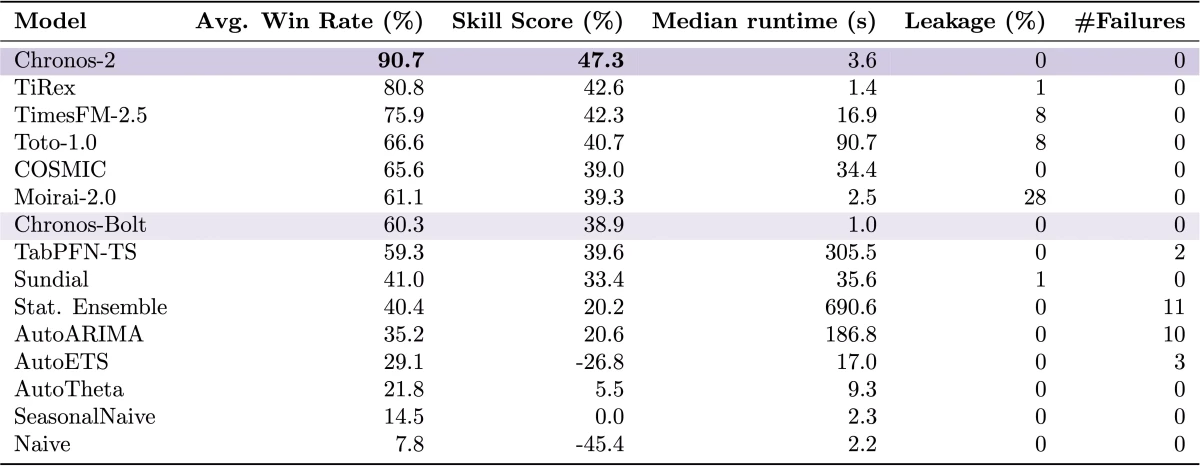

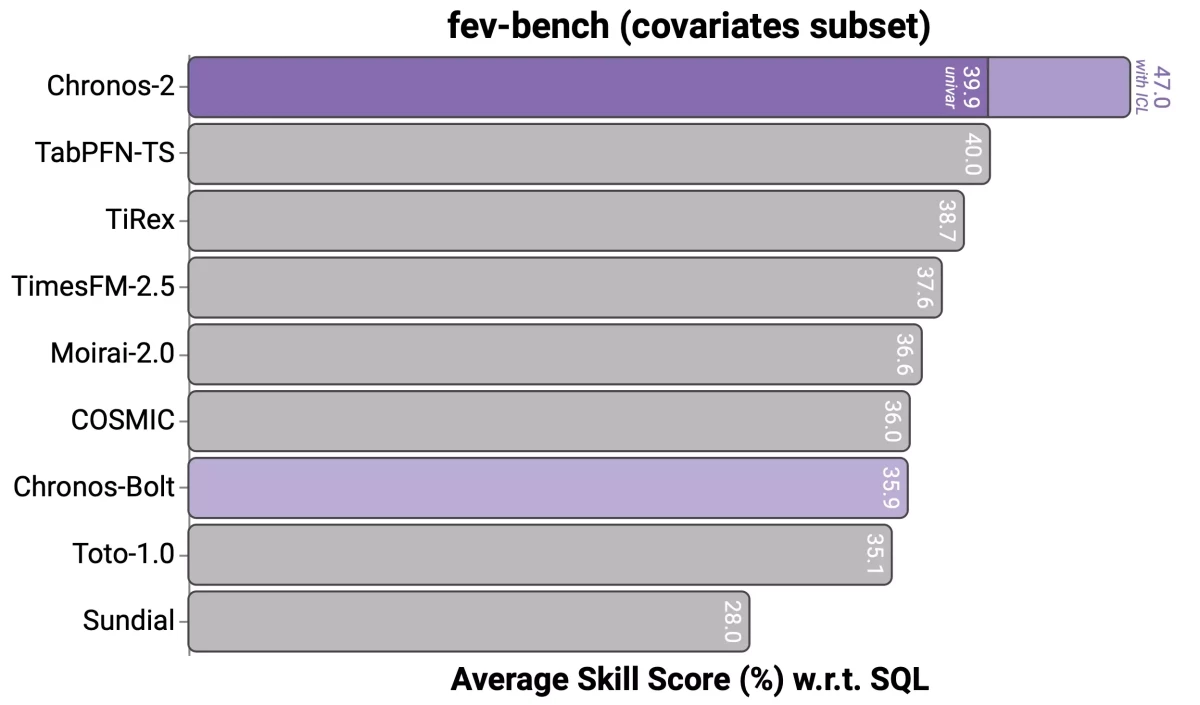

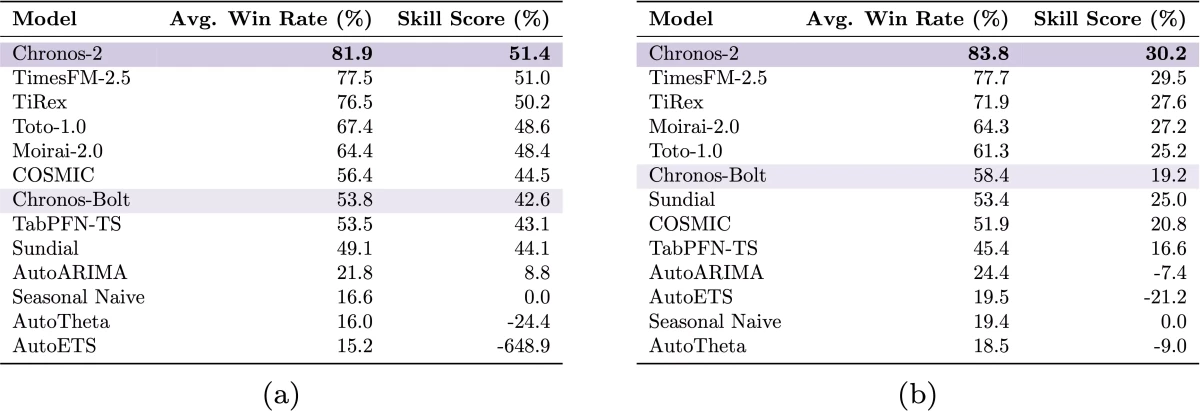

Empirical evaluation confirms that Chronos-2 represents a substantial advance in the capabilities of TSFMs. On the comprehensive time series benchmark fev-bench, which spans a wide range of forecasting tasks (univariate, multivariate, and covariate-informed), Chronos-2 outperforms existing TSFMs by a large margin. We see the largest gains on covariate-informed tasks, demonstrating Chronos-2’s strength in this practically important setting. On the GIFT-Eval benchmark, Chronos-2 ranks first among pretrained models. Chronos-2 also significantly outperforms its predecessor, Chronos-Bolt, achieving a win rate of over 90% in head-to-head comparisons.
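A head-to-head win rate is the fraction of tasks on which one model's error is lower than the other's. The sketch below assumes ties count as half a win, a common convention that may differ from the exact definition used in the benchmarks above. The error values in the example are made up for illustration and are not benchmark results.

```python
def win_rate(errors_a, errors_b):
    """Fraction of tasks on which model A has lower error than model B.

    Ties count as half a win (an assumed convention).
    """
    if len(errors_a) != len(errors_b) or len(errors_a) == 0:
        raise ValueError("need two non-empty error lists of equal length")
    score = 0.0
    for a, b in zip(errors_a, errors_b):
        if a < b:
            score += 1.0
        elif a == b:
            score += 0.5
    return score / len(errors_a)


# Illustrative per-task errors (lower is better) for two hypothetical models.
model_a = [0.8, 0.5, 1.2, 0.9]
model_b = [1.0, 0.6, 1.1, 0.9]
rate = win_rate(model_a, model_b)  # 2 wins + 1 tie out of 4 tasks -> 0.625
```

A win rate above 90% therefore means the newer model has lower error on more than nine in ten tasks, regardless of how large each individual improvement is.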

The ICL capabilities of Chronos-2 position it as a viable general-purpose forecasting model that can be used “as is” in production pipelines, significantly simplifying them. Chronos-2 is now available open source (links below). We invite researchers and practitioners to engage with Chronos-2 and join the research frontier on time series foundation models.

Learn more:

- Chronos-2 model card
- Deploy Chronos-2 on Amazon SageMaker
- Chronos-2 technical report
- Chronos GitHub Repository