Customer-obsessed science

Research areas

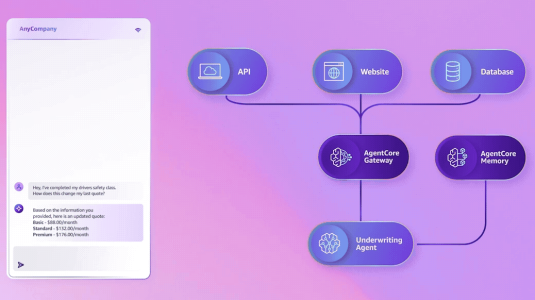

October 16, 2025: Amazon vice president and distinguished engineer Marc Brooker explains how agentic systems work under the hood and how AWS’s new AgentCore framework implements their essential components.

Featured news

2025: Large language models (LLMs) have recently revolutionized natural language processing. These models, however, often suffer from instability or a lack of coherence, that is, the ability of the models to generate semantically equivalent outputs when receiving diverse yet semantically equivalent input variations. In this work, we analyze the behavior of multiple LLMs, including Mixtral-8x7B, Llama2-70b, Smaug

2025: When aligning large language models (LLMs), their performance on various tasks (such as being helpful, harmless, and honest) depends heavily on the composition of their training data. However, selecting a data mixture that achieves strong performance across all tasks is challenging. Existing approaches rely on large ablation studies, heuristics, or human intuition, but these can be prohibitively expensive

ECAI 2025 Workshop on Trustworthy AI, 2025: Text classification has become increasingly important with the exponential growth of digital text data, finding applications in sentiment analysis, spam detection, topic categorization, and content moderation across various domains. Our research introduced a novel approach that integrates reinforcement learning with a specialized reasoning path. This methodology enabled smaller 7B parameter language models

VLDB 2025, 2025: NL2SQL (natural language to SQL) translates natural language questions into SQL queries, making structured data accessible to non-technical users and serving as the foundation for intelligent data applications. State-of-the-art NL2SQL techniques typically perform translation by retrieving database-specific information, such as the database schema, and invoking a pre-trained large language model (LLM

2025: Existing Reward Models (RMs), typically trained on general preference data, struggle in Retrieval Augmented Generation (RAG) settings, which require judging responses for faithfulness to retrieved context, relevance to the user query, appropriate refusals when context is insufficient, and completeness and conciseness of information. To address the lack of publicly available RAG-centric preference datasets and

Conferences

Collaborations

Whether you're a faculty member or student, there are a number of ways you can engage with Amazon.