At Amazon, we're constantly working to improve our logistics operations through cutting-edge AI and computer vision. Today, we're excited to announce the public release of Kaputt, a large-scale dataset for visual defect detection in retail logistics. This dataset, which will be presented at the International Conference on Computer Vision (ICCV) 2025, represents a major step forward in our efforts to automate defect detection.

The Kaputt dataset contains 238,421 high-resolution images of 48,376 unique items, including 29,316 defective instances, making it 40 times as large as current state-of-the-art benchmark datasets. It captures the real-world complexities of detecting defects and damage across a vast range of products — minor creases, major spills, and everything in between.

The challenge of automated defect detection

Developing robust visual defect detection systems for retail logistics presents significant challenges that prior research hasn't fully addressed. Existing benchmarks focus mostly on manufacturing and have reached saturation: state-of-the-art methods achieve near-perfect performance on them, with more than 99.9% AUROC (area under the receiver-operating-characteristic curve, which summarizes the trade-off between true-positive and false-positive rates across all decision thresholds). Unlike manufacturing settings, which typically involve highly standardized item poses and a restricted number of distinct items, retail logistics handles millions of unique products, most of which have been seen only a handful of times. Without adequate data, it’s extremely difficult for AI systems to learn what constitutes “normal” versus “defective” across such diverse items.
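To make the metric concrete, here is a minimal Python sketch of AUROC computed through its pairwise interpretation: the probability that a randomly chosen defective item receives a higher anomaly score than a randomly chosen normal item, with ties counted as half. This is an illustrative implementation, not the evaluation code used for Kaputt; the function name `auroc` is our own, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
from typing import Sequence


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUROC via pairwise comparison of defective vs. normal scores.

    scores: anomaly scores (higher means "more likely defective").
    labels: 1 for defective, 0 for normal.
    A tie between a defective and a normal score counts as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one defective and one normal sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # defective item correctly ranked above normal item
            elif p == n:
                wins += 0.5   # tie: counted as half
    return wins / (len(pos) * len(neg))


print(auroc([0.9, 0.8, 0.3, 0.2], [1, 0, 1, 0]))  # 0.75
print(auroc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0]))  # 1.0: perfect separation
print(auroc([0.5, 0.5, 0.5, 0.5], [1, 0, 1, 0]))  # 0.5: uninformative scores
```

A model that gives every item the same score lands at 0.5, which is why an AUROC near 50% signals a detector that is no better than chance. For large evaluation sets, a sort-based implementation such as scikit-learn's `roc_auc_score` is the practical choice.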

A novel dataset for real-world applications

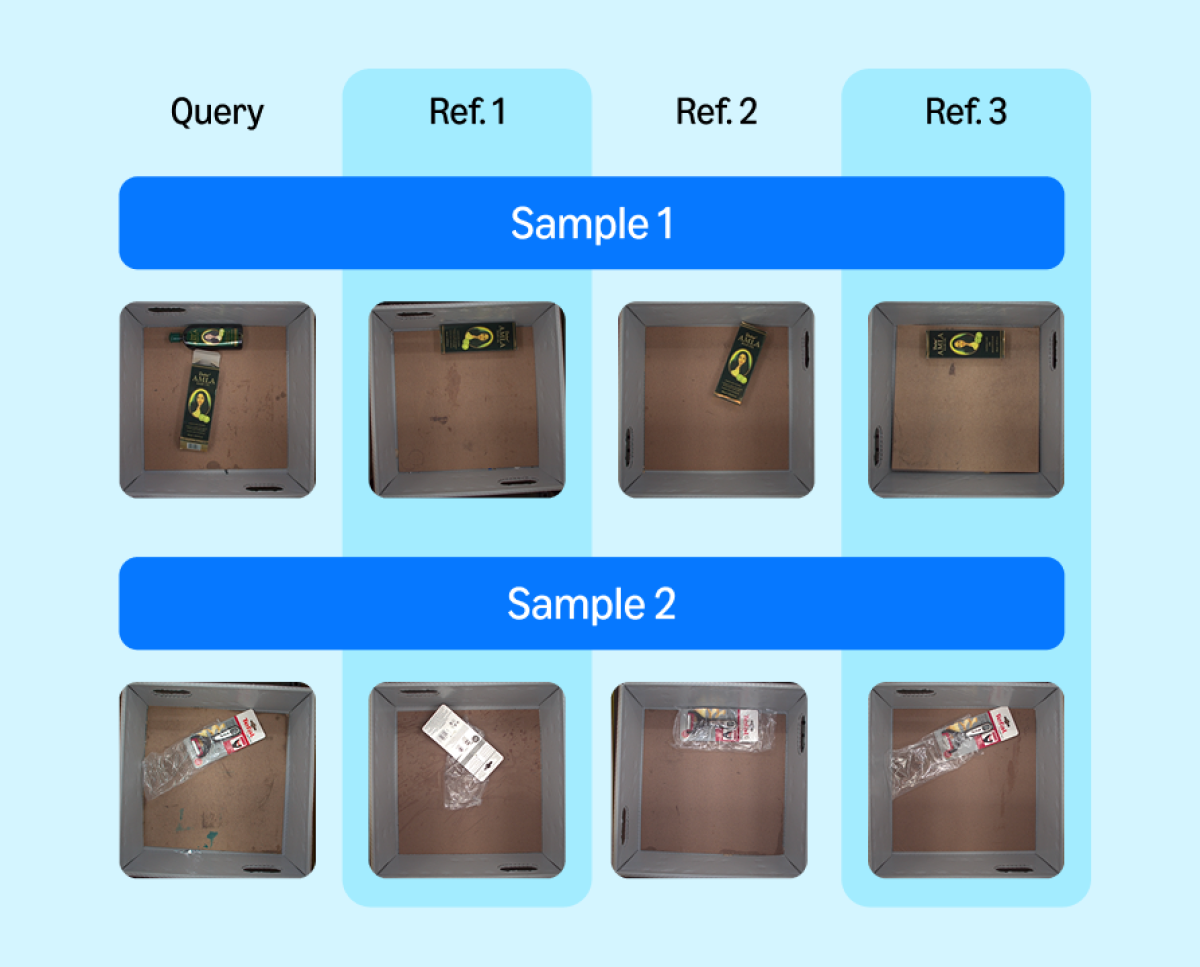

Our dataset's structure reflects these real-world challenges and opportunities. For each query image, we provide up to three reference images showing the item in “normal” condition (meaning more than 99% likely to be defect free, though not guaranteed to be), mirroring how human inspectors might compare items to decide whether one is defective. We've also included detailed annotations for seven distinct types of defects and their severity levels, acknowledging the subjective nature of defect assessment.
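The post does not describe the file format of the release, so the following is only a hypothetical sketch of how one query and its references might be represented in Python. The class and field names (`KaputtSample`, `DefectAnnotation`, `defect_type`, `severity`) and the file names are assumptions for illustration; only the constraints (up to three reference images per query, annotated defect types with severity levels) come from the description above.

```python
from dataclasses import dataclass, field
from typing import List

# From the description: each query comes with up to three reference images.
MAX_REFERENCES = 3


@dataclass
class DefectAnnotation:
    # Hypothetical fields: the dataset annotates seven defect types and their
    # severity, but the exact label names and severity scale are assumptions.
    defect_type: str
    severity: str


@dataclass
class KaputtSample:
    # Hypothetical container for one query image and its reference images.
    query_image: str
    reference_images: List[str]
    annotations: List[DefectAnnotation] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Enforce the "up to three references" constraint at construction time.
        if len(self.reference_images) > MAX_REFERENCES:
            raise ValueError(
                f"expected at most {MAX_REFERENCES} reference images, "
                f"got {len(self.reference_images)}"
            )

    @property
    def is_defective(self) -> bool:
        # Assumption: a query counts as defective if it has any defect annotation.
        return bool(self.annotations)


sample = KaputtSample(
    query_image="query_0001.jpg",
    reference_images=["ref_0001_a.jpg", "ref_0001_b.jpg"],
    annotations=[DefectAnnotation(defect_type="crease", severity="minor")],
)
print(sample.is_defective)  # True
```

Keeping the references in the same record as the query mirrors the side-by-side comparison a human inspector performs, and makes reference-based methods straightforward to feed.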

Understanding model performance

Our comprehensive evaluation of multiple leading methodologies reveals both the complexity of the task and current technological limitations. We tested four distinct approaches: zero-shot methods using general-purpose vision models, few-shot approaches leveraging reference images, supervised learning, and hybrid methods combining multiple techniques.
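As an illustration of the few-shot idea, the sketch below scores a query by its cosine distance to the nearest reference embedding: a query that closely resembles at least one normal reference gets a low anomaly score. This is a generic nearest-neighbor baseline, not one of the specific methods evaluated in the paper, and it assumes image embeddings have already been extracted by some pretrained vision model; the function names are hypothetical.

```python
import math
from typing import Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 minus the cosine similarity of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cannot compare a zero vector")
    return 1.0 - dot / (norm_a * norm_b)


def reference_anomaly_score(
    query: Sequence[float], references: Sequence[Sequence[float]]
) -> float:
    """Anomaly score = distance from the query to its closest reference.

    Taking the minimum means the query only has to match one of the
    (up to three) normal references, which tolerates some pose variation.
    """
    if not references:
        raise ValueError("need at least one reference embedding")
    return min(cosine_distance(query, ref) for ref in references)


# Toy 3-dimensional embeddings standing in for real model features.
refs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(reference_anomaly_score([1.0, 0.0, 0.0], refs))  # 0.0: matches a reference
print(reference_anomaly_score([0.0, 0.0, 1.0], refs))  # 1.0: unlike every reference
```

One plausible limitation of this design: a small but important difference, such as a missing unit, may barely move a whole-image embedding, so the score can stay low even when the item is defective.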

The results are striking: while supervised models with access to the full dataset achieve 94.27% AUROC on defect detection, their performance drops to 74.4% in more realistic scenarios where only a limited number of defective samples is available for training. State-of-the-art zero-shot methods perform even worse: none exceeds 56.96% AUROC, barely above the 50% expected from random guessing.

Through qualitative analysis, we identified several key challenges for these methods. Models struggle with subtle anomalies, rare defect types, and reference-dependent defects such as missing units, which can be recognized only by comparing the query with its reference images. They also often misclassify deformable items and items whose designs resemble damage. Vision-language models can detect obvious defects but miss subtle defects in deformable items and minor anomalies such as stickers and dirt.

Overall, these results stand in sharp contrast to the near-perfect performance that state-of-the-art defect detection methods achieve in manufacturing settings, highlighting the unique challenges of retail logistics addressed by our dataset.

Impact beyond retail operations

The impact of improving visual defect detection extends far beyond operational efficiency. Early detection of defective items helps reduce waste, labor, and resource consumption by preventing defective products from moving further through the supply chain, ultimately supporting sustainability goals. It also helps ensure that customers receive their orders in perfect condition, reducing returns and reshipments — which in turn reduces carbon emissions from transportation.

But the potential applications extend beyond retail. The challenges this dataset addresses (handling diverse objects, dealing with limited data per instance, and managing significant pose variations) are also relevant to vehicle damage assessment, infrastructure inspection, and even medical imaging. By sharing this dataset, we hope to accelerate progress across these domains.

The Kaputt dataset is now available for download. We encourage computer vision researchers to leverage this resource in developing novel approaches to this challenging problem. We look forward to engaging with the research community and seeing the innovative solutions that emerge from this work.