Agents are the trendiest topic in AI today, and with good reason. AI agents act on their users’ behalf, autonomously doing things like making online purchases, building software, researching business trends, or booking travel. By taking generative AI out of the sandbox of the chat interface and allowing it to act directly on the world, agentic AI represents a leap forward in the power and utility of AI.

Agentic AI is moving fast: one of the core building blocks of today’s agents, the Model Context Protocol (MCP), is only a year old! As in any fast-moving field, there are many competing definitions, hot takes, and misleading opinions.

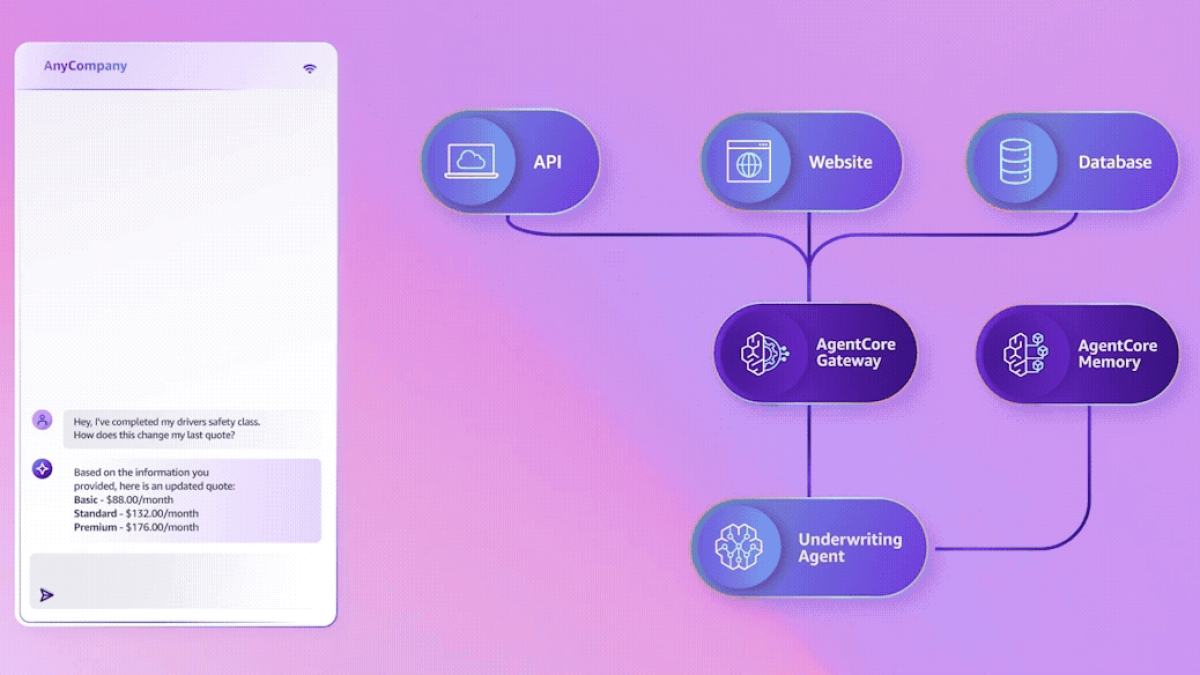

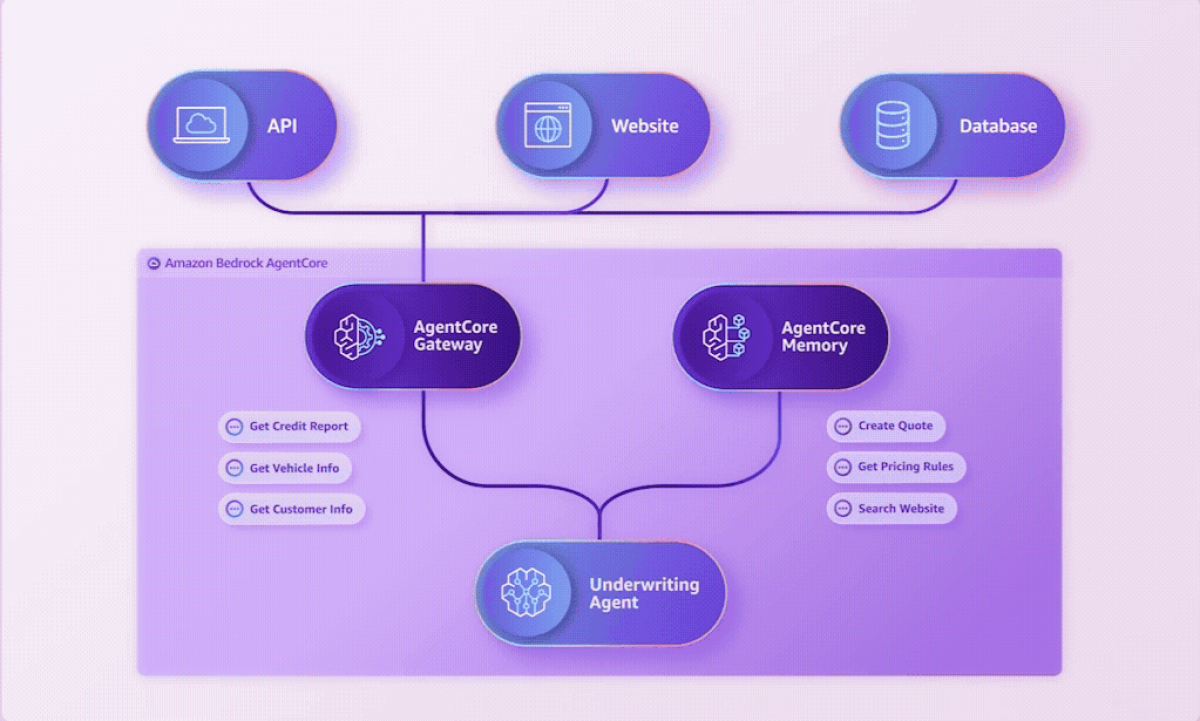

To cut through the noise, I’d like to describe the core components of an agentic AI system and how they fit together. Hopefully, when you’ve finished reading this post, agents won’t seem as mysterious. You’ll also understand why we made the choices we did in designing Amazon Web Services’ Bedrock AgentCore, a set of services and tools that lets customers quickly and easily design and build their own agentic AI systems.

The agentic ecosystem

Definitions of the word “agent” abound, but I like a slight variation on the British programmer Simon Willison’s minimalist take: An agent runs models and tools in a loop to achieve a goal.

The user prompts an AI model (typically a large language model, or LLM) with the goal to be attained — say, booking a table at a restaurant near the theater where a movie is playing. Along with the goal, the model receives a list of the tools available to it, such as a database of restaurant locations or a record of the user’s food preferences. The model then plans how to achieve the goal and takes a first step by calling one of the tools. The tool provides a response, and based on that, the model calls a new tool. Through repetitions of this process, the agent ratchets toward accomplishment of the goal. In some cases, the model’s orchestration and planning choices are complemented or enhanced by combining them with imperative code.
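To make the loop concrete, here is a minimal sketch in plain Python. It is not how Strands or AgentCore implements agents: `fake_model` is a hand-written stand-in for an LLM call, and the two tools, their names, and their return values are hypothetical.

```python
from typing import Callable

# Hypothetical tools: plain functions standing in for real services.
def find_restaurants(near: str) -> list[str]:
    return ["Luigi's Pizza", "Taj Indian"]

def book_table(restaurant: str) -> str:
    return f"Booked a table at {restaurant}"

TOOLS: dict[str, Callable[..., object]] = {
    "find_restaurants": find_restaurants,
    "book_table": book_table,
}

def fake_model(goal: str, history: list[dict]) -> dict:
    """Stand-in for an LLM call: pick the next step from the history so far."""
    if not history:
        return {"tool": "find_restaurants", "args": {"near": "the movie theater"}}
    if history[-1]["tool"] == "find_restaurants":
        first_choice = history[-1]["result"][0]
        return {"tool": "book_table", "args": {"restaurant": first_choice}}
    # The last tool call was the booking, so the goal has been reached.
    return {"final": history[-1]["result"]}

def run_agent(goal: str, max_steps: int = 5) -> str:
    """Run the model and the tools in a loop until the model declares it is done."""
    history: list[dict] = []
    for _ in range(max_steps):
        decision = fake_model(goal, history)
        if "final" in decision:
            return decision["final"]
        result = TOOLS[decision["tool"]](**decision["args"])
        history.append({"tool": decision["tool"], "result": result})
    raise RuntimeError("Goal not reached within the step budget")

print(run_agent("Book a table near the theater"))
```

The `max_steps` budget is a design choice of this sketch: a loop driven by a model needs some guard against never terminating.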

That seems simple enough. But what kind of infrastructure does it take to realize this approach? An agentic system needs a few core components:

- A way to build the agent. When you deploy an agent, you don’t want to have to code it from scratch. There are several agent development frameworks out there, but I’m partial to Amazon Web Services’ own Strands Agents.

- Somewhere to run the AI model. A seasoned AI developer can download an open-weight LLM, but it takes expertise to do that right. It also takes expensive hardware that the average user will leave idle most of the time.

- Somewhere to run the agentic code. With frameworks like Strands, the user creates code for an agent object with a defined set of functions. Most of those functions involve sending prompts to an AI model, but the code needs to run somewhere. In practice, most agents will run in the cloud, because we want them to keep running when our laptops are closed, and we want them to scale up and out to do their work.

- A mechanism for translating between the text-based LLM and tool calls.

- A short-term memory for tracking the content of agentic interactions.

- A long-term memory for tracking the user’s preferences and affinities across sessions.

- A way to trace the system’s execution, to evaluate the agent’s performance.

In what follows I’ll go into more detail about each of these components and explain how AgentCore implements them.

Building an agent

It’s well known that asking an LLM to explain how it plans to approach a task improves its performance on that task. Such “chain-of-thought reasoning” is now ubiquitous in AI.

The analogue in agentic systems is the ReAct (reasoning + action) model, in which the agent has a thought (“I’ll use the map function to locate nearby restaurants”), performs an action (issuing an API call to the map function), and then makes an observation (“There are two pizza places and one Indian restaurant within two blocks of the movie theater”).

ReAct isn’t the only way to build agents, but it is at the core of most successful agentic systems. Today, agents are commonly loops over the thought-action-observation sequence.
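One step of that thought-action-observation sequence might look like the sketch below. The `Thought:` / `Action: tool[input]` text format is an assumption borrowed from common ReAct-style prompts, and `map_search` is a hypothetical tool with a canned answer; real frameworks usually rely on structured tool calls rather than regular expressions.

```python
import re

# Assumed text format for the model's output: "Thought: ..." then "Action: tool[input]".
THOUGHT = re.compile(r"^Thought:\s*(.+)$", re.MULTILINE)
ACTION = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)

def parse_react_step(model_output: str) -> dict:
    """Extract the thought and the requested tool call from the model's text."""
    thought = THOUGHT.search(model_output)
    action = ACTION.search(model_output)
    if action is None:
        raise ValueError("No action found in model output")
    return {
        "thought": thought.group(1).strip() if thought else "",
        "tool": action.group(1),
        "tool_input": action.group(2),
    }

def map_search(query: str) -> str:
    # Hypothetical tool: a canned answer instead of a real API call.
    return ("There are two pizza places and one Indian restaurant "
            "within two blocks of the movie theater")

TOOLS = {"map_search": map_search}

def react_step(model_output: str) -> str:
    """Run the requested action and append the observation for the next model call."""
    step = parse_react_step(model_output)
    observation = TOOLS[step["tool"]](step["tool_input"])
    return f"{model_output}\nObservation: {observation}"

output = ("Thought: I'll use the map function to locate nearby restaurants\n"
          "Action: map_search[restaurants near the movie theater]")
print(react_step(output))
```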

The tools available to the agent can include local tools and remote tools such as databases, microservices, and software as a service. A tool’s specification includes a natural-language explanation of how and when it’s used and the syntax of its API calls.
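As an illustration, a tool specification can be as small as a name, a natural-language description, and a schema for the arguments. The sketch below uses a JSON Schema-style dictionary; the tool name, the field names, and the `missing_arguments` helper are all hypothetical and not taken from any particular framework.

```python
# Hypothetical specification for a restaurant search tool.
restaurant_search_spec = {
    "name": "restaurant_search",
    # Natural-language guidance the model reads when deciding whether to call the tool.
    "description": (
        "Find restaurants near a location. "
        "Use this when the user wants to eat near a given place."
    ),
    # The call syntax, expressed as a JSON Schema-style description of the arguments.
    "input_schema": {
        "type": "object",
        "properties": {
            "location": {"type": "string"},
            "radius_miles": {"type": "number"},
        },
        "required": ["location"],
    },
}

def missing_arguments(spec: dict, arguments: dict) -> list[str]:
    """Return the required argument names that a proposed call leaves out."""
    return [name for name in spec["input_schema"]["required"] if name not in arguments]

print(missing_arguments(restaurant_search_spec, {"radius_miles": 1.0}))
```

Checking a model-proposed call against the schema before executing it is a cheap way to catch malformed tool calls early.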

The developer can also tell the agent to, essentially, build its own tools on the fly. Say that a tool retrieves a table stored as comma-separated text, and to fulfill its goal, the agent needs to sort the table.

Sorting a table by repeatedly sending it through an LLM and evaluating the results would be a colossal waste of resources — and it’s not even guaranteed to give the right result. Instead, the developer can simply instruct the agent to generate its own Python code when it encounters a simple but repetitive task. These snippets of code can run locally alongside the agent or in a dedicated secure code interpreter tool like AgentCore’s Code Interpreter.
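For the table-sorting case, the generated code could be as short as the following sketch. The function name, the column names, and the assumption that the sort column is numeric are all illustrative; the snippet uses only Python’s standard `csv` module.

```python
import csv
import io

def sort_csv(text: str, column: str, descending: bool = False) -> str:
    """Sort comma-separated text by one numeric column, keeping the header row."""
    reader = csv.DictReader(io.StringIO(text))
    # Assumes every value in `column` parses as a number.
    rows = sorted(reader, key=lambda row: float(row[column]), reverse=descending)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

table = "name,rating\nLuigi's,4.2\nTaj,4.7\nBurger Hut,3.9\n"
print(sort_csv(table, "rating", descending=True))
```

Unlike a pass through the LLM, this runs in a fraction of a second and gives the same answer every time.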

One of the things I like about Strands is the flexibility it offers in dividing responsibility between the LLM and the developer. Once the tools available to the agent have been specified, the developer can simply tell the agent to use the appropriate tool when necessary. Or the developer can specify which tool to use for which types of data and even which data items to use as arguments during which function calls.

Similarly, the developer can simply tell the agent to generate Python code when necessary to automate repetitive tasks or, alternatively, tell it which algorithms to use for which data types and even provide pseudocode. The approach can vary from agent to agent.

Strands is open source and can be used by developers deploying agents in any context; conversely, AgentCore customers can build their agents using any development tools they choose.

Runtime

Historically, there were two main ways to isolate code running on shared servers: containerization, which was efficient but offered lower security, and virtual machines (VMs), which were secure but came with a lot of computational overhead.

In 2018, Amazon Web Services’ (AWS’s) Lambda serverless-computing service deployed Firecracker, a new paradigm in server isolation that offered the best of both worlds. Firecracker creates “microVMs”, complete with hardware isolation and their own Linux kernels but with reduced overhead (as low as a few megabytes) and startup times (as low as a few milliseconds). The low overhead means that each function executed on a Lambda server can have its own microVM.

However, because instantiating an agent requires deploying an LLM, together with the memory resources to track the LLM’s inputs and outputs, the per-function isolation model is impractical. So AgentCore uses session-based isolation, where every session with an agent is assigned its own Firecracker microVM. When the session finishes, the LLM’s state information is copied to long-term memory, and the microVM is destroyed. This ensures secure and efficient deployment of hosts of agents across AWS servers.
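The session lifecycle described above can be pictured with a toy model. This sketch has nothing to do with how Firecracker or AgentCore is actually implemented: an in-memory dictionary stands in for each microVM, and the `SessionManager` class and its method names are hypothetical.

```python
import uuid

class SessionManager:
    """Toy model of session-based isolation: one private sandbox per session."""

    def __init__(self) -> None:
        self.active: dict[str, dict] = {}        # session id -> sandbox (stand-in for a microVM)
        self.long_term: dict[str, list] = {}     # user id -> state kept across sessions

    def start(self, user_id: str) -> str:
        session_id = str(uuid.uuid4())
        # Each session gets its own state; nothing is shared between sandboxes.
        self.active[session_id] = {"user_id": user_id, "state": []}
        return session_id

    def record(self, session_id: str, event: str) -> None:
        self.active[session_id]["state"].append(event)

    def finish(self, session_id: str) -> None:
        # Copy the session's state to long-term storage, then discard the sandbox.
        sandbox = self.active.pop(session_id)
        self.long_term.setdefault(sandbox["user_id"], []).extend(sandbox["state"])

manager = SessionManager()
session = manager.start("alice")
manager.record(session, "likes Indian food")
manager.finish(session)
print(manager.long_term)
```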

Tool calls

Just as there are several existing development frameworks for agent creation, there are several existing standards for communication between agents and tools, the most popular of which is MCP. MCP establishes a standard format for passing data between the LLM and the servers that host tools, and a way for those servers to describe to the agent what tools and data they have available.

In AgentCore, tool calls are handled by the AgentCore Gateway service. Gateway uses MCP by default, but like most of the other AgentCore components, it’s configurable, and it will support a growing set of protocols over time.

Sometimes, however, the necessary tool is one without a public API. In such cases, the only way to retrieve data or perform an action is by pointing and clicking on a website. There are a number of services available to perform such computer use, including Amazon’s own Nova Act, which can be used with AgentCore’s secure Browser tool. Computer use makes any website a potential tool for agents, opening up decades of content and valuable services that aren’t yet available directly through APIs.

As I mentioned earlier, code generated by the agent can be executed by AgentCore Code Interpreter. There, too, Gateway manages the translation between the LLM’s output and Code Interpreter’s input specs.

Memory

Short-term memory

LLMs are next-word prediction engines. What makes them so astoundingly versatile is that their predictions are based on long sequences of words they’ve already seen, known as context. Context is, in itself, a kind of memory. But it’s not the only kind an agentic system needs.

Suppose, again, that an agent is trying to book a restaurant near a movie theater, and from a map tool, it has retrieved a couple of dozen restaurants within a one-mile radius. It doesn’t want to dump information about all those restaurants into the LLM’s context: that could wreak havoc with next-word probabilities.

Instead, it can store the complete list in short-term memory and retrieve one or two records at a time, based on, say, the user’s price and cuisine preferences and proximity to the theater. If none of those restaurants pans out, the agent can dip back into short-term memory, rather than having to execute another tool call.
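A minimal sketch of that pattern, assuming a hypothetical `Restaurant` record and an in-process store (AgentCore’s actual memory service is not modeled here):

```python
from dataclasses import dataclass

@dataclass
class Restaurant:
    name: str
    cuisine: str
    price_level: int           # 1 = cheap, 4 = expensive
    miles_from_theater: float

class ShortTermMemory:
    """Holds the full tool result and hands the model a few records at a time."""

    def __init__(self) -> None:
        self._records: list[Restaurant] = []
        self._already_offered: set[str] = set()

    def store(self, records: list[Restaurant]) -> None:
        self._records = list(records)

    def next_candidates(self, cuisine: str, max_price: int, limit: int = 2) -> list[Restaurant]:
        """Return the closest matching restaurants that have not been offered yet."""
        matches = [
            r for r in self._records
            if r.cuisine == cuisine
            and r.price_level <= max_price
            and r.name not in self._already_offered
        ]
        matches.sort(key=lambda r: r.miles_from_theater)
        chosen = matches[:limit]
        self._already_offered.update(r.name for r in chosen)
        return chosen

memory = ShortTermMemory()
memory.store([
    Restaurant("Taj", "indian", 2, 0.3),
    Restaurant("Curry House", "indian", 3, 0.1),
    Restaurant("Luigi's", "pizza", 1, 0.2),
    Restaurant("Spice Route", "indian", 2, 0.8),
])
first_batch = memory.next_candidates("indian", max_price=3)
second_batch = memory.next_candidates("indian", max_price=3)  # no new tool call needed
```

Only the handful of records returned by `next_candidates` would be placed in the LLM’s context; the rest stay in memory until they are needed.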

Long-term memory

Agents also need to remember their prior interactions with their clients. If last week I told the restaurant booking agent what type of food I like, I don’t want to have to tell it again this week. The same goes for my price tolerance, the sort of ambiance I’m looking for, and so on.

Long-term memory allows the agent to look up what it needs to know about prior conversations with the user. Agents don’t typically create long-term memories themselves, however. Instead, after a session is complete, the whole conversation passes to a separate AI model, which creates new long-term memories or updates existing ones.

With AgentCore, memory creation can involve LLM summarization and “chunking”, in which documents are split into sections grouped according to topic for ease of retrieval during subsequent sessions. AgentCore lets the user select strategies and algorithms for summarization, chunking, and other information extraction techniques.
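To give a rough feel for topic-based chunking, the sketch below groups a finished conversation’s utterances by topic and merges them into a per-user store. Keyword matching stands in for the separate AI model that would do this in practice, and the topic names, keywords, and function names are all hypothetical; AgentCore’s actual strategies are not shown.

```python
from collections import defaultdict

# Hypothetical topics and trigger words; a real system would use a model here.
TOPIC_KEYWORDS = {
    "cuisine": ("food", "cuisine", "spicy", "vegetarian"),
    "price": ("price", "cheap", "expensive", "budget"),
}

def chunk_by_topic(utterances: list[str]) -> dict[str, list[str]]:
    """Group utterances into chunks keyed by the topic they mention."""
    chunks: dict[str, list[str]] = defaultdict(list)
    for text in utterances:
        lowered = text.lower()
        for topic, keywords in TOPIC_KEYWORDS.items():
            if any(keyword in lowered for keyword in keywords):
                chunks[topic].append(text)
    return dict(chunks)

def update_long_term_memory(memory: dict, user_id: str, utterances: list[str]) -> None:
    """After a session ends, create or extend the user's per-topic memories."""
    user_memory = memory.setdefault(user_id, {})
    for topic, texts in chunk_by_topic(utterances).items():
        user_memory.setdefault(topic, []).extend(texts)

long_term: dict = {}
session_transcript = [
    "I love spicy food.",
    "My budget is about $30 a person.",
    "The movie starts at 7.",
]
update_long_term_memory(long_term, "alice", session_transcript)
print(long_term)
```

In a later session, the agent could look up only the `cuisine` chunk rather than rereading the whole conversation.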

Observability

Agents are a new kind of software system, and they require new ways to think about observing, monitoring, and auditing their behavior. Some of the questions we ask will look familiar: whether the agents are running fast enough, how much they’re costing, how many tool calls they’re making, and whether users are happy. But new questions will arise, too, and we can’t necessarily predict what data we’ll need to answer them.

In AgentCore Observability, traces provide an end-to-end view of the execution of a session with an agent, breaking down step-by-step which actions were taken and why. For the agent builder, these traces are key to understanding how well agents are working — and providing the data to make them work better.
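As a rough illustration of what a trace contains, the sketch below records one span per step of a session, with a name, free-form attributes such as the reason for the step, and a duration. The `Trace` class is hypothetical and is not the AgentCore Observability API.

```python
import time
from contextlib import contextmanager

class Trace:
    """Collects a step-by-step record of one session with an agent."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id
        self.spans: list[dict] = []

    @contextmanager
    def span(self, name: str, **attributes):
        """Time one step and record what was done and why."""
        start = time.perf_counter()
        record = {"name": name, "attributes": attributes}
        try:
            yield record
        finally:
            # Spans are appended when they finish, so sequential steps stay in order.
            record["duration_s"] = time.perf_counter() - start
            self.spans.append(record)

trace = Trace("session-1")
with trace.span("model_call", reason="plan the next step"):
    pass  # the call to the model would go here
with trace.span("tool_call", tool="restaurant_search", reason="need nearby options"):
    pass  # the call to the tool would go here

for s in trace.spans:
    print(s["name"], s["attributes"], f"{s['duration_s']:.6f}s")
```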

I hope that this explanation has demystified agentic AI enough that you’re ready to try building your own agents. You can find all the tools you’ll need at the AgentCore website.