At Amazon, we always look to invent new technology for improving customer experience. One technology we have been working on at Alexa is on-device speech processing, which has multiple benefits: a reduction in latency, or the time it takes Alexa to respond to queries; lowered bandwidth consumption, which is important on portable devices; and increased availability in in-car units and other applications where Internet connectivity is intermittent. On-device processing also enables the fusion of the speech signal with other modalities, like vision, for features such as Alexa’s natural turn-taking.

In the last year, we’ve continued to build upon Alexa’s on-device speech-processing capabilities. As a result of these inventions, we are launching a new setting that gives customers the option of having the audio of their Alexa voice requests processed locally, without being sent to the cloud.

In the cloud, storage space and computational capacity are effectively unconstrained. To ensure accuracy, our cloud models can be large and computationally demanding. Executing the same functions on-device means compressing our models into less than 1% as much space — with minimal loss in accuracy.

Moreover, in the cloud, the separate components of Alexa’s speech-processing stack — automatic speech recognition (ASR), whisper detection, and speaker identification — run on separate server nodes with their own powerful processors. On-device, those functions have to share hardware not only with each other but with Alexa’s other core device functions, such as music playback.

Re-creating Alexa’s speech-processing stack on-device was a massive undertaking. New methods for training small-footprint ASR models were part of the solution, but so were innovations in system design and hardware-software codesign. It was a joint effort across science and engineering teams over a span of years. Here’s a quick overview of how it works.

System architecture

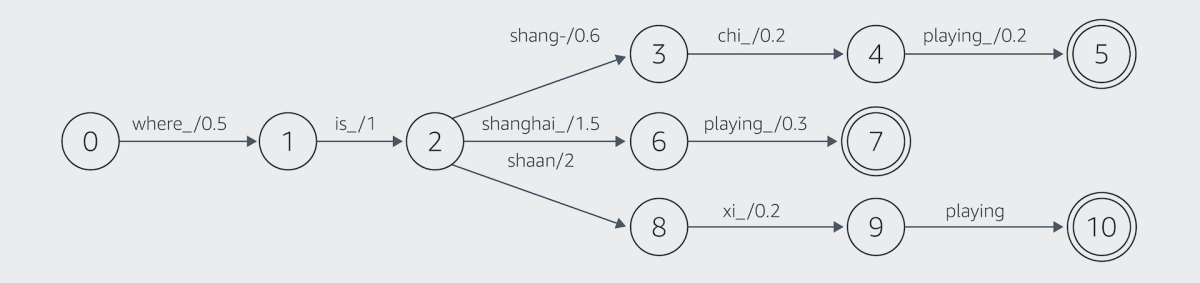

Our on-device ASR model takes in an acoustic speech signal and outputs a set of hypotheses about what the speaker said, ranked according to probability. We represent those hypotheses as a lattice — a graph whose edges represent recognized words and the probability that a given word follows from the previous one.
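To make the lattice idea concrete, here is a minimal sketch in Python. The node names, words, and probabilities are invented for illustration, and the dictionary layout is an assumption rather than the format Alexa uses. Each edge carries a word and the probability that it follows the previous one; a hypothesis is a path through the graph, and its score is the product of its edge probabilities.

```python
# Hypothetical toy lattice: node -> list of (next_node, word, probability).
# All words and probabilities are invented for illustration.
LATTICE = {
    "start": [("n1", "play", 0.9), ("n1", "pay", 0.1)],
    "n1": [("n2", "a", 0.8), ("n2", "the", 0.2)],
    "n2": [("end", "song", 0.7), ("end", "sound", 0.3)],
    "end": [],
}


def hypotheses(lattice, node="start", words=(), prob=1.0):
    """Yield (probability, sentence) for every path from `node` to a terminal node."""
    edges = lattice[node]
    if not edges:
        yield prob, " ".join(words)
        return
    for next_node, word, p in edges:
        yield from hypotheses(lattice, next_node, words + (word,), prob * p)


def ranked(lattice):
    """Return all hypotheses, most probable first."""
    return sorted(hypotheses(lattice), reverse=True)


best_prob, best_text = ranked(LATTICE)[0]
print(best_text, round(best_prob, 3))
```

Three positions with two alternatives each encode eight hypotheses in only six edges, which is why a lattice is a compact thing to send for reranking.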

With cloud-based ASR, encrypted audio streams to the cloud in small snippets called “frames”. With on-device ASR, only the lattice is sent to the cloud, where a large and powerful neural language model reranks the hypotheses. The lattice can’t be sent until the customer has finished speaking, as words later in a sequence can dramatically change the overall probability of a hypothesis.

The model that determines when the customer has finished speaking is called an end-pointer. End-pointers offer a natural trade-off between accuracy and latency: an aggressive end-pointer will initiate speech processing earlier, but it might cut the speaker off prematurely, resulting in a poor customer experience.

On the device, we in fact run two end-pointers. The first is a speculative end-pointer that we have tuned to be about 200 milliseconds faster than the final end-pointer, so we can initiate downstream processing, such as natural-language understanding (NLU), ahead of the final end-pointed ASR result. In exchange for that speed, however, we give up a little accuracy.

The final end-pointer takes longer to make a decision but is more accurate. In cases in which the first end-pointer cuts speech off too early, the final end-pointer sends a revised lattice and the instruction to reset downstream processing. In the large majority of cases, however, the aggressive end-pointer is correct, which reduces user-perceived latency, since downstream tasks are initiated earlier.
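The interplay of the two end-pointers can be sketched as follows. This is a deliberately simplified illustration: real end-pointers are learned models, whereas this sketch uses plain trailing-silence timers. The threshold values, the frame length, and the event names are assumptions; only the 200-millisecond gap comes from the text.

```python
# Hypothetical thresholds; only the 200 ms gap is taken from the article.
FINAL_SILENCE_MS = 700
SPECULATIVE_SILENCE_MS = FINAL_SILENCE_MS - 200
FRAME_MS = 100


def run_endpointers(frames):
    """frames: sequence of booleans, True when the frame contains speech.

    Returns a list of (time_ms, event) tuples.
    """
    events = []
    silence_ms = 0
    speculated = False
    for i, is_speech in enumerate(frames):
        now = (i + 1) * FRAME_MS
        if is_speech:
            if speculated:
                # The speaker resumed, so the speculative decision was premature.
                events.append((now, "reset"))
                speculated = False
            silence_ms = 0
            continue
        silence_ms += FRAME_MS
        if not speculated and silence_ms >= SPECULATIVE_SILENCE_MS:
            events.append((now, "speculative"))  # downstream work can start here
            speculated = True
        if silence_ms >= FINAL_SILENCE_MS:
            events.append((now, "final"))
            break
    return events


# Common case: the speculative decision is right and arrives 200 ms early.
clean = run_endpointers([True] * 3 + [False] * 7)

# Rare case: a mid-utterance pause fools the speculative end-pointer.
paused = run_endpointers([True] * 2 + [False] * 5 + [True] * 2 + [False] * 7)
```

In the first run, downstream processing gets a 200-millisecond head start. In the second, the early decision is withdrawn and processing restarts once the utterance really ends.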

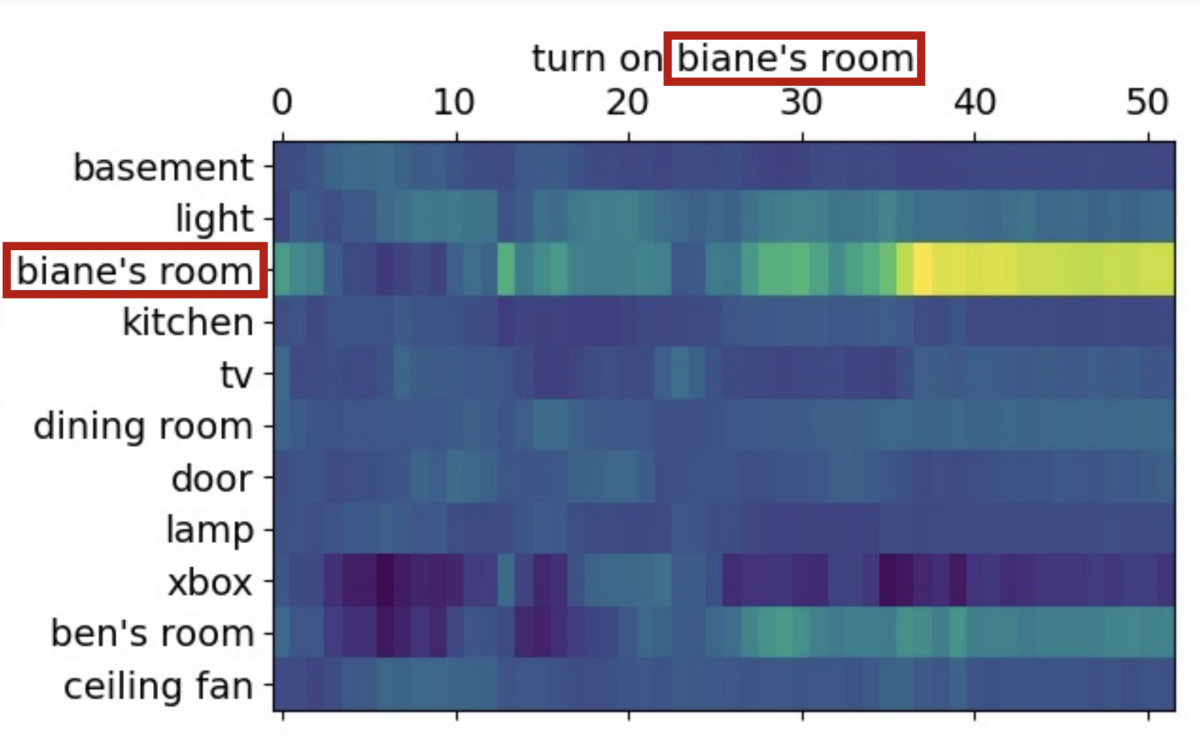

Another aspect of ASR that had to move on-device is context awareness. When computing the probabilities in a lattice, the ASR model should, for instance, give added weight to otherwise uncommon names that happen to be in the customer’s address book or the names the customer has assigned to household devices.

Context awareness can’t wait for the cloud because the lattice, though it encodes multiple hypotheses, doesn’t come close to encoding all possible hypotheses. When constructing the lattice, the ASR system has to prune a lot of low-probability hypotheses. If context awareness isn’t built into the on-device model, names of contacts or linked skills might end up getting pruned.

Initially, we use a so-called shallow-fusion model to add context and personalize content on-device. When the system is building the lattice, it boosts the probabilities of contextually relevant words such as contact or appliance names.
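A minimal sketch of why the boost has to happen before pruning, assuming a simple beam that keeps the top-scoring candidates. The candidate words, their probabilities, the contact list, and the size of the bonus are all invented for illustration; the fixed additive bonus in log space stands in for whatever heuristic the production system uses.

```python
import math


def prune(candidates, beam_size):
    """Keep the `beam_size` highest-scoring (word, log_prob) candidates."""
    return sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_size]


def boost(candidates, context_words, bonus):
    """Add a fixed bonus to the log-probability of contextually relevant words."""
    return [
        (word, (log_prob + bonus) if word in context_words else log_prob)
        for word, log_prob in candidates
    ]


# Invented scores for the word following "call".
candidates = [
    ("mom", math.log(0.50)),
    ("tom", math.log(0.30)),
    ("bob", math.log(0.15)),
    ("siobhan", math.log(0.05)),
]
contacts = {"siobhan"}  # hypothetical address-book entry

without_context = [w for w, _ in prune(candidates, beam_size=2)]
with_context = [w for w, _ in prune(boost(candidates, contacts, bonus=2.5), beam_size=2)]
print(without_context, with_context)
```

Without the boost, the uncommon contact name never reaches the lattice, so no amount of cloud-side reranking could recover it.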

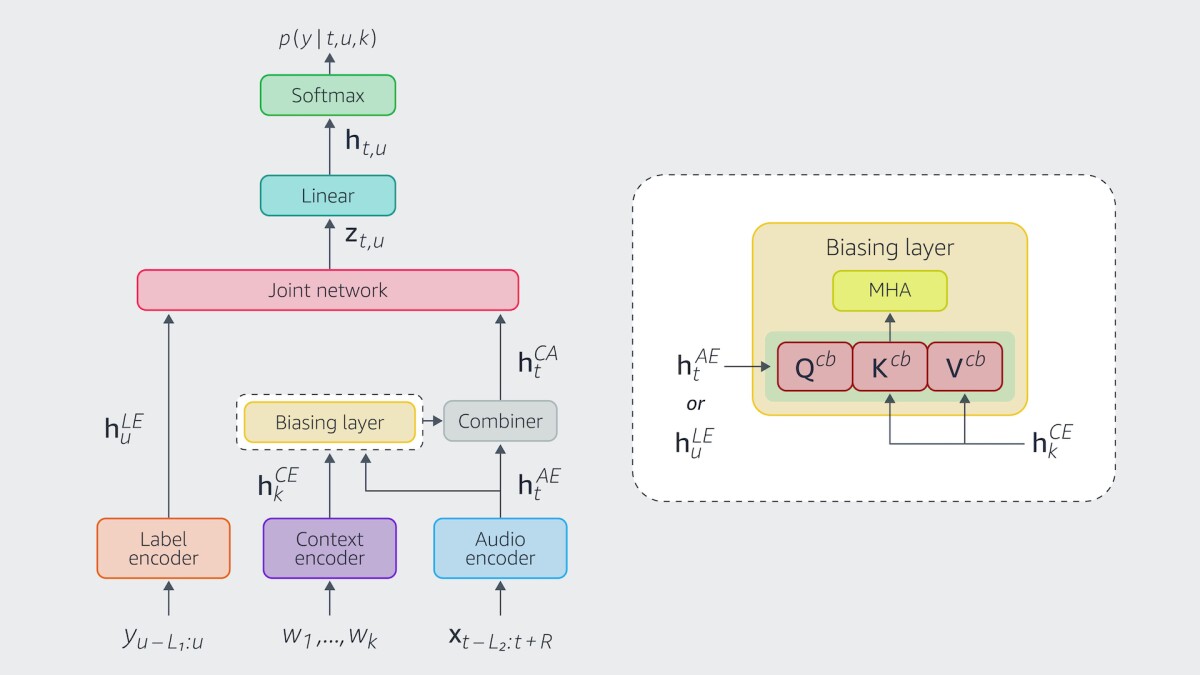

The probability boosts are heuristic, however — they’re not learned jointly with the core ASR model. To achieve even better accuracy on personalized and long-tail content, we have developed a multihead attention-based context-biasing mechanism that is jointly trained with the rest of the ASR subnetworks.

Model training

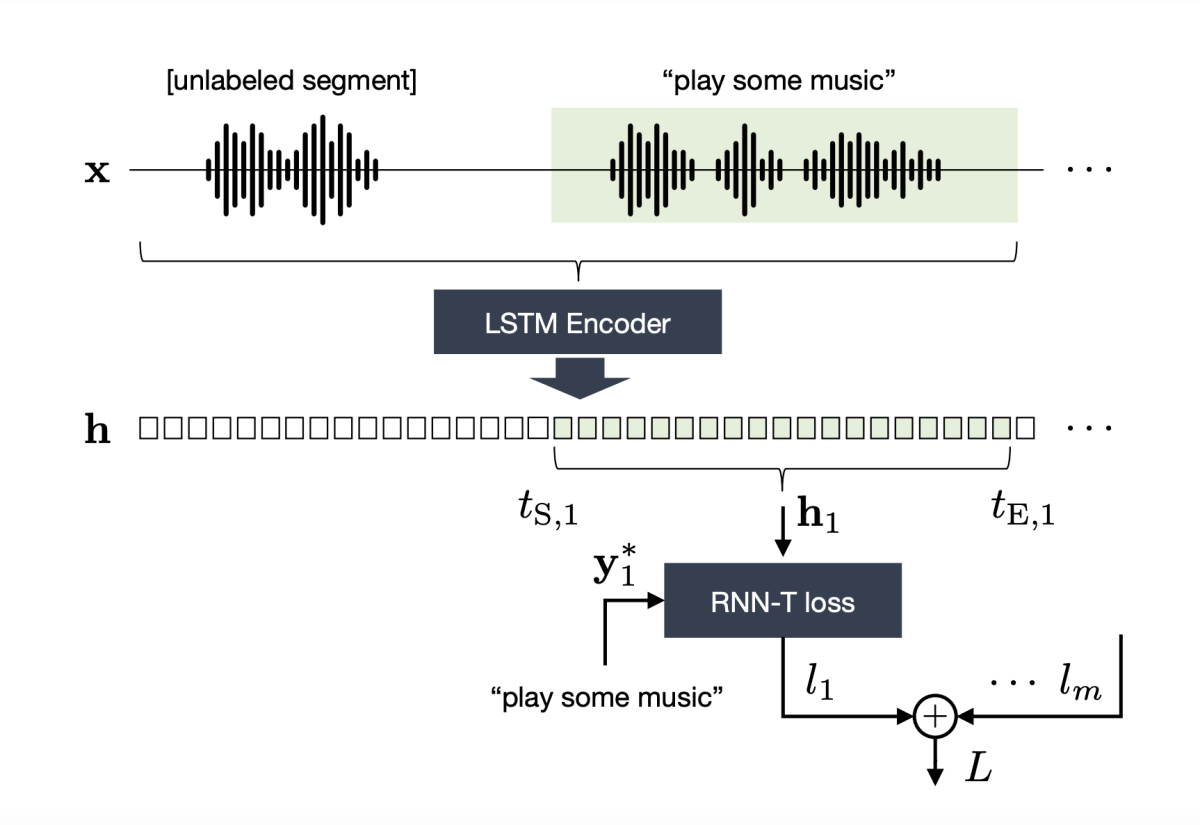

On-device ASR required us to build a new model from the ground up, an end-to-end recurrent neural network-transducer (RNN-T) model that directly maps the input speech signal to an output sequence of words. Using a single neural network results in a significantly reduced memory footprint. But we had to develop new techniques, both for inference and for training, to achieve the degree of accuracy and compression that would let this technology handle utterances on-device.

Previously on Amazon Science, we’ve discussed some of the techniques we used to increase the accuracy of small-footprint end-to-end ASR models. With teacher-student training, for instance, we teach a small, lean model to match the outputs of a more-powerful but slower model. We developed a training methodology that made it possible to do teacher-student training efficiently with a million hours of unannotated speech.
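The core of teacher-student training can be sketched with a generic distillation loss: the student is penalized by the Kullback-Leibler divergence between the teacher's output distribution and its own. This is the textbook formulation, shown here as an assumption about the general shape of the objective, not as the specific methodology referred to above. The logit values are invented.

```python
import math


def softmax(logits):
    """Convert raw scores to a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits):
    """KL(teacher || student) for a single output distribution."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]          # invented teacher scores for three tokens
poor_student = [1.0, 1.0, 1.0]     # uniform guess
good_student = [3.8, 1.1, 0.4]     # close to the teacher

print(distillation_loss(teacher, poor_student))
print(distillation_loss(teacher, good_student))
```

Because the targets come from the teacher rather than from human transcripts, a loss of this kind can be computed on unannotated audio.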

To further boost the accuracy of on-device RNN-T ASR, we developed techniques that allow the neural network to learn and exploit audio context within a stream. For example, for a stream comprising two utterances, “Alexa” and “Play a song”, the audio context from the keyword segment (“Alexa”) helps the model focus on the foreground speech and speaker. Separately, we implemented a novel discriminative-loss and training algorithm that aims at directly minimizing the word error rate (WER) of RNN-T ASR.

On top of these innovations, however, we still had to develop some new compression techniques to get the RNN-T to run efficiently on-device. A neural network consists of simple processing nodes, each of which is connected to several others. The connections between nodes have associated weights, which determine how much one node’s output contributes to the computation performed by the next node.

One way to shrink a neural network’s memory footprint is to quantize its weights — to divide the total range of weights into a small set of intervals and use a single value to represent all the weights in each interval. So, for instance, the weights 0.70, 0.76, and 0.79 might all get quantized to the single value 0.75. Specifying an interval requires fewer bits than specifying several different floating-point values.
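The example in the previous paragraph can be reproduced with a few lines of uniform quantization. The weight range of -1.0 to 1.0 and the 2-bit width are assumptions chosen so that one interval spans 0.5 to 1.0 and is represented by its midpoint, 0.75.

```python
def quantize(weight, lo=-1.0, hi=1.0, bits=2):
    """Map a weight to (interval index, representative value).

    The range [lo, hi) is split into 2**bits equal intervals, and every
    weight in an interval is represented by that interval's midpoint.
    """
    levels = 2 ** bits
    step = (hi - lo) / levels
    index = int((weight - lo) / step)
    index = max(0, min(levels - 1, index))  # clamp out-of-range weights
    return index, lo + (index + 0.5) * step


for w in (0.70, 0.76, 0.79):
    print(w, quantize(w))
```

Only the 2-bit index needs to be stored per weight; the representative values are recovered from the index and the shared range.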

If quantization is done after a network has been trained, performance can suffer. We developed a method of *quantization-aware* training that imposes a probability distribution on the network weights during training, so that they can be easily quantized with little effect on performance. Unlike previous quantization-aware training methods, which mostly take quantization into account in the forward pass, ours accounts for quantization in the backward direction, during weight updates, through network loss regularization. And it does that efficiently.
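As a rough illustration of how a regularizer can act on the weight updates, the sketch below adds a penalty on each weight's squared distance to its nearest quantization level. This simple penalty is a stand-in chosen for readability; it is not the regularizer described above, and the quantization grid, learning rate, and weights are all hypothetical.

```python
LEVELS = [-0.75, -0.25, 0.25, 0.75]  # hypothetical quantization grid


def nearest_level(w):
    """Return the grid value closest to w."""
    return min(LEVELS, key=lambda level: abs(w - level))


def quantization_penalty(weights):
    """Sum of squared distances from each weight to its nearest level."""
    return sum((w - nearest_level(w)) ** 2 for w in weights)


def regularized_step(weights, task_grads, lr=0.1, strength=1.0):
    """One gradient step on task_loss + strength * quantization_penalty."""
    return [
        w - lr * (g + strength * 2.0 * (w - nearest_level(w)))
        for w, g in zip(weights, task_grads)
    ]


weights = [0.70, 0.10, -0.60]
before = quantization_penalty(weights)
for _ in range(10):
    # Task gradients are set to zero here to isolate the regularizer's effect.
    weights = regularized_step(weights, task_grads=[0.0, 0.0, 0.0])
after = quantization_penalty(weights)
print(before, after)
```

In a real training run the task gradient and the penalty gradient compete, and the weights settle near grid values that still fit the data, so rounding them afterward costs little accuracy.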

Another way to make neural networks run more efficiently, which is also a vital concern on resource-constrained devices, is to set low-magnitude weights to zero. Computations involving zero weights can be discarded, reducing the computational burden.

But again, doing that reduction after the network is trained can compromise performance. We developed a *sparsification* method that enables the gradual reduction of low-value weights during training, so the network learns a model amenable to weight pruning.

Neural networks are typically trained on multiple passes through the same set of training data, or epochs. During each epoch, we force the network weights to diverge more and more, so that at the end of the final epoch, a fixed number of weights — say, half — are effectively zero. They can be safely discarded.
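The epoch-by-epoch schedule can be sketched with gradual magnitude pruning, a common technique used here as a stand-in for the method described above. The linear schedule, the four-epoch run, and the weight values are assumptions for illustration.

```python
def target_sparsity(epoch, total_epochs, final_sparsity=0.5):
    """Fraction of weights that should be zero after the given 1-based epoch."""
    return final_sparsity * epoch / total_epochs


def prune_smallest(weights, sparsity):
    """Zero the smallest-magnitude weights so that `sparsity` of them are zero."""
    n_zero = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    zeroed = set(order[:n_zero])
    return [0.0 if i in zeroed else w for i, w in enumerate(weights)]


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2, -0.02, 0.6]
for epoch in range(1, 5):
    # A real training loop would update the surviving weights here,
    # letting the network compensate for the ones just removed.
    weights = prune_smallest(weights, target_sparsity(epoch, total_epochs=4))

print(weights)
```

Raising the sparsity target a little each epoch, rather than all at once at the end, gives the remaining weights time to adapt.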

To improve on-device efficiency, we also developed a branching encoder network that uses two different neural networks to convert speech inputs into numeric representations suitable for speech classification. One network is complex, one simple, and the ASR model decides on the fly whether it can get away with passing an input frame to the simple model, saving computational cost and time. We described this work in more detail in an earlier Amazon Science blog post.

Hardware-software codesign

Quantization and sparsification make no difference to performance if the underlying hardware can’t take advantage of them. Another key to getting ASR to run on-device was the design of Amazon’s AZ family of neural edge processors, which are optimized for our specific approach to compression.

For one thing, where a typical processor might represent data using 16 or 32 bits, for certain core operations, the AZ processors accelerate computation by using an 8-bit or even lower-bit representation, because that’s all we need to handle quantized values.

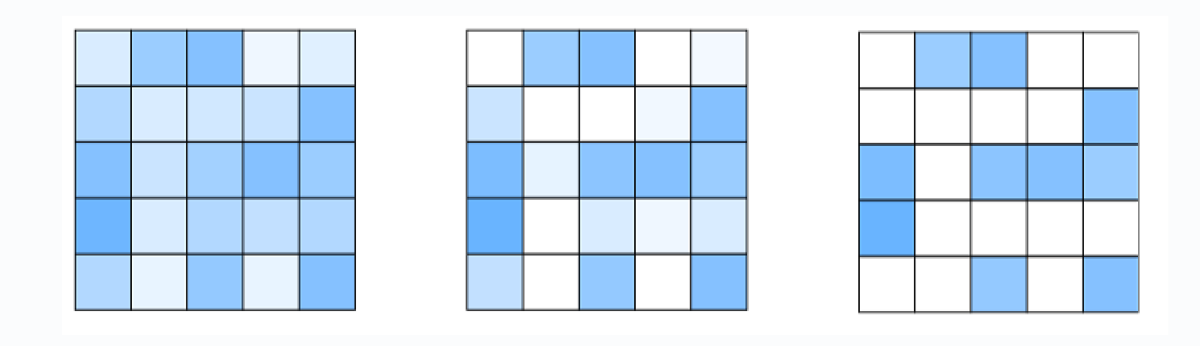

The weights of a neural network are typically represented using a matrix, which is a big grid of numbers. Stored naively, a matrix half of whose values are zeroes takes up as much space as one whose values are all nonzero.

On computer chips, transferring data tends to be much more time consuming than executing computations. So when we load our matrix into memory, we use a compression scheme that takes advantage of low-bit quantization and zero values. The circuitry for decoding the compressed representation is built into the chip.

In the neural processor’s memory, the matrix is reconstituted: the zeroes are filled back in. But the processor’s circuitry is designed to recognize zero values and discard computations involving them. So the time savings from sparsification are realized in the hardware itself.
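The three steps described above (compress for transfer, reconstitute in memory, skip zeroes during computation) can be mimicked in software. The bitmask-plus-values encoding is an assumption made for illustration, since the text does not specify the actual compression format, and the small integer weights stand in for low-bit quantized values.

```python
def compress(row):
    """Encode a row as a bitmask of nonzero positions plus the nonzero values."""
    mask = [1 if v != 0 else 0 for v in row]
    values = [v for v in row if v != 0]
    return mask, values


def decompress(mask, values):
    """Fill the zeroes back in to reconstitute the original row."""
    it = iter(values)
    return [next(it) if bit else 0 for bit in mask]


def sparse_dot(row, vector):
    """Dot product that skips every multiplication by a zero weight."""
    total, multiplies = 0, 0
    for w, x in zip(row, vector):
        if w == 0:
            continue
        total += w * x
        multiplies += 1
    return total, multiplies


row = [3, 0, 0, -2, 0, 5, 0, 0]        # invented quantized weights
vector = [1, 2, 3, 4, 5, 6, 7, 8]      # invented input activations

mask, values = compress(row)           # what would travel over the memory bus
restored = decompress(mask, values)    # what would sit in processor memory
result, multiplies = sparse_dot(restored, vector)
print(result, multiplies)
```

Here eight weights shrink to eight mask bits plus three values for transfer, and the dot product needs three multiplications instead of eight.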

Moving speech recognition on-device entails a number of innovations in other areas, such as reduction in the bandwidth required for model updates and compression of NLU models, to ensure basic functionality on devices with intermittent Internet connectivity. And we’re also hard at work on multilingual on-device ASR models for dynamic language switching, or automatically recognizing which of two languages a customer is speaking and responding in kind.

The launch of on-device speech processing is a huge step in bringing the benefits of “processing on the edge” to our customers, and we will continue to invent on their behalf in this area.