Foundation models (FMs) such as large language models and vision-language models are growing in popularity, but their energy inefficiency and computational cost remain an obstacle to broader deployment.

To address those challenges, we propose a new architecture that, in our experiments, reduced an FM’s inference time by 30% while maintaining its accuracy. Our architecture overcomes challenges in prior approaches to improving efficiency by maintaining both the model’s adaptability and its structural integrity.

With the traditional architecture, when an FM is presented with a new task, data passes through all of its processing nodes, or neurons — even if they’re irrelevant to the current task. Unfortunately, this all-hands-on-deck approach leads to high computational demands and increased costs.

Our goal was to build a model that can select the appropriate subset of neurons on the fly, depending on the task; this is similar to, for instance, the way the brain relies on clumps of specialized neurons in the visual or auditory cortex to see or hear. Such an FM could adapt to multiple kinds of inputs, such as speech and text, over a range of languages, and produce multiple kinds of outputs.

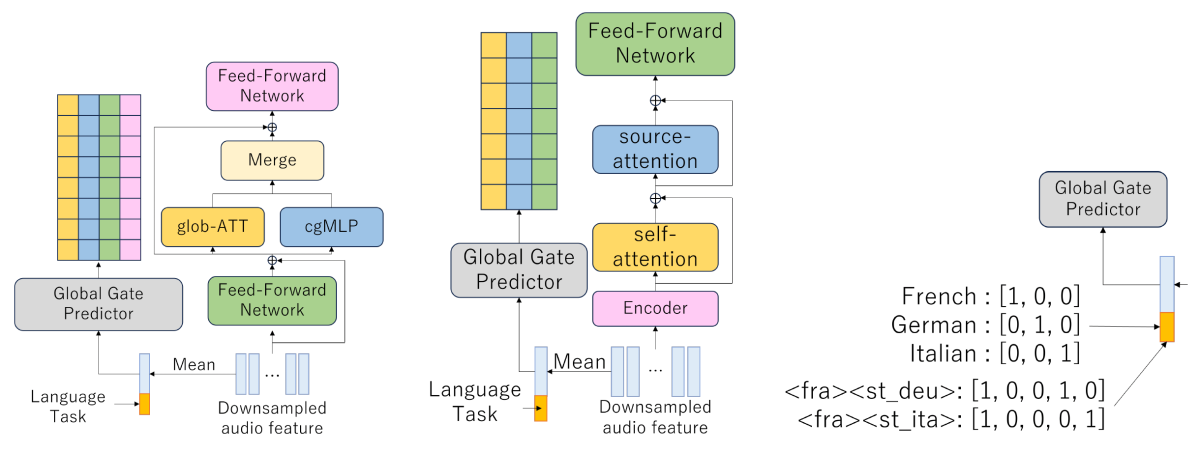

In a paper we presented at this year’s International Conference on Learning Representations (ICLR), we propose a novel context-aware FM for multilingual speech recognition, translation, and language identification. Rather than activating the whole network, this model selects bundles of neurons — or modules — to activate, depending on the input context. The input context includes characteristics such as what language the input is in, speech features of particular languages, and whether the task is speech translation, speech recognition, or language identification.

Once the model identifies the context, it predicts the likelihood of activating each of the modules. We call those likelihoods gate probabilities, and each one constitutes a filter that we call a gate predictor. If a gate probability exceeds some threshold, the corresponding module is activated.

For instance, based on a few words of spoken German, the model might predict, with a likelihood that crosses the gate threshold, that the context is “German audio.” That prediction opens up a subset of appropriate pathways and shuts down the others.
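To make the thresholding step concrete, here is a minimal sketch in Python. It is an illustration only, not the implementation from the paper: the module names, the logit values, the use of a sigmoid to turn scores into probabilities, and the 0.5 threshold are all assumptions chosen for the example.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw gate score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def select_modules(gate_logits: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Return the modules whose gate probability exceeds the threshold."""
    return [
        name
        for name, logit in gate_logits.items()
        if sigmoid(logit) > threshold
    ]


# Hypothetical gate scores for a "German audio" context (made-up values).
logits = {"german": 2.0, "english": -3.0, "speech": 1.5, "translation": -1.0}

active = select_modules(logits)
print(active)  # ['german', 'speech']
```

In this toy setting, raising the threshold activates fewer modules, which trades some capacity for less computation.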

Prior approaches to pruning have focused on fine-grained pruning of model layers and of convolutional kernels. Layer pruning, however, can impair a model’s structural integrity, while fine-grained kernel pruning can inhibit a model’s ability to adapt to different kinds of inputs.

Module-wise pruning lets us balance structural flexibility against the ability to interpret different contexts. The model is trained to dynamically prune irrelevant modules at runtime, which encourages each module to specialize in a different task.
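The runtime effect of module-wise pruning can be sketched as a forward pass that skips any module whose gate is closed. The sketch below is a simplified illustration under our own assumptions, not the architecture from the paper: modules are plain Python functions on vectors, each active module adds its output to a residual path, and `gated_forward` and `scale` are hypothetical helper names.

```python
from typing import Callable, Sequence

Vector = list[float]
Module = Callable[[Vector], Vector]


def scale(k: float) -> Module:
    """Toy stand-in for a learned module: multiplies every element by k."""
    return lambda v: [k * a for a in v]


def gated_forward(
    x: Vector,
    modules: Sequence[Module],
    gate_probs: Sequence[float],
    threshold: float = 0.5,
) -> tuple[Vector, int]:
    """Run only the modules whose gate probability exceeds the threshold.

    Skipped modules cost nothing: the input simply passes through on the
    residual path (an assumption of this sketch). Returns the output and
    the number of modules that were actually executed.
    """
    executed = 0
    for module, prob in zip(modules, gate_probs):
        if prob > threshold:
            update = module(x)
            x = [xi + ui for xi, ui in zip(x, update)]
            executed += 1
    return x, executed


modules = [scale(1.0), scale(10.0), scale(0.5)]
gate_probs = [0.9, 0.1, 0.7]  # the second module is pruned for this input

output, executed = gated_forward([1.0, 2.0], modules, gate_probs)
print(output, executed)  # [3.0, 6.0] 2
```

Because the pruned module is never called, the savings here come from skipped computation rather than from a smaller stored model.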

In experiments, our model demonstrated performance comparable to that of a traditional model but with 30% fewer GPUs, reducing costs and increasing speed.

In addition to saving computational resources, our approach also lets us observe how the model processes linguistic information during training. For each component of a task, we can see the probability distributions for the use of various modules. For instance, if we ask the model to transcribe German speech to text, only the modules for German language and spoken language are activated.
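One simple way to inspect module usage is to log the gate probabilities for each input and average them per task. The following sketch assumes a hypothetical log format of `(task, {module: probability})` pairs, with every record listing the same modules; the task labels and values are invented for illustration and are not results from our experiments.

```python
from collections import defaultdict


def mean_gate_probs(
    records: list[tuple[str, dict[str, float]]],
) -> dict[str, dict[str, float]]:
    """Average the logged gate probabilities per task and per module."""
    sums: dict[str, dict[str, float]] = defaultdict(lambda: defaultdict(float))
    counts: dict[str, int] = defaultdict(int)
    for task, probs in records:
        counts[task] += 1
        for module, prob in probs.items():
            sums[task][module] += prob
    return {
        task: {module: total / counts[task] for module, total in per_module.items()}
        for task, per_module in sums.items()
    }


# Hypothetical logged gate probabilities (made-up values).
records = [
    ("german_asr", {"german": 0.9, "english": 0.1}),
    ("german_asr", {"german": 0.7, "english": 0.3}),
    ("english_asr", {"german": 0.2, "english": 0.8}),
]

usage = mean_gate_probs(records)
for task, per_module in usage.items():
    print(task, {m: round(p, 2) for m, p in per_module.items()})
```

A table like this makes it easy to check whether a module is used almost exclusively for one language or task.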

This work focused on FMs that specialize in speech tasks. In the future, we aim to explore how this method could generalize to FMs that process even more inputs, including vision, speech, audio, and text.

Acknowledgements: We want to thank Shinji Watanabe, Masao Someki, Nathan Susanj, Jimmy Kunzmann, Ariya Rastrow, Ehry MacRostie, Markus Mueller, Yifan Peng, Siddhant Arora, Thanasis Mouchtaris, Rupak Swaminathan, Rajiv Dhawan, Xuandi Fu, Aram Galstyan, Denis Filimonov, and Sravan Bodapati for the helpful discussions.