Customer-obsessed science

Research areas


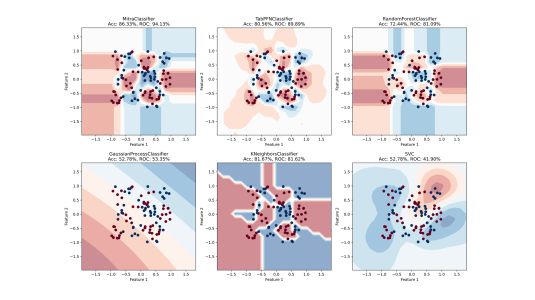

July 22, 2025

Generating diverse synthetic prior distributions leads to a tabular foundation model that outperforms task-specific baselines.

Featured news


IEEE Symposium on Security and Privacy 2025 (2025)

We propose plausible post-quantum (PQ) oblivious pseudorandom functions (OPRFs) based on the Power-Residue PRF (Damgård, CRYPTO '88), a generalization of the Legendre PRF. For security parameter $\lambda$, we consider the PRF $\mathsf{Gold}_k(x)$ that maps an integer $x$ modulo a public prime $p = 2^\lambda \cdot g + 1$ to the element $(k + x)^g \bmod p$, where $g$ is public and $\log g \approx 2\lambda$. At the core of our constructions are efficient novel methods…
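As an illustration of the PRF definition, here is a toy Python sketch that evaluates $\mathsf{Gold}_k(x) = (k + x)^g \bmod p$ with a public prime $p = 2^\lambda \cdot g + 1$ and $\log_2 g \approx 2\lambda$. It is a minimal sketch under stated assumptions: the parameter $\lambda = 8$ is hypothetical and far too small for security, the helper names (`is_prime`, `find_modulus`, `gold`) are illustrative, and it computes only the plain PRF, not the oblivious two-party protocols the abstract describes.

```python
# Toy sketch of the plain (non-oblivious) Gold PRF: Gold_k(x) = (k + x)^g mod p,
# with public prime p = 2^LAM * g + 1 and log2(g) ~ 2 * LAM.
# LAM = 8 is a hypothetical toy value; real parameters are far larger.
import secrets

LAM = 8  # toy security parameter (assumption, insecure)


def is_prime(n: int) -> bool:
    """Trial division; adequate only for the small toy moduli used here."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def find_modulus(lam: int) -> tuple[int, int]:
    """Return (p, g) with p = 2^lam * g + 1 prime and g >= 2^(2 * lam)."""
    g = 1 << (2 * lam)
    while not is_prime((1 << lam) * g + 1):
        g += 1
    return (1 << lam) * g + 1, g


P, G = find_modulus(LAM)


def gold(k: int, x: int) -> int:
    """Evaluate Gold_k(x) = (k + x)^g mod p."""
    return pow((k + x) % P, G, P)


if __name__ == "__main__":
    k = secrets.randbelow(P)  # secret key
    y = gold(k, 42)
    # Since p - 1 = 2^LAM * g, y^(2^LAM) = (k + x)^(p - 1) = 1 whenever k + x != 0 mod p.
    print(y, pow(y, 1 << LAM, P))
```

Because $p - 1 = 2^\lambda g$, raising $k + x$ to the power $g$ lands in the subgroup of $2^\lambda$-th roots of unity, so each output is one of $2^\lambda$ values determined by the power-residue symbol of $k + x$. Setting $\lambda = 1$ gives exponent $(p - 1)/2$, which is the Legendre PRF.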


CVPR 2025 Workshop on Efficient Large Vision Models (2025)

Diffusion models enable high-quality virtual try-on (VTO) thanks to their established image-synthesis abilities. Although current VTO methods involve extensive end-to-end training of large pre-trained models, real-world applications often must work within limited budgets for training, inference, serving, and deployment. To address this obstacle, we apply Doob’s h-transform efficient fine-tuning (DEFT) for…


EMNLP 2024 Workshop on Customizable NLP; Transactions on Machine Learning Research (2025)

Precisely estimating the downstream performance of large language models (LLMs) before training is essential for guiding their development. Scaling-law analysis uses statistics from a series of much smaller sampling language models (LMs) to predict the performance of the target LLM. For downstream performance prediction, the critical challenge lies in the emergent abilities of LLMs…
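The basic scaling-law idea (fit a trend on small models, extrapolate to the target size) can be sketched as a power-law fit in log-log space. This is a generic illustration, not the method of the work described above: the power-law form $L(N) = a N^{-b}$, the synthetic measurements, and the function names are all assumptions made for the example.

```python
import math


def fit_power_law(sizes, losses):
    """Least-squares fit of loss ~ a * size**(-b) in log-log space; returns (a, b)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(loss) for loss in losses]
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    # log(loss) = log(a) - b * log(size)
    return math.exp(intercept), -slope


def predict(a, b, size):
    """Extrapolate the fitted power law to a (larger) model size."""
    return a * size ** (-b)


# Hypothetical "small model" measurements generated from a known law.
small_sizes = [1e7, 3e7, 1e8, 3e8, 1e9]
small_losses = [5.0 * s ** -0.07 for s in small_sizes]

a, b = fit_power_law(small_sizes, small_losses)
print(f"a={a:.3f}, b={b:.4f}, predicted loss at 7e10 params: {predict(a, b, 7e10):.3f}")
```

A smooth fit like this works for quantities such as loss; downstream metrics affected by emergent abilities often do not follow such a curve, which is the difficulty the abstract points to.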


RSS 2025, ICRA 2025 (2025)

We consider the problem of designing a smooth trajectory that traverses a sequence of convex sets in minimum time, while satisfying given velocity and acceleration constraints. This problem is naturally formulated as a nonconvex program. To solve it, we propose a biconvex method that quickly produces an initial trajectory and iteratively refines it by solving two convex subproblems in alternation. This…


2025

With growing demands for efficiency, information retrieval has developed sparse retrieval and, going further, inference-free retrieval, in which documents are encoded at indexing time and queries require no model inference. Existing sparse retrieval models rely on FLOPS regularization for sparsification. While this mechanism was originally designed for Siamese encoders, it…
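The FLOPS regularizer referred to above is commonly defined (Paria et al., ICLR 2020, later used in SPLADE) as the sum over vocabulary terms of the squared mean absolute weight of that term across a batch. A minimal plain-Python sketch, assuming dense lists stand in for sparse term-weight vectors; the function name and toy weights are hypothetical.

```python
def flops_regularizer(batch):
    """FLOPS loss: sum over terms j of (mean over vectors i of |w_ij|)^2.

    batch: list of equal-length lists, one term-weight vector per text,
    with one entry per vocabulary term.
    """
    n = len(batch)
    vocab = len(batch[0])
    total = 0.0
    for j in range(vocab):
        # Average activation of term j across the batch (a proxy for how often it fires).
        mean_abs = sum(abs(vec[j]) for vec in batch) / n
        total += mean_abs ** 2
    return total


# Two toy documents over a 4-term vocabulary (hypothetical weights).
docs = [
    [0.0, 1.0, 0.0, 2.0],
    [0.0, 3.0, 0.0, 0.0],
]
print(flops_regularizer(docs))
```

The squaring approximates the expected cost of a sparse dot product when queries and documents share one encoder and hence one activation distribution, which is why the regularizer fits Siamese encoders; in inference-free retrieval, queries are not encoded by the model, so that assumption no longer holds.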

Academia

Whether you're a faculty member or a student, there are a number of ways you can engage with Amazon.
