In many of today’s industrial and online applications, identifying anomalies — rare, unexpected events — in real-time data streams is essential. Anomalies can indicate manufacturing defects, system failures, security breaches, or other significant events.

The typical machine-learning-based anomaly detection system is trained in a supervised manner, using labeled examples. But in many online settings, the data is so diverse, and its distribution shifts so often, that collecting and labeling enough data is prohibitively expensive.

Moreover, no single anomaly detection (AD) model works best across all data types. For instance, we observed that certain AD models worked well for one type of customer, while different models worked well for another type of customer. But it is not a priori obvious which model to deploy for a given customer, since customer workloads often change over time, and, consequently, so does the best-performing AD model.

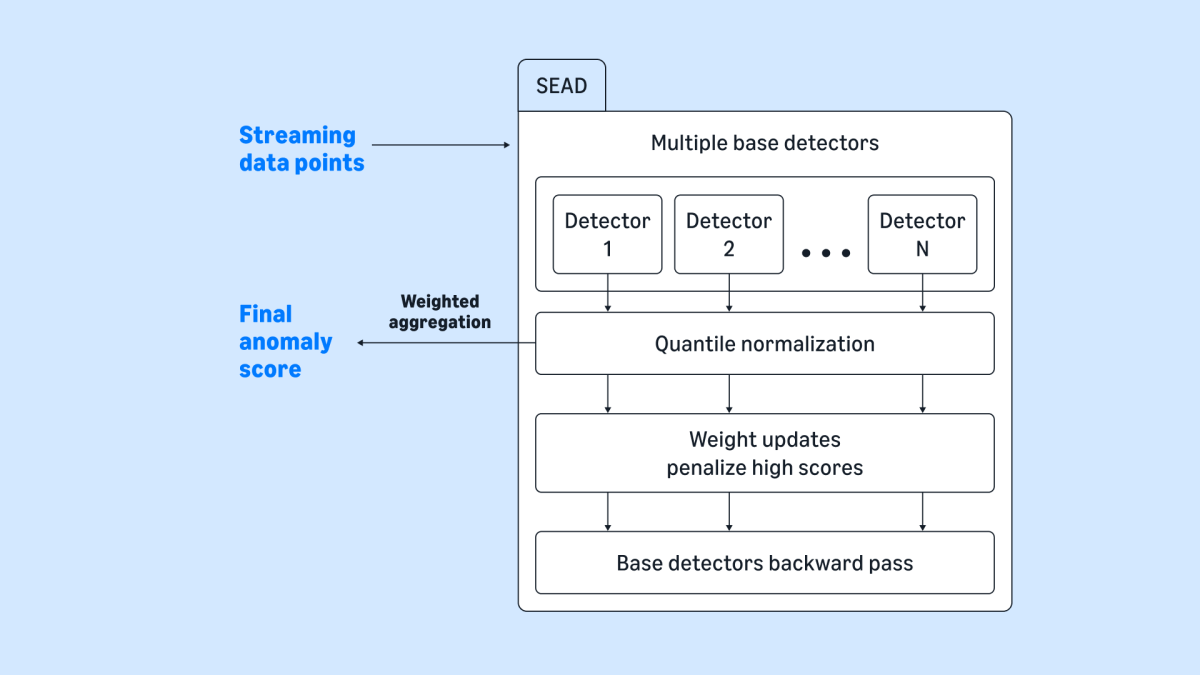

In a paper we’re presenting at the 2025 International Conference on Machine Learning (ICML), we attempt to address these problems with an approach we call SEAD, for streaming ensemble of anomaly detectors. SEAD uses an ensemble of anomaly detection models, so it always has recourse to the best model for each data type, and it operates in an unsupervised manner, so it doesn't need labeled anomaly data during training. It works efficiently in an online setting, processing data as it streams in, and it adapts dynamically to changes in the data.

To evaluate SEAD, we compared it to three previous anomaly detection models, each with four hyperparameter settings, and a rule-based method, for a total of 13 baselines. Across 15 different tasks, SEAD had the best average rank (5.07) and the lowest variance (6.64).

Rewarding reticence

The fundamental insight behind SEAD is that anomalies are rare. SEAD thus assigns higher weights to the models — or “base detectors” — in the ensemble that consistently produce lower anomaly scores. Since the different base detectors use different scoring systems, SEAD normalizes their scores by assigning them to different quantiles, according to the distribution of past scores.
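The quantile normalization described above can be illustrated with a minimal sketch. It assumes a normalized score is simply the fraction of a detector's past raw scores that are less than or equal to the new one. The class name `QuantileNormalizer`, the unbounded sorted history, and the default of 0.5 when no history exists are all illustrative assumptions, not details taken from the paper.

```python
from bisect import bisect_right, insort


class QuantileNormalizer:
    """Maps one detector's raw score to its empirical quantile among past scores.

    Hypothetical helper for illustration; not the paper's implementation.
    """

    def __init__(self):
        self._history = []  # past raw scores for this detector, kept sorted

    def normalize(self, raw_score: float) -> float:
        if not self._history:
            # Assumption: with no history, treat the score as the median.
            quantile = 0.5
        else:
            # Fraction of past scores that are <= the new raw score.
            rank = bisect_right(self._history, raw_score)
            quantile = rank / len(self._history)
        # Record the new score only after it has been ranked against the past.
        insort(self._history, raw_score)
        return quantile


# Detectors with very different raw scales end up on a common [0, 1] scale.
normalizer = QuantileNormalizer()
normalized = [normalizer.normalize(s) for s in [10.0, 20.0, 5.0, 15.0]]
```

Because each detector gets its own normalizer, a detector that scores in the thousands and one that scores between 0 and 1 become directly comparable. A deployed system would probably bound memory with a sliding window or a streaming quantile estimate rather than keep every past score.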

To compute the weights, we use the multiplicative weights update (MWU) mechanism, a standard method from the online-learning literature on combining the predictions of multiple experts. With MWU, each base detector is initialized with a starting weight. At the end of each round, each base detector’s new weight is its old weight multiplied by the exponential of the negative product of the learning rate and the normalized anomaly score that the detector output during that round.

After all the base detectors have been updated in this way, their weights are normalized so that they sum to 1. Through this process, detectors that consistently output larger scores will start getting lower weights. The technical insight of our work is to apply this classical MWU idea, originally proposed for the supervised setting, to the unsupervised setting of anomaly detection.
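As a rough sketch of one such round, the update and renormalization might look like the following. The function name `mwu_update`, the uniform starting weights, and the learning-rate values are assumptions made for illustration; the article does not specify them.

```python
import math


def mwu_update(weights, normalized_scores, learning_rate=0.1):
    """One multiplicative-weights round over the base detectors.

    Each weight is multiplied by exp(-learning_rate * score), then all
    weights are rescaled to sum to 1. Hypothetical helper for illustration.
    """
    if len(weights) != len(normalized_scores):
        raise ValueError("need exactly one score per base detector")
    updated = [
        w * math.exp(-learning_rate * s)
        for w, s in zip(weights, normalized_scores)
    ]
    total = sum(updated)
    return [w / total for w in updated]


# Three detectors, starting from uniform weights (an assumption).
weights = [1 / 3] * 3
for _ in range(50):
    # Detector 0 stays quiet, detector 2 fires on almost every round.
    weights = mwu_update(weights, [0.1, 0.5, 0.9], learning_rate=0.5)
```

After these 50 rounds, nearly all of the weight sits on the detector that consistently reported low scores, while the detector that fired constantly has been pushed toward zero.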

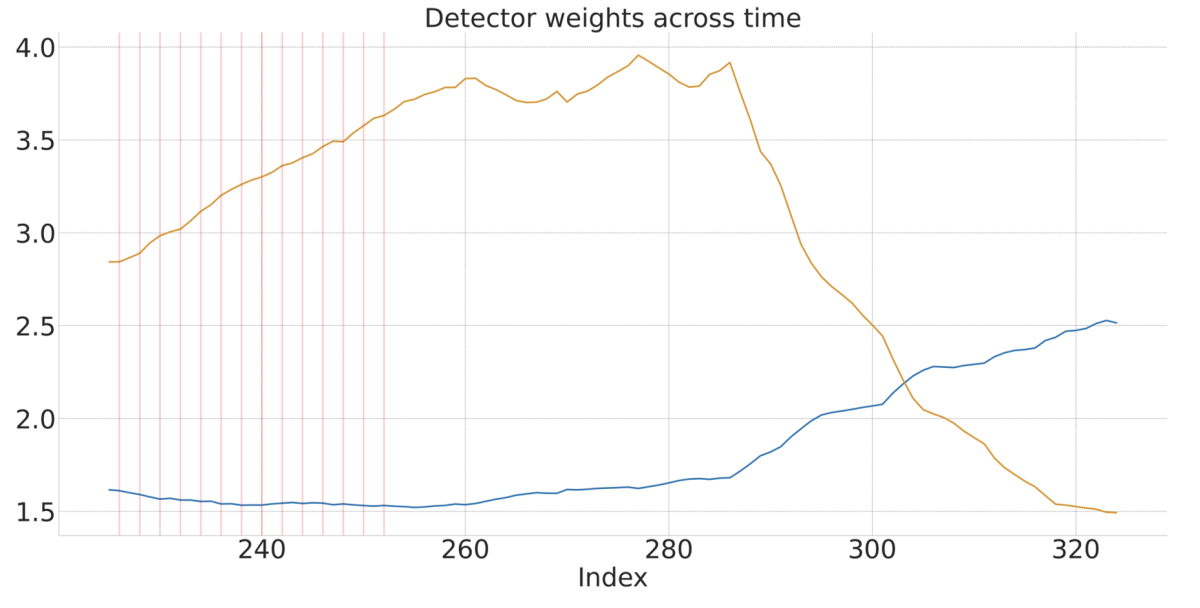

During the model evaluation, we were able to see the algorithm reweight base detectors on the basis of the input data. On one dataset, SEAD assigned high weights to two different models, both of which consistently identified anomalies during a phase of the testing that involved truly anomalous data. After that phase, however, on clean data, one of the models continued firing, and SEAD quickly reduced its weight.

To further investigate SEAD’s ability to weight models appropriately, we augmented the 13 models in our ensemble with 13 additional algorithms, which simply generated scores at random. On our test set, SEAD’s accuracy dropped by only 0.88%, indicating that our update algorithm did a good job of quickly weeding out the unreliable models.

Computational efficiency

One drawback to ensemble approaches like SEAD is that running multiple models at once incurs computational overhead. To address this, we experimented with an approach, dubbed SEAD++, that randomly samples a subset of the ensemble models, with probability proportional to their weights. This resulted in a roughly twofold speedup relative to the original SEAD, with minimal accuracy trade-offs. SEAD++ is thus a promising alternative in use cases where computational resources are at a premium.
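The sampling step can be sketched as below, under the assumption that a fixed number `k` of distinct detectors is drawn each round, one at a time, with each draw proportional to the current weights. The function name `sample_detectors`, the parameter `k`, and the without-replacement scheme are illustrative choices; the article says only that the subset is sampled with probability proportional to the weights.

```python
import random


def sample_detectors(weights, k, rng=None):
    """Pick k distinct detector indices, each draw proportional to its weight.

    Hypothetical helper for illustration; assumes all weights are positive.
    """
    if not 0 < k <= len(weights):
        raise ValueError("k must be between 1 and the number of detectors")
    rng = rng or random.Random()
    remaining = list(range(len(weights)))
    chosen = []
    for _ in range(k):
        # Draw among the detectors not yet chosen, weighted by current weight.
        pool_weights = [weights[i] for i in remaining]
        pick = rng.choices(remaining, weights=pool_weights, k=1)[0]
        chosen.append(pick)
        remaining.remove(pick)
    return chosen


# Run only 2 of 4 detectors this round; heavily weighted ones are favored.
active = sample_detectors([0.7, 0.2, 0.05, 0.05], k=2, rng=random.Random(0))
```

Only the detectors in `active` would be run on the incoming data point, which is where the savings come from. How the scores of the sampled detectors are combined, and how the weights of unsampled detectors are handled, is not covered in this sketch.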

SEAD represents a step forward for anomaly detection on streaming data. By continually reweighting a pool of candidate models in real time, it favors whichever detectors currently suit the data, without requiring labels. Its unsupervised, online nature, combined with its adaptability, makes it a valuable tool for a range of streaming applications.