For a human to drive successfully around an urban environment, they must be able to trust their eyes and other senses, know where they are, understand the permissible ways to move their vehicle safely, and of course know how to reach their destination.

Building these abilities, and so many more, into an autonomous electric vehicle designed to transport customers smoothly and safely around densely populated cities takes an astonishing amount of technological innovation. Since its founding in 2014, Zoox has been developing autonomous ride-hailing vehicles, and the systems that support them, from the ground up. The company, which is based in Foster City, California, became an independent subsidiary of Amazon in 2020.

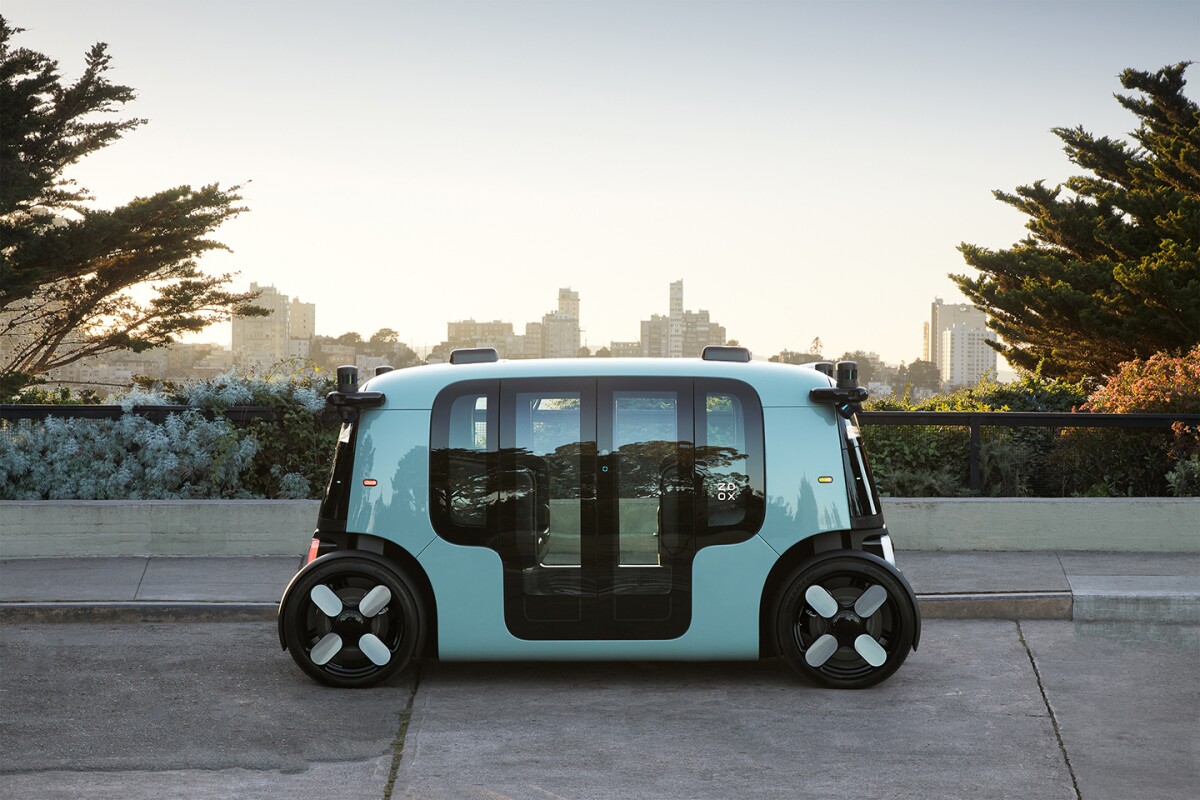

The Zoox purpose-built robot is an autonomous, pod-like electric vehicle that can carry four passengers in comfort. It has no forward or backward, being equally happy to drive in either direction, at up to 75 miles per hour, and can move all four wheels independently. There are no manual driving controls inside the vehicle.

Zoox has already done a great deal of testing of its autonomous driving systems using a fleet of retrofitted Toyota Highlander vehicles — with a human driver at the wheel, ready to take over if needed — in San Francisco, Las Vegas, Foster City, and Seattle.

Central to the Zoox navigation system is a cluster of capabilities called calibration, localization, and mapping. Only through this combination of abilities can Zoox vehicles understand their environment with exquisite precision, know where they are in relation to everything in their vicinity and beyond, and know exactly where they are going.

This is the domain of Zoox’s CLAMS (Calibration, Localization, and Mapping Simultaneously) and Zoox Road Network (ZRN) teams, which together enable the vehicle to meaningfully understand its location and process its surroundings. To get an idea of how these elements work in concert, Amazon Science spoke to several members of these teams.

In terms of awareness of its environment, the Zoox vehicle can fairly be likened to an all-seeing eye. Its state-of-the-art sensor architecture is made up of LiDARs (Light Detection and Ranging), radars, visual cameras, and longwave-infrared cameras. These are arrayed symmetrically around the outside of the vehicle, providing an overlapping, 360-degree field of view.

With this many sensors in play, it is critical that their input is stitched together accurately to create a true and self-consistent picture of everything happening all around the vehicle, moment to moment. To do that, the vehicle needs to know exactly where its sensors are in relation to each other, and with sensors of such high resolution, it’s not enough simply to know where the sensors were attached to the vehicle in the first place.

“To a very minor but still important degree, every vehicle is a special snowflake in some way,” says Taylor Arnicar, staff technical program manager, who oversees the CLAMS and ZRN teams. “And the other reality is we’re exposing these vehicles to rather harsh real-world conditions. There’s shock and vibe, thermal events, and all these things can cause very slight changes in sensor positioning.” Ignoring such changes could result in unacceptably “blurry” vision, Arnicar says.

In other autonomous-robotics applications, sensor calibration typically entails the robot looking at a specific calibration target, displayed on surrounding infrastructure, such as a wall. With the Zoox vehicle destined for the ever-changing urban environment, the Zoox team is pioneering infrastructure-free calibration.

“That means we rely on the natural environment — whatever objects, shapes, and colors are in the world around the vehicle as it drives,” says Arnicar. One way the team does this is by automatically identifying image gradients — such as the edges of buildings or the trunks of trees — from the vehicle’s color camera data and aligning those with depth edges in the LiDAR data.
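
To make the idea concrete, here is a minimal sketch of edge-based alignment, not Zoox's actual method. It assumes the LiDAR depth edges have already been projected into image pixel coordinates, and it searches only a small integer pixel shift rather than a full six-degree-of-freedom sensor pose. The function names (`gradient_magnitude`, `alignment_score`, `best_offset`) are hypothetical.

```python
import numpy as np

def gradient_magnitude(image):
    """Per-pixel edge strength of a grayscale image."""
    gy, gx = np.gradient(image.astype(float))
    return np.hypot(gx, gy)

def alignment_score(edge_map, pixels):
    """Sum camera edge strength at the (row, col) pixels where LiDAR depth edges land."""
    rows, cols = pixels[:, 0], pixels[:, 1]
    height, width = edge_map.shape
    inside = (rows >= 0) & (rows < height) & (cols >= 0) & (cols < width)
    return edge_map[rows[inside], cols[inside]].sum()

def best_offset(edge_map, lidar_edge_pixels, search=3):
    """Brute-force the integer (row, col) shift that best aligns LiDAR edges with image edges."""
    candidates = [(dr, dc)
                  for dr in range(-search, search + 1)
                  for dc in range(-search, search + 1)]
    return max(candidates,
               key=lambda d: alignment_score(edge_map, lidar_edge_pixels + np.array(d)))
```

A well-calibrated pair of sensors should score highest at a shift of zero. A consistently non-zero best shift would suggest that the assumed sensor positions have drifted.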

The Zoox vehicle’s superpower is its superhuman perception of its surroundings. With so many sensors mounted externally, in pods on the corners of the vehicle, it can see what’s coming around every corner before a human driver would. Its LiDARs and visual cameras mean it knows what lies in every direction with high precision. It even boasts a kind of X-ray vision: “Certain materials don’t obstruct the radar,” says Elena Strumm, Zoox’s engineering manager for mapping algorithms. “When a bicyclist is cycling behind a bush, for example, we might get a really clear radar signature on them, even if that bush has occluded the LiDAR and visual cameras.”

Now that the vehicle can rely on what it senses, it needs a map. The Zoox team gathers its map data first-hand by driving Toyota Highlanders retrofitted with the full Zoox sensor architecture around the cities in which it will operate. LiDAR data and visual images collected in this way can be made into high-definition maps by the CLAMS team. But first, all the people, cars, and other ephemeral aspects of the urban landscape must be removed from the LiDAR data. For this, machine learning is required.

When the Zoox vehicle is in normal urban operations, it is fundamental that its perception system recognizes the aspects of incoming LiDAR data that represent pedestrians, cyclists, cars or trucks — or indeed anything that may move in ways that need to be anticipated. LiDARs create enormous amounts of information about the dynamic 3D environment around the vehicle in the form of “point clouds” — sets of points that describe the objects and surfaces visible to the LiDAR. Using machine learning to instantly identify people in a fast-moving, dynamic environment is a challenge, particularly as people may be moving, static, partly occluded, in a wheelchair, only visible from the knees down, or any number of possibilities.

“Machine-learned AI systems excel at this kind of pattern-matching problem. You feed millions of examples of something and then they can do a great job of recognizing that thing in the abstract,” Arnicar explains.

In a beautiful piece of synergy, the Zoox mapping team benefits from this safety-critical application of machine learning because they require the reverse information — they want to take the people and cars out of the data so that they can create 3D maps of the road landscape and infrastructure alone.

“Once these elements are identified and removed from the mapping data, it becomes possible to combine LiDAR-based point clouds from overlapping locations to create high resolution 3D maps,” says Strumm.
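
The two steps Strumm describes can be sketched roughly as follows. This is an illustration under stated assumptions, not the CLAMS pipeline: it assumes each point already carries a class label from a perception model, that all clouds have been transformed into a shared map frame, and that voxel averaging is an acceptable way to merge overlapping scans. The label names and function names are hypothetical.

```python
import numpy as np

# Hypothetical class names for things that should not end up in a static map.
DYNAMIC_LABELS = {"pedestrian", "cyclist", "car", "truck"}

def remove_dynamic(points, labels):
    """Keep only the points whose per-point label is not a dynamic class."""
    keep = np.array([label not in DYNAMIC_LABELS for label in labels], dtype=bool)
    return points[keep]

def merge_clouds(clouds, voxel_size=0.1):
    """Stack clouds that share a map frame and keep one centroid per occupied voxel."""
    stacked = np.vstack(clouds)
    voxel_ids = np.floor(stacked / voxel_size).astype(np.int64)
    _, inverse = np.unique(voxel_ids, axis=0, return_inverse=True)
    inverse = inverse.ravel()  # the shape of `inverse` differs across NumPy versions
    counts = np.bincount(inverse)
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, stacked)  # accumulate the points falling in each voxel
    return sums / counts[:, None]
```

Averaging per voxel keeps the merged map at a bounded density where scans overlap, at the cost of smoothing detail finer than `voxel_size`.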

But maps are not useful to the vehicle without meaning. To create a “semantic map,” the ZRN team adds layers of information to the 3D map that encode everything static that the vehicle needs to navigate the road safely, including speed limits, traffic light locations, one-way streets, keep-clear zones and more.
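
One way to picture such layers is as structured records attached to the geometry. The sketch below is purely illustrative. The class and field names (`LaneSegment`, `SemanticMap`, `speed_limit_mph`, and so on) are assumptions, not the ZRN team's schema.

```python
from dataclasses import dataclass, field

@dataclass
class LaneSegment:
    """A stretch of lane plus the static rules that apply to it (hypothetical schema)."""
    segment_id: str
    centerline: list[tuple[float, float]]  # map-frame (x, y) points, in meters
    speed_limit_mph: float
    one_way: bool = False
    keep_clear: bool = False

@dataclass
class SemanticMap:
    """Semantic layers stored alongside the 3D map."""
    lanes: dict[str, LaneSegment] = field(default_factory=dict)
    traffic_lights: dict[str, tuple[float, float, float]] = field(default_factory=dict)

    def add_lane(self, lane: LaneSegment) -> None:
        self.lanes[lane.segment_id] = lane

    def speed_limit(self, segment_id: str) -> float:
        return self.lanes[segment_id].speed_limit_mph
```

A planner could then look up `semantic_map.speed_limit("some-segment")` for the segment it is driving on instead of inferring the limit from raw sensor data.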

The final core piece of the CLAMS team’s work is localization. Zoox’s localization technology allows each vehicle to know where it is in the world — and on its map — to within a few centimeters, and its direction to within a fraction of a degree. The vehicle does this not only by comparing its visual inputs with its map, but also by utilizing GPS, accelerometers, wheel speeds, gyroscopes, and more. It can therefore check its precise location and velocity hundreds of times per second. Armed with a combination of the physical and semantic maps, and always aware of its place in relation to every object or person in its vicinity, the vehicle can navigate safely to its destination.
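
A textbook way to combine motion sensors with absolute position fixes is a Kalman filter. The one-dimensional sketch below shows the principle only. The article does not say which estimator Zoox uses, and the function names and noise values here are assumptions.

```python
def predict(position, variance, velocity, dt, process_var):
    """Dead-reckon forward from a wheel-speed velocity; uncertainty grows."""
    return position + velocity * dt, variance + process_var

def correct(position, variance, measurement, measurement_var):
    """Blend in an absolute fix (for example GPS or a map match); uncertainty shrinks."""
    gain = variance / (variance + measurement_var)
    return position + gain * (measurement - position), (1.0 - gain) * variance

# One cycle: move at 10 m/s for 0.1 s, then receive a position fix of 1.2 m.
position, variance = predict(0.0, 1.0, velocity=10.0, dt=0.1, process_var=0.01)
position, variance = correct(position, variance, measurement=1.2, measurement_var=1.01)
```

Running the predict step at a high rate and the correct step whenever a fix arrives is what lets an estimator of this kind report a pose many times per second, even when individual sensors update more slowly.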

Part of the localization challenge is that any map will become dated over time, Arnicar explains. “Once you build the map — from the moment the data is collected — you need to consider that it could be out of date.” This is because the world can change anytime, anywhere, without notice. “On one occasion one of our Toyota Highlanders was driving down the street collecting data, and right in front of us was a construction truck with a guy hanging off the back, repainting the lane line in a different place as they drove along. No amount of fast mapping can catch up with these sorts of scenarios.” In practice, this means the map needs to be treated as a guidebook for the vehicle, not as gospel.

“This changeability of the real world led us to create the ZRN Monitor, a system on the vehicle that determines whether the actual road environment has differed from our semantic map data,” says Chris Gibson, engineering manager for the Zoox Road Network team. “For example, if lane markings have changed and now the double yellow lines have moved, then if we don’t detect that dynamically, we could potentially end up driving into opposing traffic. From a safety perspective, we must make absolutely certain that the vehicle does not drive into such areas.” The ZRN Monitor’s role is to identify and, to an extent, evaluate the safety implications of such unanticipated environmental modifications. These notifications are also an indication that it may be time to update the map for that area with more recent sensor data.
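
As a rough illustration of this kind of check (not the ZRN Monitor itself), the sketch below compares a lane line as stored in the map with the same line as currently observed, and flags a change when they diverge by more than a tolerance. The function names and the 0.3-meter tolerance are assumptions.

```python
import numpy as np

def map_deviation(mapped_line, observed_line):
    """Largest distance from any observed point to its nearest mapped point, in meters."""
    diffs = observed_line[:, None, :] - mapped_line[None, :, :]
    nearest = np.linalg.norm(diffs, axis=2).min(axis=1)
    return nearest.max()

def road_changed(mapped_line, observed_line, tolerance_m=0.3):
    """True when the observed lane line has moved further than the tolerance."""
    return bool(map_deviation(mapped_line, observed_line) > tolerance_m)
```

A production system would also have to account for sensor noise, occlusion, and localization error before concluding that the road itself has changed.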

In the uncommon case where the vehicle encounters a challenging driving situation and isn’t highly confident of a safe way to proceed, it can request “TeleGuidance”: a human operator located in a dedicated service center is provided with the vehicle’s full 3D understanding of its environment, as well as live-streamed sensor data.

“Imagine a construction zone. The Zoox vehicle might need to be directed to drive on the other side of the road, which would normally carry oncoming traffic. That’s a rule that under most circumstances you shouldn’t break, but in this instance, a TeleGuidance tactician might provide the robot with waypoints to ensure it knows where it needs to go in that moment,” says Gibson. The vehicle remains responsible for the safety of its passengers, however, and continues to drive autonomously at all times while acting on the TeleGuidance information.
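
The division of responsibility Gibson describes, in which the operator suggests and the vehicle decides, can be sketched as below. This is a hypothetical illustration only. The `Waypoint` type, the `accept_waypoints` function, and the `is_clear` safety check are assumptions, not Zoox interfaces.

```python
from dataclasses import dataclass

@dataclass
class Waypoint:
    x: float  # map-frame position, in meters
    y: float

def accept_waypoints(waypoints, is_clear):
    """Follow operator waypoints in order, stopping before the first one
    that the vehicle's own safety check rejects."""
    accepted = []
    for waypoint in waypoints:
        if not is_clear(waypoint):
            break
        accepted.append(waypoint)
    return accepted
```

Here `is_clear` stands in for whatever onboard checks the vehicle runs, so guidance from the operator never bypasses them.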

Before paying customers can use their smartphones to hail a Zoox vehicle, more on-road testing is needed. Zoox has produced dozens of its purpose-built vehicles and is testing them on “semi-private courses” in California, according to Zoox’s co-founder and chief technology officer, Jesse Levinson. Next on the agenda is full testing on public roads, says Levinson, who promises that is “really not that far away. We’re not talking about years.”

So, what does it feel like to be transported in a Zoox vehicle?

“I’ve ridden in a Zoox vehicle, with no safety driver, no steering wheel, no anything, just me in the vehicle,” says Arnicar. “And it is magical. It’s what I’ve been working at Zoox seven years to experience. I’ve seen Zoox go from sketches on a napkin to something I can ride in. That’s pretty amazing.”

When an autonomous Zoox vehicle ultimately comes around a corner near you, know this for a fact: no matter how striking and novel it looks, it will see you before you see it.