We as humans take for granted our ability to operate in ever-changing home environments. Every morning, we can get from our bed to the kitchen, even after we rearrange the furniture or move chairs around, or when family members leave their shoes and bags in the middle of the hallway.

This is because humans develop a deep contextual understanding of their environments that is invariant to a variety of changes. That understanding is enabled by superior sensors (eyes, ears, and touch), a powerful computer (the brain), and vast memory. However, for a robot that has finite sensors, computational power, and memory, dealing with a challenging dynamic environment requires innovative new algorithms and representations.

At Amazon, scientists and engineers have been investigating ways to help Astro know where it is at all times in a customer's home with few to no assumptions about the environment. Astro’s Intelligent Motion system relies on visual simultaneous localization and mapping, or V-SLAM, which enables a robot to use visual data to simultaneously construct a map of its environment and determine its position on that map.

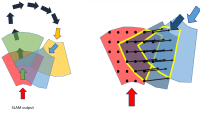

A V-SLAM system typically consists of a visual odometry tracker, a nonlinear optimizer, a loop-closure detector, and mapping components. The front end of Astro’s system performs visual odometry by extracting visual features from sensor data, establishing correspondences between features from different sensor feeds, and tracking the features from frame to frame in order to estimate sensor movement.
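To make two of these front-end steps concrete, here is a minimal Python sketch, not Astro's implementation. It matches feature descriptors between frames with a nearest-neighbor search and Lowe's ratio test, then estimates planar motion from the matched points with a closed-form least-squares (2-D Procrustes) fit. The function names are hypothetical. The sketch also assumes the matched features have already been placed in metric floor-plane coordinates (for example, using depth), so the robot's motion reduces to a 2-D rotation and translation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def match_features(desc_a: List[Sequence[float]], desc_b: List[Sequence[float]],
                   ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Match descriptors from frame A to frame B (nearest neighbour + ratio test)."""
    matches = []
    for i, da in enumerate(desc_a):
        # Squared Euclidean distance from descriptor i to every descriptor in frame B.
        dists = sorted(
            (sum((x - y) ** 2 for x, y in zip(da, db)), j)
            for j, db in enumerate(desc_b)
        )
        if len(dists) < 2:
            continue  # the ratio test needs a runner-up
        (best, j), (second, _) = dists[0], dists[1]
        # Keep the match only if it is clearly better than the second-best candidate
        # (compared on squared distances, hence ratio ** 2).
        if best < ratio ** 2 * second:
            matches.append((i, j))
    return matches


def estimate_planar_motion(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rigid 2-D transform (theta, tx, ty) that maps src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # Accumulate cross and dot products of the centred point sets.
    s_cross = s_dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        s_cross += px * qy - py * qx
        s_dot += px * qx + py * qy
    theta = math.atan2(s_cross, s_dot)  # closed-form optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)
```

For example, `match_features([[0, 0], [10, 10]], [[10.1, 10], [0.1, 0], [5, 5]])` pairs each descriptor with its near-duplicate and returns `[(0, 1), (1, 0)]`. A production front end would typically wrap the motion fit in outlier rejection such as RANSAC, because even a few wrong matches can skew a least-squares estimate.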

Loop-closure detection tries to match the features in the current frame with those previously seen to correct for accumulated inaccuracies in visual odometry. Astro then processes the visual features, estimated sensor poses, and loop-closure information and jointly optimizes them to obtain a global motion trajectory and map.
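Loop-closure detection is often framed as place recognition. This minimal sketch assumes each keyframe has already been reduced to a list of quantized "visual word" IDs. That bag-of-words representation is one common approach; the article does not say which one Astro uses. The sketch scores older keyframes by cosine similarity and skips recent ones, which would otherwise match trivially. The names `bow_vector`, `detect_loop`, `min_gap`, and `threshold` are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Optional


def bow_vector(word_ids: List[int]) -> Counter:
    """Histogram of quantised visual-word IDs observed in one keyframe."""
    return Counter(word_ids)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word histograms."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def detect_loop(current_id: int, current: Counter, database: Dict[int, Counter],
                min_gap: int = 30, threshold: float = 0.75) -> Optional[int]:
    """Return the ID of the most similar sufficiently old keyframe, or None."""
    best_id, best_score = None, threshold
    for kf_id, hist in database.items():
        # Recent keyframes trivially resemble the current view, so skip them.
        if current_id - kf_id < min_gap:
            continue
        score = cosine(current, hist)
        if score >= best_score:
            best_id, best_score = kf_id, score
    return best_id
```

A candidate returned here would normally still be verified geometrically before its constraint is passed to the optimizer.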

State-of-the-art research on V-SLAM assumes that the robot’s environment is mostly static. But that assumption can’t be expected to hold in customers’ homes.

For Astro to localize robustly in home environments, we had to overcome a number of challenges, which we discuss in the following sections.

Environmental dynamics

Changes in the home happen at varying time scales: short-term changes, such as the presence of pets and people; medium-term changes, such as the appearance of objects like boxes, bags, or chairs that have been moved around; and long-term changes, such as holiday decorations, large-furniture rearrangements, or even structural changes to walls during renovations.

In addition, the lighting inside homes changes constantly as the sun moves and indoor lights are turned on and off, shading and illuminating rooms and furniture in ways that can make the same scene look very different at different times. Astro must be able to operate across all lighting conditions, including total darkness.

While industrial robots can function in controlled environments whose variations are precoded as rules in software programs, adapting to unscripted environmental changes is one of the fundamental challenges the Astro team had to solve. The Intelligent Motion system needs a high-level visual understanding of its environment, such that invariant visual cues can be extracted and described programmatically.
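A classic handcrafted illustration of an "invariant" visual cue is zero-mean normalized cross-correlation (ZNCC). This sketch only demonstrates the idea of invariance and is not the learned approach the Astro team used. It compares two image patches, flattened to lists of intensities, and scores them identically whether or not one is uniformly darker or lower-contrast than the other. The function name is hypothetical.

```python
import math
from typing import Sequence


def zncc(patch_a: Sequence[float], patch_b: Sequence[float]) -> float:
    """Zero-mean normalised cross-correlation of two equal-size patches.

    Subtracting each patch's mean cancels a uniform brightness offset, and
    dividing by the spreads cancels a uniform contrast gain, so the score is
    unchanged by any affine intensity change I' = gain * I + offset (gain > 0).
    """
    n = len(patch_a)
    mean_a = sum(patch_a) / n
    mean_b = sum(patch_b) / n
    da = [x - mean_a for x in patch_a]
    db = [y - mean_b for y in patch_b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return numerator / denominator if denominator > 0 else 0.0
```

ZNCC cancels only uniform brightness and contrast changes across a patch. Shadows, occlusions, and viewpoint changes break that assumption, which is one motivation for learned descriptors.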

Astro uses deep-learning algorithms trained with millions of image pairs, both captured and synthesized, that depict similar scenes at different times of day. Those images mimic a variety of possible scenarios Astro may face in a real customer’s home, such as different scene layouts, lighting and perspective changes, occlusions, object movements, and decorations.
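Training on pairs of images of the same scene typically relies on a metric-learning objective that pulls the embeddings of matching views together and pushes non-matching ones apart. This minimal sketch computes the classic margin-based contrastive loss over precomputed embedding pairs. The article does not say which loss Astro's models use, so treat this as one representative choice. The `margin` value, the `same_place` labels, and the function names are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(pairs: List[Tuple[Sequence[float], Sequence[float], int]],
                     margin: float = 1.0) -> float:
    """Mean contrastive loss over (embedding_a, embedding_b, same_place) triples.

    same_place = 1: the two images show the same place, so penalise distance squared.
    same_place = 0: different places, so penalise them until they are `margin` apart.
    """
    total = 0.0
    for emb_a, emb_b, same_place in pairs:
        d = euclidean(emb_a, emb_b)
        if same_place:
            total += d ** 2
        else:
            total += max(0.0, margin - d) ** 2
    return total / len(pairs)
```

A matching pair at distance 0.5 contributes 0.25, whereas non-matching pairs already at least `margin` apart contribute nothing, so training effort concentrates on the confusable cases.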

Astro’s algorithms also enable it to adapt to an environment that it has never seen before (like a new customer’s home). The development of those algorithms required a highly accurate and scalable ground-truth mechanism that can be conveniently deployed to homes and allows the team to test and improve the robustness of the V-SLAM system.

In the figure below, for instance, a floor plan of the home was acquired ahead of time, and device motion was then estimated from sensor data at centimeter-level accuracy.
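Once a centimeter-accurate ground-truth trajectory is available, a common way to score a V-SLAM run against it is the absolute trajectory error (ATE), the root-mean-square distance between estimated and reference positions. This minimal sketch assumes the two trajectories are already time-synchronized and expressed in the same frame (such as the floor-plan frame). Standard ATE tooling usually performs that alignment first. The function name is hypothetical.

```python
import math
from typing import List, Tuple

Position = Tuple[float, float, float]


def absolute_trajectory_rmse(estimated: List[Position], ground_truth: List[Position]) -> float:
    """Root-mean-square position error (same units as the input, e.g. metres)."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

For example, position errors of 0, 3, and 4 cm at three timestamps give an ATE of about 2.9 cm.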

Using sensor fusion to improve localization

In order to improve the accuracy and robustness of localization, Astro fuses data from its navigation sensors with data from its wheel encoders and an inertial measurement unit (IMU), which uses gyroscopes and accelerometers to gauge motion. Each of these sensors has limitations that can affect Astro’s ability to localize. To determine which sensors can be trusted at a given time, it is important to understand their noise characteristics and failure modes.
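The idea of trusting each sensor according to its noise can be illustrated with a textbook scalar Kalman filter on heading. The gyro drives the prediction, and uncertainty grows while its rate is integrated. An absolute heading from vision then corrects the estimate in proportion to the two variances. The `HeadingFilter` class and its noise values are hypothetical, and this is not Astro's estimator.

```python
from dataclasses import dataclass


@dataclass
class HeadingFilter:
    """Scalar Kalman filter for robot heading in radians (angle wrap ignored)."""
    heading: float = 0.0
    variance: float = 1.0
    gyro_noise: float = 0.01  # variance added per second of gyro integration (assumed)

    def predict(self, yaw_rate: float, dt: float) -> None:
        # Integrate the gyro; uncertainty grows with time since the last correction.
        self.heading += yaw_rate * dt
        self.variance += self.gyro_noise * dt

    def update(self, measured_heading: float, measurement_variance: float) -> None:
        # Kalman gain: the share of the innovation we trust, given both variances.
        gain = self.variance / (self.variance + measurement_variance)
        self.heading += gain * (measured_heading - self.heading)
        self.variance *= 1.0 - gain
```

When the visual measurement is unreliable (for example, under motion blur), passing a large `measurement_variance` drives the gain, and therefore the correction, close to zero.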

For example, when Astro drives over a door threshold, the IMU can saturate and give an erroneous reading. Or if Astro drives over a flooring surface where its wheels slip, its wheel encoders can give an inaccurate reading. Visual factors such as changing illumination and motion blur can also degrade the camera data.
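Failure modes like these can often be caught with simple plausibility checks before the data reaches the estimator. This sketch flags IMU saturation when any reading reaches an assumed full-scale limit. It suspects wheel slip when the wheel-derived yaw rate disagrees with a healthy gyro. The `SensorSample` type, the `check_sample` function, and every threshold (roughly ±4 g and ±500°/s here) are hypothetical placeholders. Real limits would come from the IMU's datasheet and testing.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class SensorSample:
    wheel_yaw_rate: float               # rad/s, from differential wheel-encoder speeds
    gyro_yaw_rate: float                # rad/s, from the IMU gyroscope
    accel: Tuple[float, float, float]   # m/s^2, per-axis accelerometer reading


def check_sample(s: SensorSample, accel_limit: float = 39.0,
                 gyro_limit: float = 8.7, slip_tolerance: float = 0.3) -> Dict[str, bool]:
    """Flag readings that should be down-weighted or dropped before fusion."""
    # A reading at (or beyond) full scale means the true value may be clipped.
    imu_saturated = (any(abs(a) >= accel_limit for a in s.accel)
                     or abs(s.gyro_yaw_rate) >= gyro_limit)
    # If the gyro is trustworthy but disagrees with the wheels, suspect wheel slip.
    wheel_slip = (not imu_saturated
                  and abs(s.wheel_yaw_rate - s.gyro_yaw_rate) > slip_tolerance)
    return {"imu_saturated": imu_saturated, "wheel_slip": wheel_slip}
```

Comparing yaw rates only catches slip that shows up as rotation. Straight-line slip would need another cue, such as disagreement with visual odometry.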

The Astro team also had to account for a variety of use cases that would predictably cause sensor errors. For example, the team had to ensure that when Astro is lifted off the floor, the wheel encoder data is handled appropriately, and when the device enters low-power mode, certain sensor data is not processed.
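Predictable situations such as being lifted or entering low-power mode can be handled as explicit modes that decide which sensor streams the estimator consumes. The `RobotMode` enum, the `sensors_to_fuse` policy, and `filter_samples` are illustrative assumptions, not Astro's actual rules. For instance, the article does not say which data is skipped in low-power mode.

```python
from enum import Enum, auto
from typing import List, Set, Tuple


class RobotMode(Enum):
    DRIVING = auto()
    LIFTED = auto()      # e.g. reported by a wheel-drop sensor (assumption)
    LOW_POWER = auto()


def sensors_to_fuse(mode: RobotMode) -> Set[str]:
    """Illustrative policy: which sensor streams the estimator consumes per mode."""
    if mode is RobotMode.LIFTED:
        # Free-spinning or stationary wheels no longer reflect the robot's motion.
        return {"camera", "imu"}
    if mode is RobotMode.LOW_POWER:
        # Hypothetical: skip costly camera processing, keep cheap inertial data.
        return {"imu"}
    return {"camera", "imu", "wheel_encoders"}


def filter_samples(mode: RobotMode, samples: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Drop (sensor_name, value) samples that the current mode says to ignore."""
    allowed = sensors_to_fuse(mode)
    return [sample for sample in samples if sample[0] in allowed]
```

Centralizing the policy in one function keeps the special cases out of the estimator itself, so adding a new mode means changing a single table-like function.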

Computational and memory limitations

Astro has finite onboard computational capacity and memory, which need to be shared among several critical systems. The Astro team developed a nonlinear optimization technique for “bundle adjustment” (the simultaneous refinement of the 3-D coordinates of the scene, the robot’s relative motion, and the camera’s optical characteristics) that is efficient enough to generate six-degree-of-freedom pose estimates multiple times per second.
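Bundle adjustment minimizes the reprojection error: the pixel distance between where each 3-D point is observed in an image and where the current estimates predict it should appear. Those estimates are the point's position, the camera pose (a 3-D rotation plus a 3-D translation, hence six degrees of freedom), and the camera intrinsics. This generic sketch evaluates that cost for a single pinhole camera. A solver such as Gauss-Newton or Levenberg-Marquardt would then adjust all of those parameters to reduce it. The function names and intrinsics are assumptions, and the article does not describe Astro's optimizer.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point: Vec3, rotation: Sequence[Sequence[float]], translation: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Pixel:
    """Pinhole projection of a world point into a camera with pose (R, t)."""
    # World -> camera frame: X_c = R @ X_w + t
    xc, yc, zc = (sum(rotation[r][k] * point[k] for k in range(3)) + translation[r]
                  for r in range(3))
    return fx * xc / zc + cx, fy * yc / zc + cy


def reprojection_cost(points: List[Vec3], observations: List[Pixel],
                      rotation: Sequence[Sequence[float]], translation: Vec3,
                      intrinsics: Tuple[float, float, float, float]) -> float:
    """Sum of squared pixel residuals, the quantity bundle adjustment minimises."""
    cost = 0.0
    for point, (u_obs, v_obs) in zip(points, observations):
        u, v = project(point, rotation, translation, *intrinsics)
        cost += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return cost
```

In a full problem, this cost is summed over many cameras and points. Each residual depends on only one pose and one point, and solvers commonly exploit that sparsity to keep each iteration affordable.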

Because Astro’s map of the home is constantly updated to accommodate changes in the environment, its memory footprint steadily grows, necessitating compression and pruning techniques that preserve the map’s utility while staying within on-device memory limits.
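One well-known pruning rule, used for keyframe culling in ORB-SLAM, discards a keyframe when about 90% of its landmarks are already observed by at least three other keyframes. This sketch applies that rule to a map represented, as an assumption, by a dictionary from keyframe ID to the set of landmark IDs it observes. It only illustrates redundancy-based pruning and does not describe Astro's compression scheme. The function name is hypothetical.

```python
from typing import Dict, Set


def cull_redundant_keyframes(keyframes: Dict[int, Set[int]],
                             min_other_views: int = 3,
                             redundancy: float = 0.9) -> Dict[int, Set[int]]:
    """Return a pruned copy of keyframes (keyframe ID -> observed landmark IDs).

    A keyframe is dropped when at least `redundancy` of its landmarks are also
    seen by `min_other_views` or more of the keyframes still in the map.
    """
    kept = {kf: set(lms) for kf, lms in keyframes.items()}
    for kf in sorted(keyframes, reverse=True):  # newest (highest ID) first
        landmarks = kept[kf]
        if not landmarks:
            del kept[kf]  # a keyframe that sees nothing adds nothing to the map
            continue
        well_covered = sum(
            1 for lm in landmarks
            if sum(1 for other, obs in kept.items() if other != kf and lm in obs)
            >= min_other_views
        )
        if well_covered / len(landmarks) >= redundancy:
            del kept[kf]
    return kept
```

Each deletion reduces how many views the remaining landmarks have, so the visiting order matters. Visiting newest first, as here, is an arbitrary choice.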

To that end, the Astro team designed a long-term-mapping system with multiple layers of contextual knowledge, ranging from high-level understanding (such as which rooms Astro can visit) to low-level understanding (such as differentiating the appearance of objects lying on the floor). This multilayer approach helps Astro efficiently recognize major changes to its operating environment while remaining robust enough to disregard minor ones.
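The idea of reacting to major changes while ignoring minor ones can be sketched with a hypothetical two-layer map: a room layer that records whether a room is reachable, and a landmark layer beneath it. A visit that misses only a few stored landmarks (a moved bag) counts as minor and leaves the map alone. A visit that misses most of them (rearranged furniture) triggers a re-map of that room's lower layer. The class, the function names, and the 50% threshold are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class RoomLayer:
    """Hypothetical map node: room-level facts on top, landmark IDs underneath."""
    name: str
    reachable: bool = True                            # high-level layer
    landmarks: Set[int] = field(default_factory=set)  # low-level layer


def classify_change(room: RoomLayer, observed: Set[int], major_fraction: float = 0.5) -> str:
    """Label a new visit to a room as a "none", "minor", or "major" change."""
    if not room.landmarks:
        return "major" if observed else "none"
    missing = room.landmarks - observed
    fraction_missing = len(missing) / len(room.landmarks)
    if fraction_missing == 0:
        return "none"
    return "major" if fraction_missing >= major_fraction else "minor"


def apply_visit(room: RoomLayer, observed: Set[int]) -> str:
    """Re-map the room's landmark layer only when the change is major."""
    change = classify_change(room, observed)
    if change == "major":
        room.landmarks = set(observed)
    return change
```

Because minor changes never touch the stored layer, transient clutter does not churn the map. Only sustained, large differences cause an update.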

All these updates happen on-device, without any cloud processing. A constantly updated representation of the customer’s home allows Astro to robustly and effectively localize itself over months.

In creating this new category of home robot, the Astro team used deep learning and built on state-of-the-art computational-geometry techniques to give Astro spatial intelligence far beyond that of simpler home robots. The Astro team will continue innovating to ensure that Astro learns new ways to adapt to more homes, helping customers save time in their busy lives.