Joe Tighe, senior manager for computer vision at Amazon Web Services, is a coauthor on two papers being presented at this year’s Winter Conference on Applications of Computer Vision (WACV), and as he prepares to attend the conference, he sees two major trends in the field of computer vision.

“One is Transformers and what they can do, and the other is self-supervised or unsupervised learning and how we can apply that,” Tighe says.

The Transformer is a neural-network architecture that uses attention mechanisms to improve performance on machine learning tasks. When processing part of a stream of input data, the Transformer attends to data from other parts of the stream, which influences its handling of the data at hand. Transformers have enabled state-of-the-art performance on natural-language-processing tasks because of their ability to model long-range correlations — recognizing, for instance, that the name at the start of a sentence might be the referent of a pronoun at the sentence’s end.
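The attention step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the architecture used in any of the work discussed here: it assumes identity query, key, and value projections and a single attention head, whereas real Transformers learn those projections and run several heads in parallel. The names `self_attention`, `softmax`, and the toy `tokens` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (an assumption).

    tokens: list of equal-length float vectors, one per position in the stream.
    Returns (outputs, weights); weights[i][j] is how strongly position i
    attends to position j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Compare this position against every position, near or far.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted mix of all positions' vectors.
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, tokens)) for d in range(dim)]
        )
    return outputs, weights

# Toy stream: positions 0 and 3 carry similar vectors, standing in for a
# name at the start of a sentence and a pronoun at its end.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.9, 0.1]]
outputs, weights = self_attention(tokens)
```

In this toy stream, the last position puts more weight on the distant first position than on its immediate neighbors, which is the long-range behavior the paragraph describes.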

In visual data, on the other hand, locality tends to matter more: usually, the value of a pixel is more strongly correlated with those of the pixels around it than with pixels that are farther away. Computer vision has traditionally relied on convolutional neural networks (CNNs), which step through an image applying the same set of filters — or kernels — to each patch of an image. That way, the CNN can find the patterns it’s looking for — say, visual characteristics of dog ears — wherever in the image they occur.
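To make the sliding-filter idea concrete, here is a small sketch in plain Python. It assumes a single hand-written 2x2 kernel and a 5x5 image; a real CNN learns many kernels and stacks many layers. The helper names `conv2d`, `place`, and `argmax2d` are hypothetical.

```python
def conv2d(image, kernel):
    """Valid cross-correlation: apply the same kernel to every patch of the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def place(pattern, row, col, size=5):
    """Return a blank size x size image with the pattern pasted at (row, col)."""
    image = [[0] * size for _ in range(size)]
    for i, line in enumerate(pattern):
        for j, value in enumerate(line):
            image[row + i][col + j] = value
    return image

def argmax2d(grid):
    """Return the (row, col) of the largest response in a 2D grid."""
    cells = ((r, c) for r in range(len(grid)) for c in range(len(grid[0])))
    return max(cells, key=lambda rc: grid[rc[0]][rc[1]])

# Hypothetical pattern the filter is tuned to (a stand-in for "dog ears").
pattern = [[1, 0], [0, 1]]

# The same kernel finds the pattern whether it sits top-left or bottom-right.
top_left = argmax2d(conv2d(place(pattern, 0, 0), pattern))
bottom_right = argmax2d(conv2d(place(pattern, 3, 3), pattern))
```

Because the kernel weights are shared across positions, the strongest response simply moves with the pattern.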

“We've been successful in basically achieving the same accuracy as convolutional networks with these Transformers,” Tighe says. “And we maintain that locality constraint by, for instance, feeding in patches of images, because with a patch, you have to be local. Or we start out with a CNN and then feed mid-level features from the CNN into the Transformer, and then you let the Transformer go and relate any patch to any other patch.

“But I don't think what Transformers are going to bring to our field is higher accuracy for just embedding images. What they are incredibly good at — and we’re already seeing strong results — is processing structured data.”

For instance, Tighe explains, Transformers can more naturally infer object permanence: determining that a collection of pixels in one frame of video designates the same object as a different collection of pixels in a different frame.

This is crucial to a number of video applications. For instance, determining the semantic content of a film or TV show requires recognizing the same characters across different shots. Similarly, Amazon Go — the Amazon service that enables checkout-free shopping in physical stores — needs to recognize that the same customer who picked up canned peaches on aisle three also picked up raisin bran on aisle five.

“To understand a movie, we can't just send in frames,” Tighe says. “One of the things my group is doing — as well as a lot of different groups — is using Transformers to take in audio information, take in text, like subtitles, and take in the visual information, the movie content, into one framework. Because what you see is only half of it. What you hear is as, if not more, important for understanding what's going on in these movies. I see Transformers as a powerful tool to finally not have ad hoc ways to combine audio, text, and video together.”

Contrastive learning

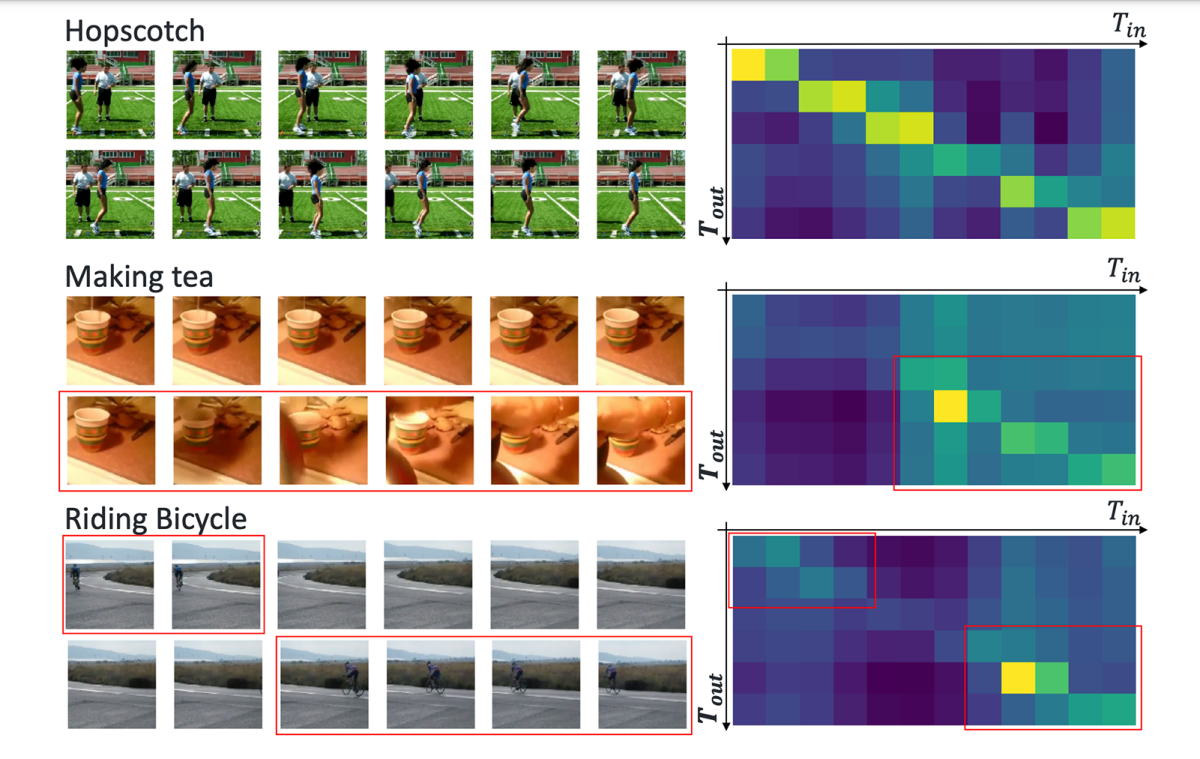

On the topic of unsupervised and self-supervised learning, Tighe says, the most interesting recent development has been the exploration of contrastive learning. With contrastive learning, a neural network is fed pairs of inputs, some from the same class and some from different classes, and it learns to produce embeddings — vector representations — that cluster instances of the same class together and separate instances of different classes. The trick is to do this with unlabeled data.
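One common way to turn this objective into a loss function is the InfoNCE formulation, sketched below in plain Python. The article does not say which loss Tighe's group uses, so treat this as an assumption: the function name `info_nce`, the `temperature` value, and the toy two-dimensional embeddings are all illustrative.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Negative log-probability of picking the positive among all candidates.

    The loss is small when the anchor is close to its positive and far
    from every negative, and large otherwise.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    peak = max(logits)  # subtract the max for numerical stability
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[0]

anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
good = info_nce(anchor, [0.9, 0.1], negatives)  # positive embedded near the anchor
bad = info_nce(anchor, [0.1, 0.9], negatives)   # positive embedded near a negative
```

Minimizing this loss pulls the two views of the same input together and pushes the other embeddings away, which is the clustering behavior described above.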

“If you take an image, and then you augment it, you change its color, you take a really aggressive crop, you add a bunch of noise, then you have two examples,” Tighe explains. “You put those both through the network and you say, ‘These two things are the same thing.’ You can be very aggressive with your augmentations. So when you get, say, a crop of a dog's head and a crop of a dog's tail, you're telling the network these are semantically the same object. And so it needs to learn high-level semantics of dog parts.

“But you also need to push them apart from something else. It’s easy to find examples that are far away already, but that doesn’t help the network learn. What we really need is to find the closest example and push away from that. So I think one of the key innovations here is that you have this large bank of image embeddings that you should push against. The network is going to pick out the really hard examples, the ones that it naturally is embedding very close together. It's going to try and push those apart, and that's how this embedding is learned very well.

“Then at the end, when you're going to test how well it does, you just train a single linear layer with all your labeled data. The idea is, if this works, we should be able to throw the world of images at one of these systems, train the ultimate embedding that can describe the entire world, and then, with our specific task in mind, just with a little bit of data, train that last layer and have very high performance.”
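The "closest example" idea from the quote can be illustrated by ranking a bank of stored embeddings by similarity to the current one. This is only a sketch under stated assumptions: it mines hard negatives explicitly with cosine similarity, whereas in the systems Tighe describes the loss itself tends to emphasize the nearby examples. The function `hardest_negatives`, the parameter `k`, and the toy `bank` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def hardest_negatives(anchor, bank, k=2):
    """Return indices of the k bank embeddings most similar to the anchor.

    These are the examples the network currently confuses with the anchor,
    so pushing away from them carries the most learning signal.
    """
    ranked = sorted(
        range(len(bank)),
        key=lambda i: cosine(anchor, bank[i]),
        reverse=True,
    )
    return ranked[:k]

anchor = [1.0, 0.0]
bank = [[-1.0, 0.0], [0.8, 0.6], [0.0, 1.0], [0.95, 0.3]]
hard = hardest_negatives(anchor, bank)
```

Here the embedding at index 0 is already far from the anchor and is ignored, while indices 3 and 1 sit close to it and are selected as the examples to push against.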

Action recognition

In his own papers at WACV, Tighe and his colleagues are exploring both attention mechanisms and semi-supervised learning — although not exactly Transformers and contrastive learning.

“One WACV paper is looking at how we use the Transformer mechanism of self-attention to aggregate temporal information,” he explains. “It's actually a CNN, but then we use that self-attention mechanism to aggregate information across the whole video. So we get the ability to share information globally inside this network as well.

“The other one is looking at, if you have a dictionary of actions, how can you predict the different actions that are occurring by looking at a bunch of events? One of the datasets we look at is gymnastics. So if we look at the floor plan for a gymnastics event, and you have a number of examples of that, we predict the fine-grain actions like a flip or turnover that happened without supervision of those fine-grain actions.”

As for what the future may hold, “what's really missing from video research is around how you model the temporal dimension,” Tighe says. “And I'm not claiming to know what that means yet. But it's inherently a different signal; it can't just be treated like another space dimension.”