At Amazon, we constantly seek ways to optimize software development tools, processes, and practices in order to improve outcomes and experiences for our customers. Internally, Amazon has the variety of businesses, team sizes, and technologies to enable research on engineering practices that span a wide variety of circumstances. Recently, we've been exploring how generative artificial intelligence (genAI) affects our cost-to-serve-software (CTS-SW) metric. This post delves into the research that led to CTS-SW’s development, how various new AI-powered tools can lower CTS-SW, and our future plans in this exciting area.

Understanding CTS-SW

We developed cost to serve software as a metric to quantify how investments in improving the efficiency of building and supporting software enable teams to easily, safely, and continually deploy software to customers. It bridges the gap between our existing framework, which tracks many metrics (similar to DORA and SPACE), and the quantifiable bottom-line impact on the business. It allows developer experience teams to express their business benefits in either effective capacity (engineering years saved) or the monetary value of those savings. In a recent blog post on the AWS Cloud Enterprise Strategy Blog, we described how CTS-SW can evaluate how initiatives throughout the software development lifecycle affect the ability to deliver for customers.

At a high level, CTS-SW tracks the dollars spent per unit of software reaching customers (i.e., released for use by customers). The best unit of software to use varies based on the software architecture. Deployment works well for microservices. Code reviews or pull requests that are shipped to a customer work well for monolith-based teams or software whose release is dictated by a predetermined schedule. Finally, commits that reach customers make sense for teams that contribute updates to a central code “trunk”. We currently use deployments, as it fits our widespread use of service-oriented architecture patterns and our local team ownership.

CTS-SW is based on the same theory that underlies the cost-to-serve metric in Amazon’s fulfillment network, i.e., that the delivery of a product to a customer is the result of an immeasurably complex and highly varied process and would be affected by the entirety of any changes to it. That process is so complex, and it changes so much over time, that the attempt to quantify each of its steps and assign costs to them, known as activity-based costing, is likely to fail. This is especially true of software engineering today, as new AI tools are changing the ways software engineers do their jobs.

Cost to serve simplifies this complex process by modeling only the input costs and the output units. We can then work backwards to understand drivers and opportunities for improvement.
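As a rough illustration of the input-cost-over-output-units idea, the ratio can be sketched in a few lines of Python. The function name and all figures below are hypothetical and chosen only to show the arithmetic; they are not Amazon data, and the real metric involves cost accounting that this sketch does not attempt.

```python
def cost_to_serve_software(total_cost_usd: float, units_delivered: int) -> float:
    """Dollars spent per unit of software that reached customers.

    `units_delivered` could be deployments, shipped code reviews, or commits,
    depending on the team's architecture.
    """
    if units_delivered <= 0:
        raise ValueError("units_delivered must be positive")
    return total_cost_usd / units_delivered


# Hypothetical figures for one team over one quarter (illustrative only).
baseline = cost_to_serve_software(total_cost_usd=1_200_000.0, units_delivered=400)
improved = cost_to_serve_software(total_cost_usd=1_200_000.0, units_delivered=480)

# Same spend, more deployments reaching customers: the metric falls.
relative_change = (improved - baseline) / baseline
print(f"baseline={baseline:.0f} improved={improved:.0f} change={relative_change:.1%}")
```

Because only total inputs and total outputs enter the ratio, any change that lets the same spend produce more delivered units shows up in the metric, whichever step of the process it touched.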

In the context of software development, working backwards means that we investigate changes that could affect the metric, beyond the core coding experience of working in an IDE and writing logic. We also include continuous integration/continuous delivery (CI/CD) practices, work planning, incident management practices, maintenance of existing systems, searching for information, and many other factors that characterize software development at Amazon. By working backwards, we look across the collective software builder experience and investigate how changes in different areas, such as reducing the number of alarms engineers receive, affect developers’ ability to build new experiences for customers. We have used a variety of research methods to explore these relationships, but we have primarily relied on mathematical models.

From a science perspective, Amazon is an interesting place in which to build these models because of our established culture of small software teams that manage their own services. A longstanding Amazon principle is that these teams should be small enough to be fed by two pizzas, so we refer to them as “two-pizza teams”. This local-ownership model has led to the creation of thousands of distinct services solving customer problems across the company.

Amazon’s practice of working backwards from the best possible customer experience means software teams choose the optimal combination of tooling and technology to enable that experience. These choices have led to the implementation of many different software architectures at Amazon. That variety offers an opportunity to explore how different architectures affect CTS-SW.

The Amazon Software Builder Experience (ASBX) team, our internal developer experience team, has access to rich telemetry data about these architectures and different ways of working with them. Using this data, we created a panel dataset representing the work of thousands of two-pizza teams over the past five years and including features we thought could affect CTS-SW. We model CTS-SW using the amount of developer time — the largest component of CTS-SW — per deployment. This data offers an opportunity for modeling the complete process from inception to delivery at a scale rarely seen in developer experience research.

Last year, as a first exploration of this dataset, we fit a set of linear mixed models to CTS-SW to identify other metrics and behaviors that are highly correlated with it. Within ASBX, we were looking for input metrics that teams could optimize to lower CTS-SW. Correlations found with linear mixed models can also point to candidate causal links between the models’ factors and CTS-SW, which can then be tested with causal methods. Linear mixed models are a good fit for this sort of problem because they have two components: one that captures the underlying relation between the outcome variable and the predictors, irrespective of team, and one that captures differences across teams.
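For readers unfamiliar with the two-component structure, one common form is the random-intercept model below. This is a generic textbook sketch, not the specification we fit: the symbols $\mathbf{x}_{it}$, $\boldsymbol{\theta}$, $u_i$, and the variance terms are assumptions introduced here for illustration.

```latex
y_{it} = \underbrace{\mathbf{x}_{it}^{\top}\boldsymbol{\theta}}_{\text{shared across teams}}
       + \underbrace{u_i}_{\text{team-specific}}
       + \varepsilon_{it},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\quad \varepsilon_{it} \sim \mathcal{N}(0, \sigma^2)
```

Here $y_{it}$ would be the outcome for team $i$ in period $t$, the first term captures the relation between predictors and outcome irrespective of team, and $u_i$ captures how each team departs from that shared relation.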

Once we’d fit our models, we found that the following input metrics stood out as being the largest potential drivers of CTS-SW after a sensitivity analysis:

- Team velocity: This measures how many code reviews (CRs) a software team merges each week per developer on the team. Teams that check in more code have a lower CTS-SW. Our science validates that software is a team sport, and framing this as a team-level outcome instead of an individual one prevents using CR flow as a performance metric for individual engineers. Having strong engineering onboarding and deployment safety helps teams reach and sustain high velocity. This was our largest single predictor of CTS-SW.

- Delivery health (interventions per deploy, rollback rates): We find that teams that have implemented CI/CD with automation and change safety best practices have better CTS-SW outcomes. Our data demonstrates that when you spend less time wrestling with deployment friction and more time creating value, both productivity and job satisfaction improve.

- Pages per on-call builder: This measures how many pages a team gets per week. We find that an increase in paging leads to lower CTS-SW, as paging can result in a deployment to production. However, we believe that work done in this reactive way may not be the most useful to customers in the long term. Understanding how this urgent, unplanned work interacts with new-feature delivery is an area for future research.
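The three input metrics above are simple ratios over a team's weekly activity. The sketch below shows one plausible way to compute them; the `TeamWeek` record, its field names, and the sample values are hypothetical, and normalizing pages by the number of on-call builders is an assumption based on the metric's name.

```python
from dataclasses import dataclass


@dataclass
class TeamWeek:
    """One team's activity in one week (hypothetical schema)."""
    developers: int
    crs_merged: int
    deployments: int
    manual_interventions: int
    rollbacks: int
    pages: int
    on_call_builders: int


def input_metrics(week: TeamWeek) -> dict:
    """Compute team-level input metrics; nothing here is per individual engineer."""
    return {
        "team_velocity": week.crs_merged / week.developers,
        "interventions_per_deploy": week.manual_interventions / week.deployments,
        "rollback_rate": week.rollbacks / week.deployments,
        "pages_per_on_call_builder": week.pages / week.on_call_builders,
    }


# Illustrative values only, not real telemetry.
week = TeamWeek(developers=8, crs_merged=24, deployments=20,
                manual_interventions=5, rollbacks=1, pages=6, on_call_builders=2)
metrics = input_metrics(week)
print(metrics)
```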

Our research has shown strong relationships between development factors and CTS-SW, making it an effective tool for measuring software development efficiency. We are working to expand the data we use in these models to better capture the ways in which teams build and operate their services. With this data, we will investigate the effects of software architecture decisions, informing architecture recommendations for teams across Amazon.

Validating linear mixed models with causal inference

Once we found that model fitting implied a correlation between team velocity and CTS-SW, we started looking for natural experiments that would help us validate the correlation with causal evidence. The rapidly emerging set of generative AI-powered tools provided that set of natural experiments.

The first of these tools adopted at scale across Amazon was Amazon Q Developer. This tool automatically generates code completions based on existing code and comments. We investigated the tool’s effect on CR velocity by building a panel regression model with dynamic two-way fixed effects.

This model uses time-varying covariates based on observations of software builder teams over multiple time periods during a nine-month observation window, and it predicts either CR velocity or deployment velocity. We specify the percentage of the team using Q Developer in each week and pass that information to the model as well.

We also evaluate other variables passed to the model to make sure they are exogenous, i.e., not influenced by Q Developer usage, to ensure that we can make claims of a causal relationship between Q Developer usage and deployment or CR velocity. These variables include data on rollbacks and manual interventions in order to capture the impact of production and deployment incidents, which may affect the way builders are writing code.

Here’s our model specification:

$$y_{it} = a_i + \lambda_t + \beta y_{i,t-1} + \gamma X_{it} + \varepsilon_{it}$$

In this equation, $y_{it}$ is the normalized deployments per builder week or team weekly velocity for team $i$ at time $t$, $a_i$ is the team-specific fixed effect, $\lambda_t$ is the time-specific fixed effect, $y_{i,t-1}$ is the lagged normalized deployments or team velocity, $\beta$ is the persistence of our dependent variable over time (i.e., it shows how much of the past value of $y$ carries over into the current period), $X_{it}$ is the vector of time-varying covariates (Q Developer usage rate, rollback rate, manual interventions), $\gamma$ is the vector of coefficients on those covariates, and $\varepsilon_{it}$ is the error term.
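To make the mechanics of the specification concrete, here is a minimal standard-library sketch of how team and time fixed effects can be removed from a balanced panel by double demeaning, after which the persistence and covariate coefficients are recovered by least squares. It is not our production model: the function names are hypothetical, the data is synthetic and noise-free, there is a single covariate (standing in for the Q Developer usage rate), and the sketch reports no standard errors and ignores the known small-sample issues with lagged outcomes in fixed-effects models.

```python
from statistics import fmean


def double_demean(panel):
    """Two-way within transformation on a balanced panel (rows: teams, cols: weeks).

    Subtracting team means and week means, then adding back the grand mean,
    removes any additive team effect and any additive week effect.
    """
    n_teams, n_weeks = len(panel), len(panel[0])
    team_means = [fmean(row) for row in panel]
    week_means = [fmean([panel[i][t] for i in range(n_teams)]) for t in range(n_weeks)]
    grand_mean = fmean(team_means)
    return [[panel[i][t] - team_means[i] - week_means[t] + grand_mean
             for t in range(n_weeks)] for i in range(n_teams)]


def flatten(panel):
    return [value for row in panel for value in row]


def fit_dynamic_twfe(y, x):
    """Estimate (persistence, covariate coefficient) with team and week effects removed.

    `y` has one more column than `x`: column 0 of `y` is the initial period,
    which is used only as the first lagged value.
    """
    y_now = flatten(double_demean([row[1:] for row in y]))
    y_lag = flatten(double_demean([row[:-1] for row in y]))
    x_now = flatten(double_demean(x))
    # Normal equations for a two-regressor least-squares fit without intercept.
    s_ll = sum(a * a for a in y_lag)
    s_lx = sum(a * b for a, b in zip(y_lag, x_now))
    s_xx = sum(b * b for b in x_now)
    s_ly = sum(a * c for a, c in zip(y_lag, y_now))
    s_xy = sum(b * c for b, c in zip(x_now, y_now))
    det = s_ll * s_xx - s_lx * s_lx
    beta = (s_ly * s_xx - s_xy * s_lx) / det
    gamma = (s_ll * s_xy - s_lx * s_ly) / det
    return beta, gamma


# Synthetic, noise-free panel: 6 teams, 8 weeks plus an initial period.
TRUE_BETA, TRUE_GAMMA = 0.4, 0.8
n_teams, n_weeks = 6, 8
x = [[((i + 1) * t % 7) / 7 for t in range(1, n_weeks + 1)] for i in range(n_teams)]
y = []
for i in range(n_teams):
    row = [0.3 * i]  # initial period
    for t in range(1, n_weeks + 1):
        # team effect + week effect + persistence + covariate effect
        row.append(0.3 * i + 0.1 * t + TRUE_BETA * row[-1] + TRUE_GAMMA * x[i][t - 1])
    y.append(row)

beta_hat, gamma_hat = fit_dynamic_twfe(y, x)
print(f"beta_hat={beta_hat:.4f} gamma_hat={gamma_hat:.4f}")
```

Because the synthetic data contains no error term, the fit should recover the coefficients used to generate it up to floating-point error; with real, noisy telemetry a dedicated panel-regression library and appropriate inference would be the more sensible choice.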

Early evidence shows that Q Developer has accelerated CR velocity and deployment velocity. More important, we found causal evidence that the launch of a new developer tool can lower CTS-SW for adopting teams and that we can measure that impact. As agentic AI grows, there will be agents for a range of tasks that engineers perform, beyond just writing code. That will require a unit of measurement that can capture their contributions holistically, without overly focusing on one area. CTS-SW enables us to measure the effects of AI across the software development lifecycle, from agents giving feedback on design docs to agents suggesting fixes to failed builds and deployments.

The road ahead

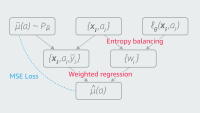

We recognize that combining experimental results can sometimes overstate an intervention’s true impact. To address this, we're developing a baseline model that we can use to normalize our tool-based approach to ensure that our estimates of AI impact are as accurate as possible.

Looking ahead, we plan to expand our analysis to include AI's impact on more aspects of the developer experience. By leveraging CTS-SW and developing robust methodologies for measuring AI's impact, we're ensuring that our AI adoption is truly customer obsessed, in that it makes Amazon’s software development more efficient. As we continue to explore and implement AI solutions, we remain committed to using data-driven approaches to improve outcomes and experiences for our customers. We look forward to sharing our findings with you at a later date.