Amazon researchers have nine new papers at this year’s International Conference on Machine Learning (ICML), one of the top conferences in AI. Matthias Seeger, a principal applied scientist with Amazon Web Services (AWS), is a coauthor on one of them, which reports work led by AWS applied scientist Cuong Nguyen.

But it’s a paper that Seeger cowrote ten years ago that’s one of the conference highlights. On July 1, the ICML awards committee announced that Seeger and his colleagues’ 2010 paper “Gaussian process optimization in the bandit setting: no regret and experimental design” had won the conference’s Test of Time Award, which honors “a paper from ICML ten years ago that has had substantial impact on the field of machine learning, including both research and practice.”

The citation from the award committee begins, “This paper brought together the fields of Bayesian optimization, bandits, and experimental design,” and it “has since cross-fertilized these separate research domains,” Seeger adds.

Bayesian-optimization and bandit problems have the same general structure, Seeger explains, but “Bayesian optimization is generally done over continuous input spaces and more complicated functions,” he says. “Multi-armed bandits would normally assume finite spaces and linear or otherwise strongly restricted payoff functions. Maybe because Bayesian optimization is more flexible in this sense, it comes with a lot less solid theory. Multi-armed bandits is a more theoretically grounded area.”

Seeger and his colleagues’ 2010 paper generalized theoretical findings from the multi-armed bandit setting to Bayesian optimization (BO), providing strong performance bounds given particular choices of statistical models. This gave machine learning practitioners greater confidence in techniques they’d arrived at empirically and helped them identify circumstances in which those techniques might be less successful.

In the context of deep learning — which now dominates the field of artificial intelligence — BO is used for hyperparameter tuning, or optimizing structural features of the deep-learning model and parameters of the learning algorithm to maximize the efficacy of training on particular data.

To prove their result for BO, Seeger and his colleagues extended techniques borrowed from a third related field, experimental design. The tools they devised to bridge the related disciplines of BO, multi-armed bandits, and experimental design have proved useful to researchers working in all three; the paper has more than 1,000 citations on Google Scholar, which have helped make Seeger the fourth most highly cited researcher in the field of Bayesian optimization.

Seeger’s coauthors on the 2010 paper are Niranjan Srinivas, now a computational biologist at 10xGenomics; Andreas Krause, now a professor of computer science at ETH Zurich; and Sham Kakade, now a professor in the departments of computer science and statistics at the University of Washington.

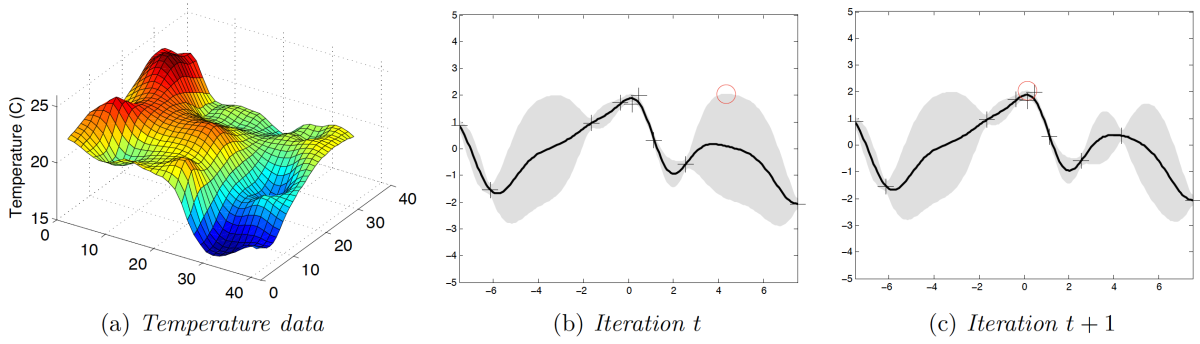

With Bayesian optimization, Seeger explains, “you are essentially optimizing a function over some search space without actually knowing what this function looks like. You have to learn about that function as you sample it. But your real goal is finding the function’s maximum, or to sample it nearby.”

“If you sample forever, at some point you will find its optima” he adds. “But since sampling is expensive and takes time, you want to finish as rapidly as possible. So what you are really interested in is to spend as few samples as possible before you converge to something useful, very close to the optimum.”

Seeger and his colleagues proved that, under conditions that frequently hold for machine learning problems, the sampling process is guaranteed to converge. But they also showed that the convergence rate depends on specific problem parameters.

In BO, the function that you’re trying to optimize is a random function, Seeger explains. “Every time you plug in a point x, you get a random value f(x),” he says. A standard way to do BO is to model the outputs of the function using a probability distribution. If that distribution is Gaussian — the standard bell curve — then Bayesian optimization is said to use a Gaussian process as a surrogate model.

One of the parameters of the surrogate model is its covariance function, which describes the correlation between changes to function inputs (the x’s) and the resulting changes to the outputs (the f(x)’s). There are several families of covariance function, with an infinite range of functions within each family.

Seeger and his colleagues’ paper quantitatively relates the convergence rate of the function-sampling procedure to the specific choice of covariance function.

“Some choices of covariance function imply smooth functions, which can faithfully be interpolated from measurements nearby,” Seeger says. “Others result in rough functions, for which interpolating even across short distances is an uncertain exercise.”

From theory to practice

Machine learning researchers had conjectured that rougher covariance functions, although they may model reality better, imply slower convergence on an optimum. Seeger and his colleagues’ paper quantified this trade-off precisely. It enabled researchers to decide how much roughness they were willing to sacrifice for faster convergence.

To bound convergence rates, Seeger and his colleagues borrowed techniques from experimental design. “Here you are interested in learning about a function, but not just where its maximum is — learning about it globally, or learning about a model everywhere,” Seeger explains.

A central concept in experimental design is information gain, a quantification of how much information about a function each new sample — each new experiment — confers. Seeger and his colleagues quantified the convergence rate in BO by framing the question in terms of information gain.

“It’s pretty theoretical work,” Seeger says. “It led to a lot of follow-up work because of the links that we could show. There is quite a bit of Gaussian-process theory being brought together here, which partly fuses the different themes together.”

At Amazon, Seeger says, his work on meta-learning and automated machine learning is less theoretical, but his training still stands him in good stead. “I do think that the background that I have from back then is quite useful for me to plan ahead, in a way, and to see whether something looks right or not,” Seeger says. “I think this is quite important, because due to the explosive growth of our field, there are now so many options you can pursue. You really cannot implement all of them and try them out. You need to have some guiding principles.”

Seeger’s transition from more theoretical to more applied work mirrors that of the field itself. In the ten years since he cowrote this award-winning paper, he says, “there’s been a huge change, with the size of the field, the practical applications, the size of the models, the size of the data sets.”

“Back then, maybe because it was smaller, there were ideas being followed that wouldn’t immediately have an application, and you were actually looking into theory quite a bit,” he says. “These days, it’s a lot more driven by empirical applications and empirical work. The field has matured and is very successful in some applications, so obviously you want to focus more on whether an idea is useful in the current context or not. And that’s certainly something that I’ve learned to do a lot more since I joined Amazon.”