The first Echo Show represented an entirely new way to interact with Alexa; she could show you things on a screen controlled by voice. Being able to easily see your favorite recipe, watch your flash briefing, or video call with a friend is delightful — but we thought we could add even more to the experience. Our screens are stationary, but we are not. So with Echo Show 10, we asked ourselves: how can we keep the screen in view, no matter where you are in the room? The answer: it has to move.

Creating a device that can move intelligently in a way that improves the Alexa experience and is not distracting was no easy task. We had to consider when, where, and how to incorporate motion into Echo Show to make it feel like a natural extension of how customers experience Alexa.

Combining audio and computer vision algorithms

When you say “Alexa” to any Echo Show device today, you’ll see a blue light bar on screen. The lighter part of that blue light bar approximates the direction the device chooses to focus; we call this beam selection. Echo devices try to select the beam that gives the best accuracy for recognizing what was said.

However, what works for beam selection doesn’t work best for guiding motion. Noises, multiple speakers, or sound reflections from walls and other surfaces can prevent these algorithms from selecting the beam that best represents the direction of the talker. And with audio-only output, it doesn’t matter if Echo’s input system has selected a different beam: the user still hears Alexa’s response. But a screen that’s constantly moving around to avoid these echoes and noises would be a severe distraction.

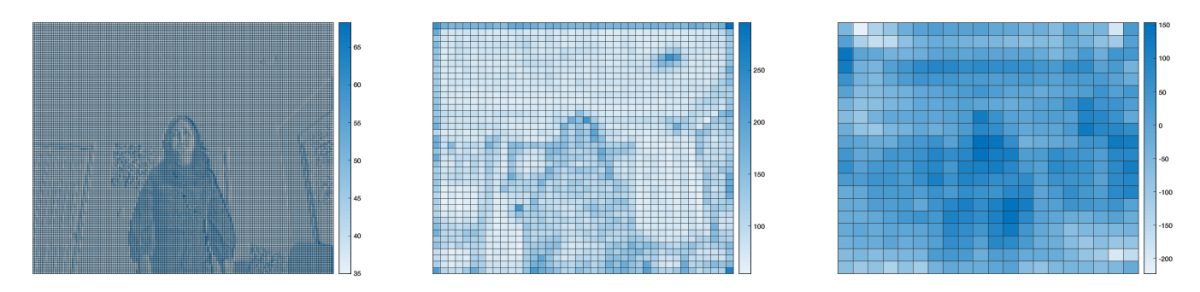

With Echo Show 10, we solve this problem by combining sound source localization (SSL) with computer vision (CV). Our implementation of SSL uses acoustic-wave-decomposition and machine-learning techniques to determine the direction in which the user is most probably located. Then, the raw SSL measurements are fused with our CV algorithms.

The CV algorithms can identify objects and humans in the field of view, enabling the device to differentiate between sounds coming from people and those coming from other sources and reflections off walls. Sometimes audio can reflect from behind the device, so we added a setup step in which customers set the device’s range of motion. If the device can ignore sounds originating outside its range of motion, it’s better able to avoid reflections and narrow down the direction of the wake word.
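To make the idea concrete, here is a minimal sketch of how sound-direction candidates might be combined with person detections and a configured range of motion. It is an illustration only, not the device's actual fusion logic: the names `SslCandidate` and `pick_direction`, the scoring bonus, and the matching tolerance are all assumptions, and angles are assumed to lie in [-180, 180) degrees relative to the device's home position.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SslCandidate:
    angle_deg: float  # estimated direction of the sound (hypothetical convention)
    score: float      # hypothetical confidence in [0, 1]


def angular_distance(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def pick_direction(
    candidates: List[SslCandidate],
    person_angles_deg: List[float],
    motion_range_deg: Tuple[float, float],
    match_tolerance_deg: float = 15.0,  # assumed value
    person_bonus: float = 0.5,          # assumed value
) -> Optional[float]:
    """Return the most plausible talker direction, or None if nothing qualifies."""
    lo, hi = motion_range_deg
    best_angle, best_score = None, float("-inf")
    for c in candidates:
        # Ignore sounds outside the range of motion set by the customer,
        # such as reflections arriving from behind the device.
        if not (lo <= c.angle_deg <= hi):
            continue
        score = c.score
        # Favor sound directions that line up with a person seen by the camera.
        if any(angular_distance(c.angle_deg, p) <= match_tolerance_deg
               for p in person_angles_deg):
            score += person_bonus
        if score > best_score:
            best_angle, best_score = c.angle_deg, score
    return best_angle
```

In this sketch, a loud reflection at 170 degrees is dropped when the range of motion is (-90, 90), and a quieter candidate at 30 degrees wins over a louder one at -40 degrees if the camera sees a person near 30 degrees.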

The CV algorithms turn the camera image into hundreds of data points representing shapes, edges, facial landmarks, and general coloring; then the image is deleted permanently. These data points cannot be reverse-engineered to the original input, and no facial-recognition technology is used. All of this processing happens in a matter of milliseconds, entirely on-device.

The device’s computer vision service (CVS) can dynamically vary the frame rate (the number of frames per second), and it operates with over 95% precision at distances of up to 10 feet. The CVS uses spatiotemporal filtering to suppress ephemeral false positives caused by camera motion and blur. In a multiuser environment, engagement detection — determining which user is facing the device — helps us further target the screen to the relevant user or users.
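One simple way to picture the suppression of ephemeral false positives is a persistence filter: a detection only counts once something has been seen near the same spot in several recent frames. The sketch below is a simplified stand-in, not the CVS implementation; the class name `PersistenceFilter`, the window size, and the thresholds are assumptions, and it does not compensate for the camera's own motion as a real system would need to.

```python
from collections import deque
from typing import Deque, List, Tuple

Point = Tuple[float, float]  # detection center, normalized to [0, 1] (assumed format)


class PersistenceFilter:
    """Confirm a detection only if it persists across recent frames."""

    def __init__(self, window: int = 5, min_hits: int = 3, max_shift: float = 0.1):
        self.history: Deque[List[Point]] = deque(maxlen=window)
        self.min_hits = min_hits    # frames in the window that must contain a match
        self.max_shift = max_shift  # how far a detection may drift between frames

    def update(self, detections: List[Point]) -> List[Point]:
        """Add the current frame's detections and return the confirmed ones."""
        self.history.append(list(detections))
        confirmed = []
        for (x, y) in detections:
            # Count the frames (including the current one) with a nearby detection.
            hits = sum(
                any(abs(x - px) <= self.max_shift and abs(y - py) <= self.max_shift
                    for (px, py) in frame)
                for frame in self.history
            )
            if hits >= self.min_hits:
                confirmed.append((x, y))
        return confirmed
```

A detection that flickers into a single blurred frame never reaches `min_hits`, while a person who stays in roughly the same place is confirmed after a few frames.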

Defining the experience

With our algorithms built, the next step was to orchestrate the ideal customer experience. We started by capturing data from internal beta participants and product teams. Amazon employees tested Echo Show 10 in their homes, and before the hardware was even ready, we used virtual reality to gather early input on which movements felt most natural, the preferred speed of motion, and so on. What we learned was invaluable.

First, knowing when not to move is just as important as knowing when to move. We wanted customers to be able to manually redirect the screen. But that meant distinguishing between the pressure applied by someone scrolling through a recipe while making dinner and someone physically trying to move the device. The device also needed to know that if it turned in one direction and hit something — a wall, cabinet, etc. — it should not continue to go in that direction.

This required a motor resistance — or “back drive” — that could kick in, or not, depending on the user’s movement. A lot of fine-tuning went into getting that distinction and timing right.

We also had to determine a speed and acceleration that felt natural. The motor allows us to accelerate at up to 360 degrees/second² to a speed of up to 180 degrees/second. However, at that speed, in a typical in-home environment, you risk knocking over a glass or a picture frame that might be near the device. Move too slowly, on the other hand, and you might try the customer’s patience, and even risk spurious stall detection. We settled on a speed that was quick but also allowed the device to stop short if it bumped an object.
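A bit of arithmetic shows why the motor's maximum values are too aggressive for a kitchen counter. The sketch below uses only the two figures quoted above and basic constant-acceleration kinematics; the function names are ours, and it assumes the device decelerates at the same rate it accelerates, which the text does not state.

```python
import math


def ramp_time_s(speed_dps: float, accel_dps2: float) -> float:
    """Time to go from rest to the given speed at constant acceleration."""
    return speed_dps / accel_dps2


def ramp_angle_deg(speed_dps: float, accel_dps2: float) -> float:
    """Angle swept while ramping between rest and the given speed."""
    return speed_dps ** 2 / (2.0 * accel_dps2)


def move_time_s(angle_deg: float, max_speed_dps: float, accel_dps2: float) -> float:
    """Rest-to-rest rotation time, assuming symmetric acceleration and deceleration."""
    angle = abs(angle_deg)
    ramp = ramp_angle_deg(max_speed_dps, accel_dps2)
    if angle <= 2.0 * ramp:
        # Short move: the device speeds up for half the angle, then slows down,
        # without ever reaching the maximum speed.
        return 2.0 * math.sqrt(angle / accel_dps2)
    # Long move: ramp up, cruise at maximum speed, ramp down.
    cruise_time = (angle - 2.0 * ramp) / max_speed_dps
    return 2.0 * ramp_time_s(max_speed_dps, accel_dps2) + cruise_time


# At the motor's limits: 0.5 s to reach top speed, sweeping 45 degrees on the way.
print(ramp_time_s(180.0, 360.0), ramp_angle_deg(180.0, 360.0))
```

Under the same assumption, a controlled stop from 180 degrees/second would also sweep 45 degrees, which is a lot of travel if there is a glass next to the device. Lowering the top speed shrinks that angle quadratically.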

Lastly, we needed to define the types of movements that Echo Show 10 will make. As humans, we have an innate ability to know when to respond with our eyes versus a full move of the head. Echo Show 10, while not quite as adaptive as a human, tries to approximate this distinction with three zones of perception, defined by the camera’s field of view.

Within the “dead” zone, the center of the field of view, the device doesn’t move, even if the customers do. Within the “holding” zone, the regions of the field of view outside the center, the device turns only if the customer settles into a new position for long enough. And when the customer enters the “motion” zone, the edges of the field of view, the device moves, ensuring that the screen always remains visible.
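The three zones can be sketched as a small state machine. Everything numeric here is hypothetical: the zone boundaries, the dwell time, and the idea of measuring the customer's position as a horizontal offset from the center of the field of view (-1 at the left edge, +1 at the right edge) are assumptions for illustration. The real zones also depend on the customer's distance from the device, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Optional

DEAD_LIMIT = 0.3  # hypothetical: |offset| up to this is the "dead" zone
HOLD_LIMIT = 0.8  # hypothetical: |offset| up to this is the "holding" zone
DWELL_S = 2.0     # hypothetical: time in the holding zone before turning


def zone(offset: float) -> str:
    """Classify a horizontal offset in [-1, 1] into one of the three zones."""
    a = abs(offset)
    if a <= DEAD_LIMIT:
        return "dead"
    if a <= HOLD_LIMIT:
        return "holding"
    return "motion"


@dataclass
class ZoneTracker:
    holding_since: Optional[float] = None  # when the customer entered the holding zone

    def should_turn(self, offset: float, now_s: float) -> bool:
        z = zone(offset)
        if z == "motion":
            # Near the edge of the field of view: turn right away.
            self.holding_since = None
            return True
        if z == "dead":
            # Near the center: stay put, even if the customer shifts a little.
            self.holding_since = None
            return False
        # Holding zone: turn only once the customer has stayed there long enough.
        if self.holding_since is None:
            self.holding_since = now_s
        return now_s - self.holding_since >= DWELL_S
```

Note that the dwell timer is a simplification of "settles into a new position": it checks only that the customer has remained in the holding zone, not that they have stopped moving within it.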

The range of these zones, their dependency on your distance from the device, and the device’s speed and acceleration are tuned based on thousands of hours of lab and user testing. There are also certain situations where Echo Show 10 will not move — for instance, if the built-in camera shutter is closed or if SSL cannot differentiate between sounds in two very different directions.
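The two "do not move" conditions mentioned above could be expressed as a simple gate in front of the motion logic. This is a sketch under assumptions: the function name `motion_allowed`, the (angle, score) candidate format, and both thresholds are invented for illustration and are not taken from the device.

```python
from typing import List, Tuple


def motion_allowed(
    shutter_closed: bool,
    ssl_candidates: List[Tuple[float, float]],  # (angle_deg, score), assumed format
    min_separation_deg: float = 60.0,           # assumed: "very different directions"
    min_score_gap: float = 0.1,                 # assumed: scores this close are ambiguous
) -> bool:
    """Return False when the device should hold still."""
    # With the camera shutter closed, the device does not move.
    if shutter_closed:
        return False
    if len(ssl_candidates) >= 2:
        ranked = sorted(ssl_candidates, key=lambda c: c[1], reverse=True)
        (a1, s1), (a2, s2) = ranked[0], ranked[1]
        separation = abs((a1 - a2 + 180.0) % 360.0 - 180.0)
        # Two nearly equal candidates pointing in very different directions:
        # the sound source is ambiguous, so staying put is the safer choice.
        if separation >= min_separation_deg and (s1 - s2) < min_score_gap:
            return False
    return True
```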

Applications

After solving these scientific challenges came the fun part: what are some of the first features that will use motion? Video calling is a hugely popular feature for Echo Show customers, so the use of auto-framing and motion in calling was obvious. Customers also tend to place Echo Show devices in kitchens and use Alexa for recipes, so not requiring a busy cook to strain to see a recipe on-screen was also top of mind.

And because customers love Alexa Guard for helping keep their homes safe while they are away, remote access to the camera was high on the list as well. When Away Mode is turned on, Echo Show 10 will periodically pan the room and send a Smart Alert if someone is detected in its field of view. You can also remotely check in on your home for added peace of mind if you are on a trip or to see if your dog has snuck onto the couch while you’re at the grocery store.

In developing Echo Show 10, I have come to appreciate how complex, evolved, and adaptive we are as a species; the things we communicate with nonverbal cues are incredibly complex yet somehow globally understood. We believe that the potential of motion as a response modality is enormous, and we’re just scratching the surface of all the ways we can delight customers with Echo Show 10. For that reason, we’re inviting developers to build experiences for Echo Show 10, with motion APIs that they can use to unleash their creativity. To learn more about these new APIs, visit our developer blog.