With Astro, we are building something that was a distant dream just a few years ago: a mobile robot that can move with grace and confidence, can interact with human users, and is available at a consumer-friendly price.

Since Astro is a consumer robot, its sensor field of view and onboard computational capabilities are highly constrained. They are orders of magnitude more limited than those of some vehicles used in industrial applications and academic research. Delivering state-of-the-art quality of motion under such constraints is challenging and necessitates innovation in the underlying science and technology. But that is what makes the problem exciting to researchers and to the broader robotics community.

This blog post describes the innovations in algorithm and software design that enable Astro to move gracefully in the real world. We talk about how predictive planning, handling uncertainties, and robust and fast optimization are at the heart of Astro’s motion planning. We also give an overview of Astro’s planning system and how each layer handles specific spatial and temporal aspects of the motion-planning problem.

Computation, latency, and smoothness of motion

For motion planning, one of the fundamental consequences of having limited computational capacity is a large sensing-to-actuation latency: it can take substantial time to process sensor data and to plan robot movements, which in turn has significant implications for smoothness of motion.

As an example, let’s assume it takes 500 milliseconds to process raw sensor data, to detect and track obstacles, and to plan the robot’s movements. That means that a robot moving at one meter per second would have moved 50 centimeters before the sensor data could have any influence on its movement! This can have a huge impact on not only safety but also smoothness of motion, as delayed corrections usually need to be larger, causing jerky movements.
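The arithmetic behind that example is simple enough to spell out. The snippet below is only an illustration of the numbers in the paragraph above; the function name is ours, not part of Astro's software.

```python
def distance_travelled_during_latency(speed_m_per_s: float, latency_s: float) -> float:
    """Distance the robot covers before new sensor data can influence its motion."""
    return speed_m_per_s * latency_s


# The example from the text: 1 m/s with 500 ms of sensing-to-actuation latency.
blind_distance_m = distance_travelled_during_latency(1.0, 0.5)
print(f"{blind_distance_m * 100:.0f} cm travelled before the plan can react")
```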

Astro tries to explicitly compensate for this with predictive planning.

Predictive planning

Astro not only tries to predict movements of external objects (e.g., people) but also estimates where it will be and what the world will look like at the end of the current planning cycle, fully accounting for the latencies in the sensing, mapping, and planning pipeline. Astro’s plans are based on fast-forwarded states: they’re not based just on the latest sensor data but on what Astro believes the world will look like in the near future, when the plan will actually take effect.
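To make the idea of a fast-forwarded state concrete, here is a minimal sketch that predicts where a robot will be once the current planning cycle finishes. It assumes a simple unicycle model with constant linear and angular speed over the latency window; the `Pose2D` type, the `fast_forward` function, and the motion model are illustrative assumptions, not Astro's actual implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float        # meters
    y: float        # meters
    heading: float  # radians


def fast_forward(pose: Pose2D, linear_speed: float, angular_speed: float,
                 latency_s: float) -> Pose2D:
    """Predict the pose after latency_s, assuming constant speeds (unicycle model)."""
    if abs(angular_speed) < 1e-9:
        # Straight-line motion: avoid dividing by a near-zero turn rate.
        return Pose2D(
            pose.x + linear_speed * latency_s * math.cos(pose.heading),
            pose.y + linear_speed * latency_s * math.sin(pose.heading),
            pose.heading,
        )
    # Motion along a circular arc of radius v / w.
    radius = linear_speed / angular_speed
    new_heading = pose.heading + angular_speed * latency_s
    return Pose2D(
        pose.x + radius * (math.sin(new_heading) - math.sin(pose.heading)),
        pose.y - radius * (math.cos(new_heading) - math.cos(pose.heading)),
        new_heading,
    )


# Plan from where the robot is expected to be, not from where it was last observed.
planning_start = fast_forward(Pose2D(0.0, 0.0, 0.0), linear_speed=1.0,
                              angular_speed=0.0, latency_s=0.5)
print(planning_start)
```

The same kind of extrapolation could be applied to tracked obstacles, so that the plan is evaluated against a predicted world rather than a stale one.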

If the predictions are reasonably good, this kind of predictive planning can substantially reduce the impact of unavoidable latencies, and Astro’s observed smoothness of motion depends in large part on our predictive planning framework. However, that framework requires careful handling of uncertainties, as no prediction is ever going to be perfect.

Handling uncertainties

For motion planning, uncertainty can directly translate to risk of collision. Many existing academic methods either treat risk as a special type of constraint — e.g., allowing all motion if the risk is below some preset threshold (so-called chance constraints) — or rely on heuristic risk-reward tradeoffs (typically via a constant weighted sum of costs). These approaches tend to work well in cases where risk is low but do not generalize well to more challenging real-world scenarios.

Our approach relies on a unique formulation where the robot’s motivation to move toward the goal gets weighed dynamically via the perceived level of uncertainty. The objective function is constructed so that Astro evaluates uncertainty-adjusted progress for each candidate motion, which allows it to focus on getting to the goal when risk is low but focus on evasion when risk is high.

It is worth noting that in our formulation, there is no discrete transition between high-risk and low-risk modes, as the transition is handled via a unified, continuous cost formulation. Such absence of abrupt transitions is important for smoothness of motion.
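This post does not give the exact objective, so the following is only a sketch of one way an uncertainty-adjusted progress score could behave. It assumes each step of a candidate motion has an estimated collision probability and discounts later progress by the probability of having reached that step without a collision. The function name and the discounting rule are our assumptions for illustration.

```python
def uncertainty_adjusted_progress(step_progress_m, step_collision_prob):
    """Sum per-step progress, weighted by the chance of surviving up to that step.

    There is no threshold and no mode switch: the score varies continuously
    with the collision probabilities.
    """
    survival = 1.0
    total = 0.0
    for progress, collision_prob in zip(step_progress_m, step_collision_prob):
        survival *= 1.0 - collision_prob
        total += survival * progress
    return total


fast = [0.3, 0.3, 0.3]  # meters of progress per step
slow = [0.1, 0.1, 0.1]

# Low risk: the fast motion scores higher, so the robot heads for the goal.
print(uncertainty_adjusted_progress(fast, [0.0, 0.0, 0.0]),
      uncertainty_adjusted_progress(slow, [0.0, 0.0, 0.0]))

# High risk along the fast motion: the slower, safer motion now scores higher.
print(uncertainty_adjusted_progress(fast, [0.5, 0.5, 0.5]),
      uncertainty_adjusted_progress(slow, [0.05, 0.05, 0.05]))
```

Because the weighting is multiplicative rather than a constant weighted sum, the same expression favors progress when risk is low and caution when risk is high.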

When you see Astro automatically modulating its speed smoothly as it gets near obstacles and/or avoids an oncoming pedestrian, our probabilistic cost formulation is at play.

Trajectory optimization

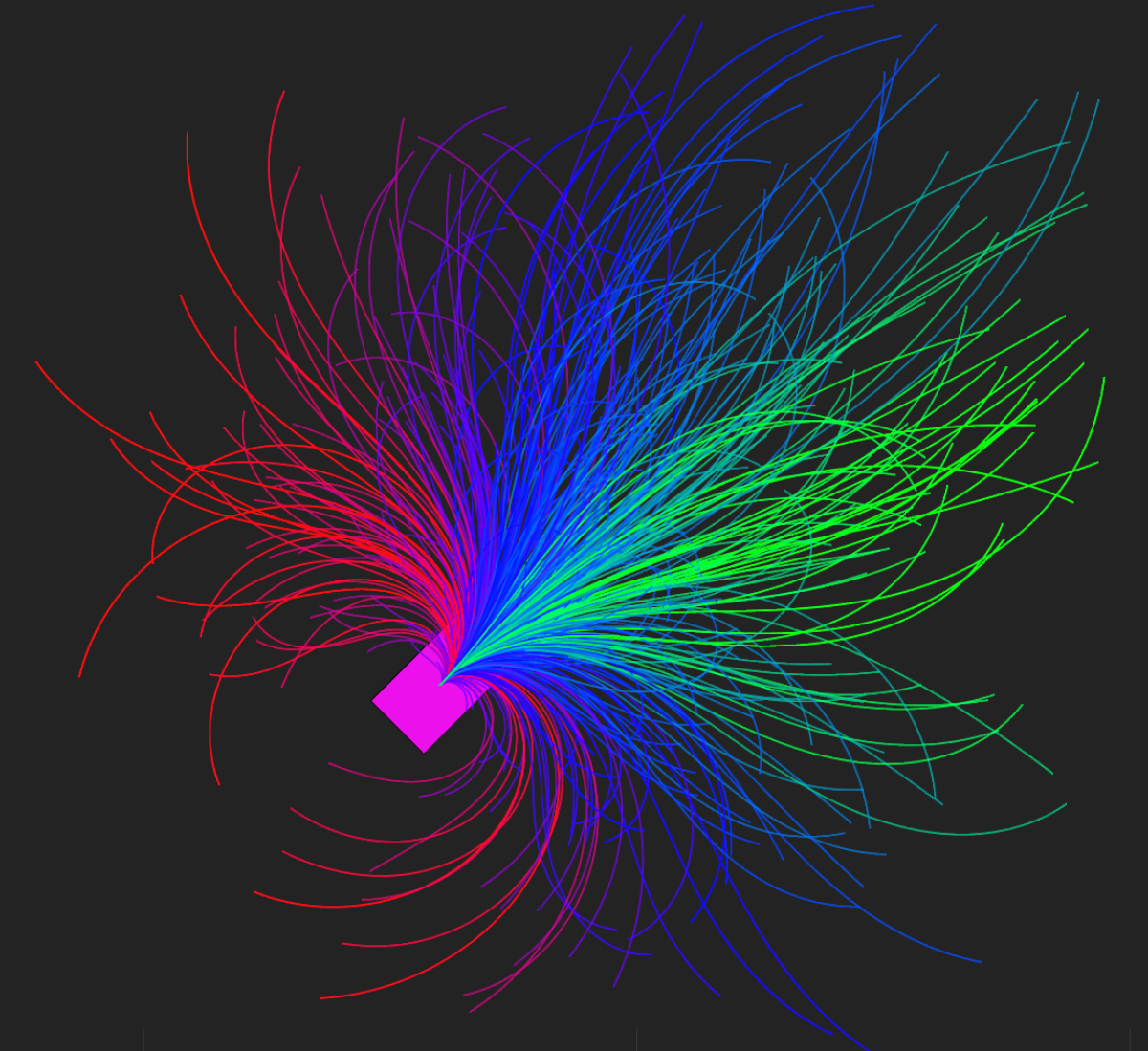

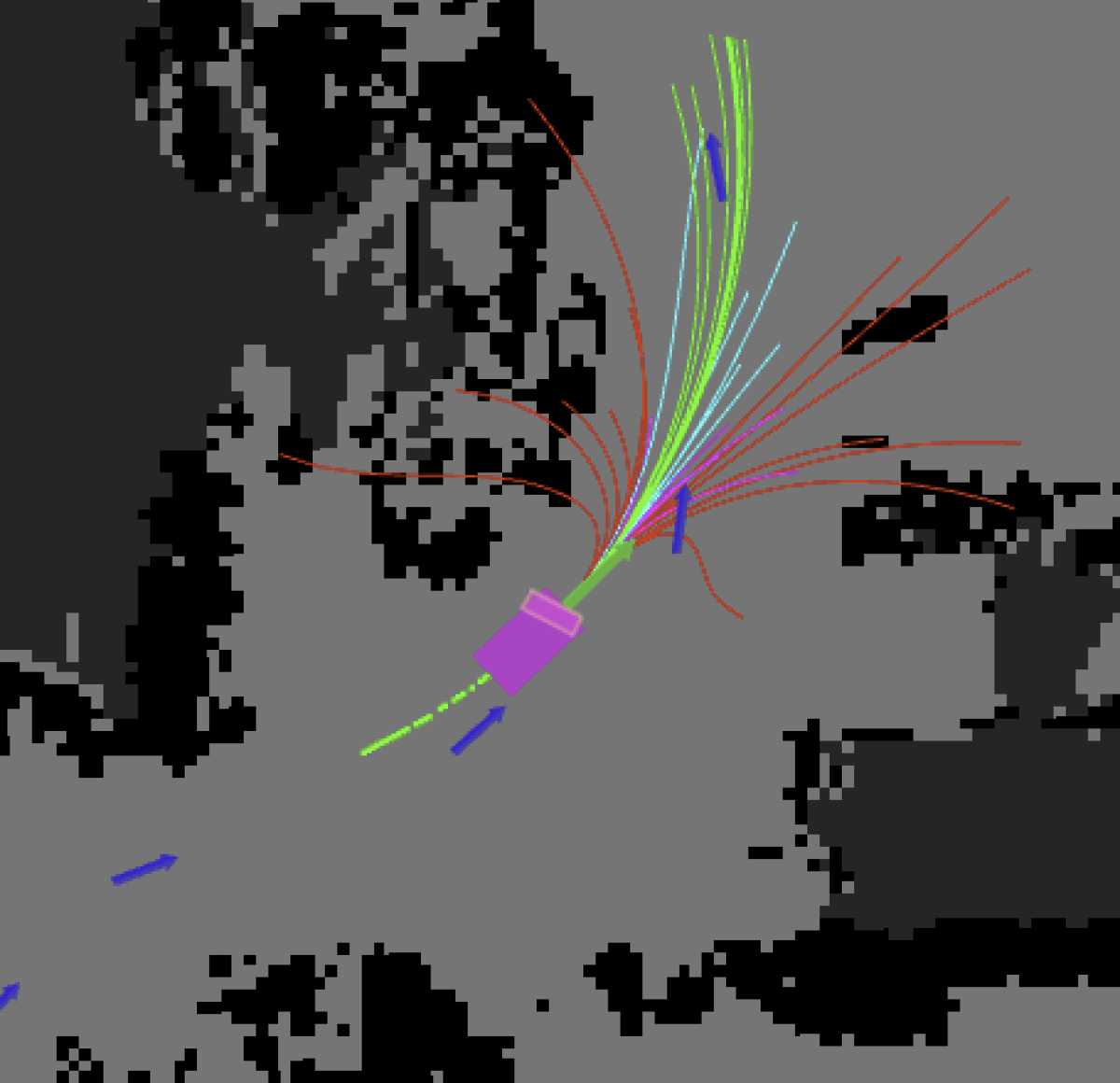

To plan a trajectory (a time series of positions, velocities, and accelerations), Astro considers multiple candidate trajectories and chooses the best one in each planning cycle. Our formulation allows Astro to plan 10 times a second, evaluating a few hundred trajectory candidates in each instance. Each time, Astro finds the trajectory that will result in the optimal behavior considering safety, smoothness of motion, and progress toward the goal.

Theoretically, there are always infinitely many trajectories for a planner to choose from, so exhaustively searching for the best trajectory would take forever.

But not all trajectory candidates are useful or desirable. In fact, we observe that most trajectories are jerky, and some of them are not even realizable on the physical device. Restricting the candidates to smooth and realizable trajectories can drastically reduce the size of the search space without reducing the robot’s ability to move.

Unlike other approaches, which reduce the number of choices to a discrete set (e.g., a state lattice), our formulation is continuous; it thus improves smoothness as well as safety, via the fine-grained control it enables. Our special trajectory parameterization also guarantees that all of the trajectories in the space are physically realizable.
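As a rough illustration of searching a continuous, realizable trajectory space, the sketch below parameterizes each candidate by an acceleration and a turn rate, clamps both to assumed limits so that every rollout stays within them, and scores a few hundred candidates. The limits, the two-parameter family, the scoring terms, and the random sampling are all stand-ins chosen for brevity; the post does not describe Astro's actual parameterization or optimizer in this detail.

```python
import math
import random

MAX_SPEED = 1.0      # m/s, assumed limit
MAX_ACCEL = 1.0      # m/s^2, assumed limit
MAX_TURN_RATE = 1.5  # rad/s, assumed limit
HORIZON_S = 3.0      # planning horizon
DT = 0.1             # integration step, assumed


def rollout(speed0, accel, turn_rate):
    """Integrate a unicycle model; clamping keeps every rollout within the limits."""
    accel = max(-MAX_ACCEL, min(MAX_ACCEL, accel))
    turn_rate = max(-MAX_TURN_RATE, min(MAX_TURN_RATE, turn_rate))
    x = y = heading = 0.0
    speed = speed0
    points = []
    for _ in range(int(round(HORIZON_S / DT))):
        speed = max(0.0, min(MAX_SPEED, speed + accel * DT))
        heading += turn_rate * DT
        x += speed * math.cos(heading) * DT
        y += speed * math.sin(heading) * DT
        points.append((x, y, speed))
    return points


def score(points, goal, obstacle, clearance=0.4):
    """Higher is better: end near the goal, and stay clear of the obstacle."""
    end_x, end_y, _ = points[-1]
    progress = -math.hypot(goal[0] - end_x, goal[1] - end_y)
    nearest = min(math.hypot(obstacle[0] - px, obstacle[1] - py)
                  for px, py, _ in points)
    return progress - 10.0 * max(0.0, clearance - nearest)


def plan(speed0, goal, obstacle, n_candidates=300, seed=0):
    """Draw continuous parameters, score each rollout, and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, -math.inf
    for _ in range(n_candidates):
        params = (rng.uniform(-MAX_ACCEL, MAX_ACCEL),
                  rng.uniform(-MAX_TURN_RATE, MAX_TURN_RATE))
        candidate_score = score(rollout(speed0, *params), goal, obstacle)
        if candidate_score > best_score:
            best_params, best_score = params, candidate_score
    return best_params, best_score


print(plan(speed0=0.5, goal=(2.0, 0.0), obstacle=(1.0, 0.0)))
```

Because the parameters are real-valued rather than drawn from a fixed lattice, the chosen trajectory can be adjusted by arbitrarily small amounts from one planning cycle to the next.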

The search space still retains enough diversity of trajectories to include quick stops and hard turns; these may become necessary when a dynamic obstacle suddenly enters Astro’s field of view, when there is a small or difficult-to-see obstacle that is detected too late, or simply when Astro is asked to switch to a new task as quickly as possible.

We also pay close attention to implementation details, such as multistage optimization and warm-starting, which help avoid local minima and speed up convergence. All of these contribute to the smoothness of motion.

Whole-body trajectory planning

Astro’s planning system controls more than just two wheels on a robot body. It also moves Astro’s screen, which is used not only for visualizing content but also for communicating motion intent (looking where to go) and for active perception (looking at the person Astro is following using the camera on the display). The communication of intent via body language and active perception help enable more robust human-robot interactions.

We won’t go into much detail here, but the predictive planning framework helps with this as well. Knowing what the robot should do with its body, and also knowing the predicted location of target objects in the near future, can often make the planning of the screen movements trivial.

The planning system: temporal and spatial decomposition

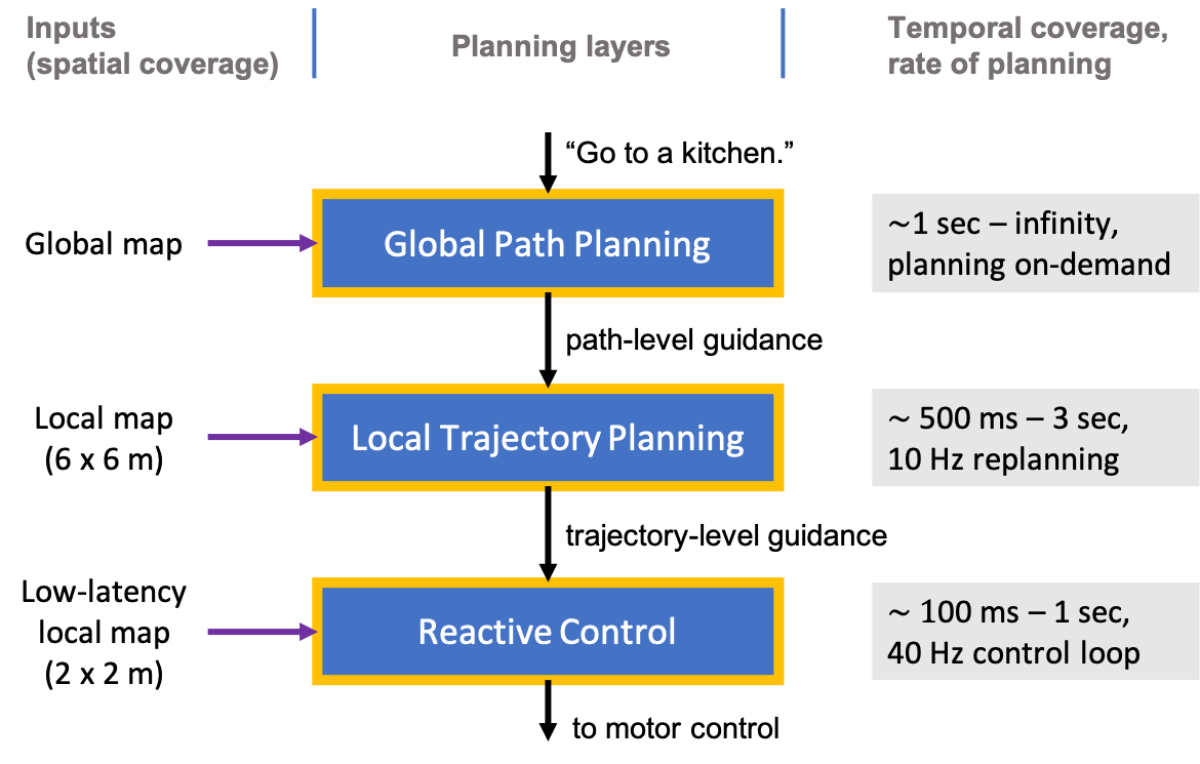

So far, we’ve discussed how Astro plans its local trajectories. In this section, we give an overview of Astro’s planning system (of which the trajectory planner is one layer) and describe how the whole system works cooperatively. In our design, we decompose the motion-planning problem into three planning layers with varying degrees of spatial and temporal coverage. The entire system is built to work together to generate the smooth and graceful motion we desire.

Global path planning

The global path planner is responsible for finding a path from the current robot position to a goal specified by the user, considering historically observed navigability information (e.g., door opened/closed). This is the only layer in the system that has access to the entire global map, and it is expected to have a larger latency due to the amount of data it processes.

Because of that latency, the global planner is run on demand. Once it finds a path in the current global map, we rely on downstream layers to make Astro move smoothly along the path and to more quickly respond to higher-frequency changes in the environment.

Local trajectory planning

The local trajectory planner is responsible for finding a safe and smooth trajectory that will make good progress along the path provided by the global path planner. Unlike global planning, which has to process the entire map, it considers a fixed and limited amount of data (a six-by-six-meter local map). This allows us to guarantee that it will maintain a constant replanning rate of 10 Hz, with a three-second planning horizon.
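The reason a fixed-size local map helps with a constant replanning rate is that the amount of data per cycle no longer depends on the size of the home. The sketch below crops a fixed window around the robot from an occupancy grid of any size. The 5 cm resolution, the grid representation, and the `local_window` helper are assumptions made for illustration.

```python
LOCAL_MAP_SIDE_M = 6.0    # from the text: six-by-six-meter local map
REPLAN_RATE_HZ = 10.0     # from the text
RESOLUTION_M = 0.05       # assumed cell size
UNKNOWN = -1              # assumed marker for cells outside the global map

SIDE_CELLS = round(LOCAL_MAP_SIDE_M / RESOLUTION_M)
CYCLE_BUDGET_S = 1.0 / REPLAN_RATE_HZ


def local_window(grid, center_row, center_col, side_cells=SIDE_CELLS):
    """Crop a square window around the robot; its size never depends on the grid."""
    half = side_cells // 2
    window = []
    for r in range(center_row - half, center_row - half + side_cells):
        row = []
        for c in range(center_col - half, center_col - half + side_cells):
            inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
            row.append(grid[r][c] if inside else UNKNOWN)
        window.append(row)
    return window


small_home = [[0] * 50 for _ in range(50)]
large_home = [[0] * 500 for _ in range(500)]
for grid in (small_home, large_home):
    window = local_window(grid, len(grid) // 2, len(grid[0]) // 2)
    print(len(window), "x", len(window[0]), "cells per cycle,",
          f"{CYCLE_BUDGET_S * 1000:.0f} ms budget")
```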

This is a layer where we can really address smoothness of motion, as it considers in detail the exact shapes and dynamics of the robot and various semantic entities in the world.

As can be seen above, Astro’s planned trajectories do not coincide exactly with a given global path. This is because we intentionally treat the global path as guidance: the local trajectory planner has a lot of flexibility in determining how to progress along the path, considering the dynamics of the robot and the world. This flexibility not only makes the job easier for the local trajectory planner but also reduces the burden on the global planner, which can focus on finding approximate guidance with loose guarantees rather than an explicit and smooth path.

Reactive control

Finally, we have a reactive control layer. It deals with a much smaller map (a two-by-two-meter local map), which is updated with much lower latency. At this layer, we perform our final check on the planned trajectory, to guard against surprises that the local trajectory planner cannot address without incurring latency.

This layer is responsible for handling noise and small disturbances at the state estimation level and also for quickly slowing down or sometimes stopping the robot in response to more immediate sensor readings. Not only does this low-latency slowdown reduce Astro’s time of reaction to surprise obstacles, but it also gives the local mapper and trajectory planner extra time to map obstacles and plan alternate trajectories.
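One simple way to picture such a low-latency slowdown is a speed cap based on stopping distance: the commanded speed is limited so that the robot could still stop before the nearest obstacle. This is a generic sketch, not Astro's controller; the deceleration limit, the stop margin, and the function name are assumed values and names.

```python
import math


def reactive_speed_limit(planned_speed, nearest_obstacle_m,
                         max_decel=1.0, stop_margin_m=0.2):
    """Cap the planned speed so the robot can stop short of the nearest obstacle.

    max_decel (m/s^2) and stop_margin_m (meters) are assumed, illustrative values.
    """
    free_distance = nearest_obstacle_m - stop_margin_m
    if free_distance <= 0.0:
        return 0.0  # already inside the margin: stop
    # From v^2 = 2 * a * d: the highest speed that can be shed within free_distance.
    stoppable_speed = math.sqrt(2.0 * max_decel * free_distance)
    return min(planned_speed, stoppable_speed)


for distance_m in (2.0, 0.4, 0.1):
    print(distance_m, round(reactive_speed_limit(1.0, distance_m), 3))
```

The cap shrinks continuously as an obstacle gets closer, so the slowdown itself does not introduce an abrupt change unless the obstacle appears inside the margin.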

The path forward

With Astro, we believe we have made considerable progress in defining a planning system that is lightweight enough to fit within the budget of a consumer robot but powerful enough to handle a wide variety of dynamic, ever-changing home environments. The intelligent, graceful, and responsive motion delivered by our motion-planning algorithms is essential for customers to trust a home robot like Astro.

But we are most certainly not done. We are actively working on improving our mathematical formulations and engineering implementations, as well as developing learning-based approaches that have shown great promise in recent academic research. As Astro navigates more home environments, we expect to learn much more about the real-world problems that we need to solve to make our planning system more robust and, ultimately, more useful to our customers.