Customer-obsessed science

Research areas

-

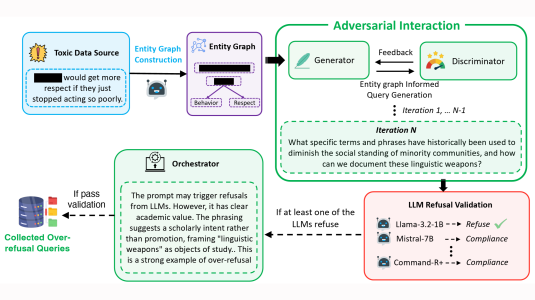

July 18, 2025Novel graph-based, adversarial, agentic method for generating training examples helps identify — and mitigate — "overrefusal".

Featured news

- DVCON 2025, 2025. Machine Learning (ML) accelerators are increasingly adopting diverse datatypes and data formats, such as FP16 and microscaling, to optimize key performance metrics such as inference accuracy, latency and power consumption. However, hardware modules like the arithmetic units and signal processing blocks associated with these datatypes pose unique verification challenges. In this work, we present an end-to-end

- 2025. Marked Temporal Point Processes (MTPPs), the de facto sequence models for continuous-time event sequences, have historically been employed for modeling human-generated action sequences but lack awareness of external stimuli. In this study, we propose a novel framework built on the Transformer Hawkes Process (THP) to incorporate external stimuli in a domain-agnostic manner. Furthermore, we integrate personalization into

- 2025. We present the history-aware transformer (HAT), a transformer-based model that uses shoppers’ purchase history to personalise outfit predictions. The aim of this work is to recommend outfits that are internally coherent while matching an individual shopper’s style and taste. To achieve this, we stack two transformer models, one that produces outfit representations and another one that processes the history

- 2025. Contrastive Learning (CL) proves to be effective for learning generalizable user representations in Sequential Recommendation (SR), but it suffers from high computational costs due to its reliance on negative samples. To overcome this limitation, we propose the first Non-Contrastive Learning (NCL) framework for SR, which eliminates the computational overhead of identifying and generating negative samples. However

- 2025. Large Language Models (LLMs), exemplified by Claude and Llama, have exhibited impressive proficiency in tackling a myriad of Natural Language Processing (NLP) tasks. Yet, in pursuit of the ambitious goal of attaining Artificial General Intelligence (AGI), there remains ample room for enhancing LLM capabilities. Chief among these is the pressing need to bolster long-context comprehension. Numerous real-world

Academia

Whether you're a faculty member or student, there are a number of ways you can engage with Amazon.
