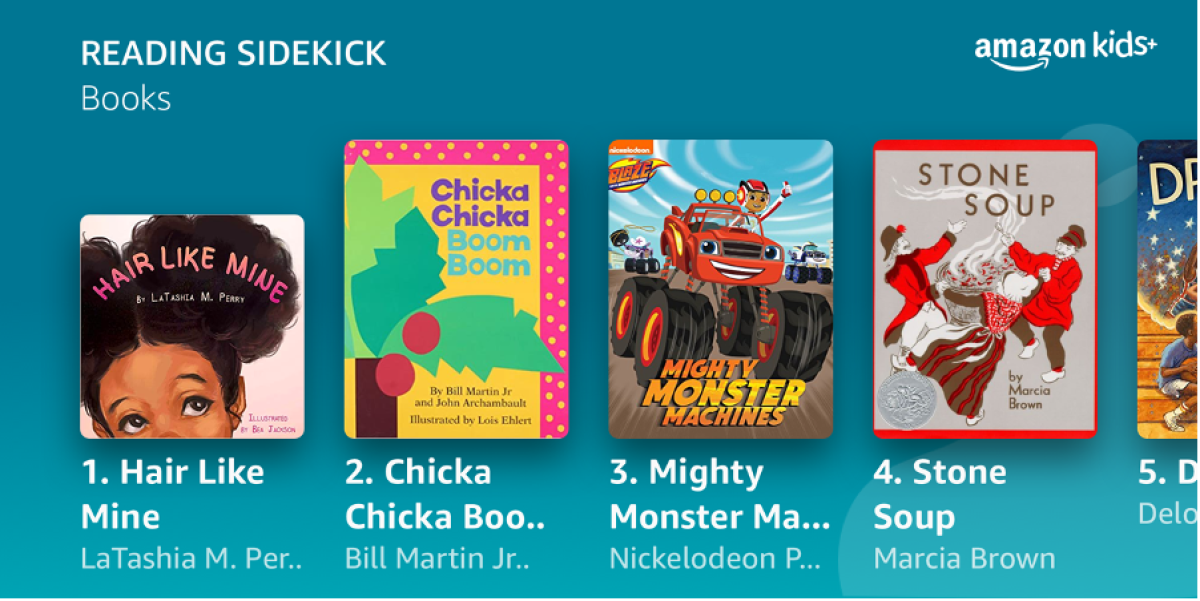

In June, Alexa announced a new feature called Reading Sidekick, which helps kids grow into confident readers by taking turns reading with Alexa, while Alexa provides encouragement and support. To make this an engaging and entertaining experience, the Amazon Text-to-Speech team developed a version of the Alexa voice that speaks more slowly and with more expressivity than the standard, neutral voice.

Because expressive speech is more variable than neutral speech, expressive-speech models are prone to stability issues, such as sudden stoppages or harsh inflections. To tackle this problem, model developers might collect data that represents a dedicated style, but that is costly and time-consuming. Alternatively, they might deliver a model that is not based on attention (that is, one that doesn’t focus on particular words of prior inputs when determining how to handle the current word). However, attentionless models are more complex, requiring more effort to deploy and often causing additional latency.

Our goal was to develop a highly expressive voice without increasing the burden of either data collection or model deployment. We did this in two ways: by developing new approaches for data preprocessing and by delivering models adapted to expressive speech. We also collaborated closely with user experience (UX) researchers, both before and after building our models.

To determine what training data to collect, we ran a UX study before the start of the project, in which children and their parents listened to a baseline voice synthesizing narrative passages. The results indicated that a slower speech rate and enhanced expressivity would improve customer experience. When recording training data, we actively controlled both the speaking rate and the expressivity level.

After we’d built our models, we did a second UX study and found that, for story reading, subjects preferred our new voice over the standard Alexa voice by a two-to-one margin.

Data curation

The instability of highly expressive voice models is due to “extreme prosody”, which is common in the reading of children’s books. Prosody is the rhythm, emphasis, melody, duration, and loudness of speech; adults reading to young children will often exaggerate inflections, change volume dramatically, and extend or shorten the duration of words to convey meaning and hold their listeners’ attention.

Although we want our dataset to capture a wide range of expressivity, some utterances may be too extreme. We developed a new approach to preprocessing training data that removes such outliers. For each utterance, we calculate the speaker embedding, a vector representation that captures prosodic features of the speaker’s voice. If the distance between an utterance’s embedding and the average embedding is too large, we discard the utterance from the training set.
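The outlier filter described above can be sketched as follows. This is a minimal illustration, not the team's implementation: the Euclidean distance metric, the fixed `max_distance` threshold, and the function name are all assumptions, since the article does not say how distance is measured or how the cutoff is chosen.

```python
import math


def filter_outlier_utterances(embeddings, max_distance):
    """Return indices of utterances whose embedding lies close to the mean.

    embeddings: list of equal-length lists of floats, one per utterance.
    max_distance: hypothetical cutoff; utterances farther than this from
        the mean embedding are treated as outliers and dropped.
    """
    count = len(embeddings)
    dim = len(embeddings[0])
    # Average embedding over all utterances, computed per dimension.
    mean = [sum(e[d] for e in embeddings) / count for d in range(dim)]
    kept = []
    for index, embedding in enumerate(embeddings):
        # Euclidean distance is an assumption; cosine distance would also work.
        if math.dist(embedding, mean) <= max_distance:
            kept.append(index)
    return kept


# Toy 2-D embeddings: the last utterance is far from the rest.
toy_embeddings = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [5.0, 5.0]]
kept_indices = filter_outlier_utterances(toy_embeddings, max_distance=3.0)
```

In practice the threshold would likely be tuned on the data, for example as a percentile of the observed distances, rather than fixed in advance.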

Next, from each speech sample, we remove segments that cannot be automatically transcribed from audio to text. Since most such segments are dead air, removing them prevents the model from pausing too long between words.
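A rough sketch of this trimming step is shown below. It assumes that some transcription or alignment tool has already produced a list of `(start_seconds, end_seconds, text)` segments for each recording, with empty text where nothing could be transcribed; that segment format and the function name are hypothetical, as the article does not describe the tooling.

```python
def drop_untranscribed(samples, sample_rate, segments):
    """Keep only the audio spans that have a non-empty transcript.

    samples: list of audio sample values.
    sample_rate: samples per second.
    segments: list of (start_seconds, end_seconds, text) tuples
        (a hypothetical format for the aligner output).
    """
    kept = []
    for start_seconds, end_seconds, text in segments:
        if text and text.strip():
            start = int(round(start_seconds * sample_rate))
            end = int(round(end_seconds * sample_rate))
            kept.extend(samples[start:end])
    return kept


# Toy example: ten samples at 10 Hz with an untranscribable gap in the middle.
toy_samples = list(range(10))
toy_segments = [(0.0, 0.3, "once"), (0.3, 0.7, ""), (0.7, 1.0, "upon")]
trimmed = drop_untranscribed(toy_samples, 10, toy_segments)
```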

Modeling

On the modeling side, we use regularization and data augmentation to increase stability. A neural-network-based text-to-speech (NTTS) system consists of two components: (1) a mel-spectrogram generator and (2) a vocoder. The mel-spectrogram generator takes as input a sequence of phones, the shortest phonetic units, and outputs a mel-spectrogram, which represents the amplitude of the signal at audible frequencies over time. It is responsible for the prosody of the voice.

The vocoder adds phase information to the mel-spectrogram, to create the synthetic speech signal. Without the phase information, the speech would be robotic. Our team previously developed a universal vocoder that works well for this application.

During training, we apply an L2 penalty to the weights of the mel-spectrogram generator; that is, the training loss includes a term proportional to the sum of the squared weights, so large weights are penalized more heavily. This is a form of regularization, which reduces overfitting to the recorded data.
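In its standard form, an L2 penalty adds the sum of the squared weights, scaled by a coefficient, to the training loss. The sketch below shows that arithmetic on a flat list of weights; the function names and the `coefficient` value are illustrative assumptions, and the article does not state the coefficient used for the mel-spectrogram generator.

```python
def l2_penalty(weights, coefficient):
    """Standard L2 penalty: coefficient times the sum of squared weights."""
    return coefficient * sum(w * w for w in weights)


def regularized_loss(task_loss, weights, coefficient):
    """Total training loss: the task loss plus the L2 penalty."""
    return task_loss + l2_penalty(weights, coefficient)


def l2_penalty_gradient(weights, coefficient):
    """Gradient of the penalty; it pulls every weight toward zero."""
    return [2.0 * coefficient * w for w in weights]


# Toy example with two weights and a hypothetical coefficient of 0.5.
toy_weights = [1.0, -2.0]
total = regularized_loss(1.0, toy_weights, coefficient=0.5)
gradient = l2_penalty_gradient(toy_weights, coefficient=0.5)
```

Because the gradient of the penalty grows linearly with each weight, the largest weights shrink fastest, which discourages the model from fitting idiosyncrasies of individual recordings.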

We also use data augmentation to improve the output voice. We add neutral recordings to the training recordings, providing less extreme prosodic trajectories for the model to learn from.

As an additional input, for both types of training data, we provide the model with a style ID, which helps it learn to distinguish the storytelling style from other styles available through Alexa. The combination of recording, processing, and regularization makes the model stable.
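One way to picture the augmented training set is as a single pool of examples, each tagged with its style. The sketch below is an assumption-laden illustration: the `STYLE_IDS` mapping, the dictionary layout, and the function name are hypothetical, and the article does not say how the two data sources are weighted or sampled.

```python
# Hypothetical style IDs; the article does not specify the actual values.
STYLE_IDS = {"neutral": 0, "storytelling": 1}


def build_training_examples(storytelling_utterances, neutral_utterances):
    """Merge both recording sets, tagging each example with its style ID.

    Each utterance is represented here only by its phone sequence; a real
    pipeline would also carry the target mel-spectrogram.
    """
    examples = []
    sources = (
        ("storytelling", storytelling_utterances),
        ("neutral", neutral_utterances),
    )
    for style, utterances in sources:
        for phones in utterances:
            examples.append({"phones": phones, "style_id": STYLE_IDS[style]})
    return examples


# Toy example: one expressive utterance plus two neutral ones.
toy_examples = build_training_examples(
    [["w", "ah", "n"]],
    [["t", "uw"], ["th", "r", "iy"]],
)
```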

Evaluation

To evaluate the Reading Sidekick voice, we asked adult crowdsourced testers which voice they preferred for reading stories to children. The standard Alexa voice was our baseline. We tested 100 short passages with a mean duration of around 15 seconds, each of which was evaluated 30 times by different crowdsourced testers. The testers were native speakers of English; no other constraint was imposed on the tester selection.

The results favor the Reading Sidekick voice by a large margin (61.16% for Reading Sidekick vs. 30.46% for the baseline, with p < 0.001), particularly considering the very noisy nature of crowdsourced evaluations and the fact that we did not discard any of the data received.
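The article does not say which statistical test produced the reported p-value. As one plausible illustration, the sketch below applies a two-sided sign test (normal approximation with continuity correction) to preference counts, ignoring any ratings that express no preference. The function name is an assumption, the counts in the example are made up for illustration and are not the study's data, and the test treats every rating as independent, which is a simplification when many ratings share a passage.

```python
import math


def sign_test_p_value(prefer_a, prefer_b):
    """Two-sided sign test p-value via the normal approximation.

    Under the null hypothesis, each rating that expresses a preference
    favors either voice with probability 0.5.
    """
    total = prefer_a + prefer_b
    if total == 0:
        raise ValueError("at least one preference rating is required")
    mean = total / 2.0
    std_dev = math.sqrt(total) / 2.0
    # Continuity correction; clamp at zero when the split is nearly even.
    z = max((abs(prefer_a - mean) - 0.5) / std_dev, 0.0)
    # Two-sided tail probability of a standard normal variable.
    return math.erfc(z / math.sqrt(2.0))


# Illustrative counts only (not the study's data): 60 vs. 30 preferences.
toy_p_value = sign_test_p_value(60, 30)
```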

Thanks to Marco Nicolis and Arnaud Joly for their contributions to this research.