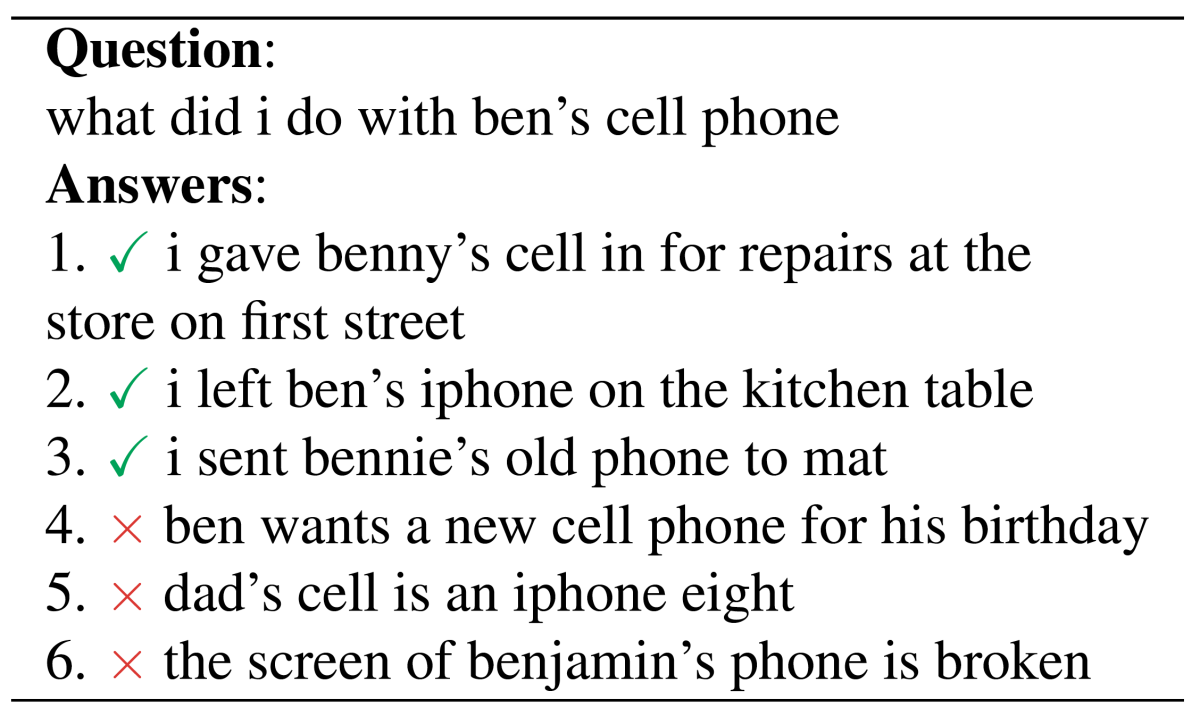

In May 2018, Amazon launched Alexa’s Remember This feature, which enables customers to store “memories” (“Alexa, remember that I took Ben’s watch to the repair store”) and recall them later by asking open-ended questions (“Alexa, where is Ben’s watch?”). At this year’s IEEE Spoken Language Technologies conference, we presented a paper relating to the technology behind this feature.

Most memory retrieval services depend on machine learning systems trained on sample questions and answers. But they often suffer from the same problem: the machine learning systems are trained using one criterion of success — a loss function — but evaluated using a different criterion — the F1 score, which is a cumulative measure of false positives and false negatives.

In our paper, we use a reinforcement-learning-based model to directly train a memory retrieval system using the F1 score. While the model is not currently in production, our experiments show that it can deliver significant improvements in F1 score over methods that use other criteria during training.

Typically, a machine learning system is trained to minimize some loss function, which describes how far the system is from perfect accuracy. After every pass through the training data, a learning algorithm estimates the shape of the loss function and modifies the system’s settings, in an attempt to find a lower value for the function. It’s a process called gradient descent, because, essentially, the algorithm tries to determine which way the function slopes and to move down the slope.

But frequently, the same machine learning systems are evaluated on F1 score. Calculating the F1 score involves simply counting false positives and false negatives (and then taking the harmonic mean of the totals). Since a count is a number, not a function, it is not differentiable: its slope will always be zero.

In order to use F1 score to directly train a memory retrieval system, we model the problem as a reinforcement-learning-based problem. Reinforcement learning envisions the learning experience as a series of encounters between an agent and the world. At each encounter, the agent takes some action, in an attempt to maximize some reward. Over a series of encounters, it constructs a set of policies that dictate what actions to take in which circumstances.

In our system, the agent’s reward is an F1 score. Since the F1 score factors in both false positives and false negatives, the agent needs to apply its policies to multiple data items at once. This differs from conventional neural-network training, in which data items are fed to the network one at a time.

Our data set consists of about 26,000 questions, which have an average of 24 associated memories each, all labeled as either relevant or irrelevant. The data is a mix of utterances collected from customer interactions and generated by annotators.

We used the same neural-network architecture for both our reinforcement-learning-based system and the supervised learning system with which we compared it. The reinforcement-learning-based system, however, was trained on batches of data, each of which consisted of one question and all the associated answers, relevant and irrelevant. The supervised-learning system was trained on individual question-answer pairs.

Reinforcement-learning-based systems can be difficult to initialize: if the agent begins with entirely random policies, it can take a long time to discover a useful solution. So we bootstrap our reinforcement-learning system using supervised learning and a loss function that is designed to approximate the F1 score.

Because we were using a neural network to instantiate our learning agent, we also needed to tinker with the raw F1 score. Neural networks generally learn through a process called back-propagation: errors in a network’s final output are tracked back through the network, and the settings of individual network nodes are adjusted to reduce their contribution to the overall error.

With the F1 score, this approach can cause problems. If all of the agent’s classifications for a given batch of samples are wrong, for instance, the F1 score is zero. But a zero means that no error will propagate back through the network, so we instead assign the classifications a negative score. We introduce some additional adjustments for other extreme cases where the F1 score breaks down.

We also experimented with different ways of representing the inputs to our systems. In one set of experiments, we used off-the-shelf word embeddings, which represent the words of an input as points in a high-dimensional space, such that words with similar meanings are grouped together.

In another set of experiments, we also used character embeddings, which are learned during training and factor in all the substrings of letters that compose a given word. Character embeddings are often useful because they enable natural-language-understanding systems to make educated guesses about the meanings of unfamiliar words on the basis of their roots, prefixes, and suffixes

To our surprise, the systems that used character embeddings — both the reinforcement-learning-based system and the baseline system — fared worse than the ones that used word embeddings only. That could be because the answers in our data set were short and fairly uniform, and with more heterogenous data, character embeddings will begin to confer advantages. This could bode well for the reinforcement-learning approach, as it was in the experiments that used character embeddings that our system offered the greatest improvement — up to 5% over baseline in F1 score.

Acknowledgments: Amanjit Kainth, Siamak Shakeri, Christopher Winestock, Abdel-rahman Mohamed, Ruhi Sarikaya