Growing up in Estill, South Carolina, De’Aira Bryant didn’t know she was interested in computer science until she was persuaded to explore the field by her mother, who noted that computer scientists have good career prospects and get to do interesting work.

“I was handy with making flyers and doing the programs for church, that type of thing,” Bryant says. “She somehow convinced me that was computer science and I had no way to know better.”

In her first class as a computer science major at the University of South Carolina (UofSC), she realized that she didn’t really know what computer science entailed. “I was completely out of my league, coming from a small town with no computer science or robotics background at all.”

Bryant immediately wanted to change her major, but Karina Liles — the graduate teaching assistant and the only female TA in the program at that time — convinced her to stay. “We were doing that ‘Hello, World!’ program and I was like: Do you want me to type it on Word? What do you mean, I'm writing a program?” Bryant remembers Liles looked at her in astonishment and set out to help her.

After the initial shock, Bryant started to thrive.

“It actually worked out for me, because I've always been really good at math; I also got a minor in math. And later I realized that what I actually like is logic, which was perfect for a computer science student at UofSC, because a lot of courses focused on the principles of logic.”

It turned out her mother was right after all.

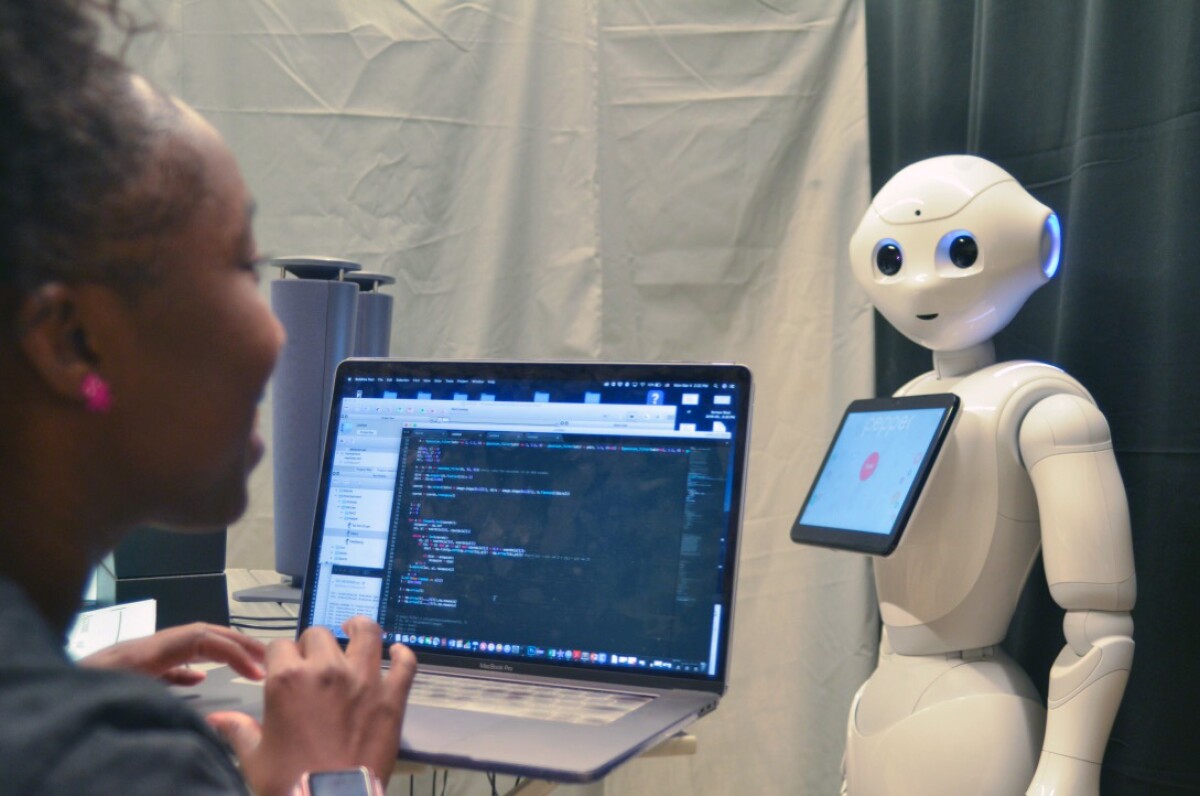

Today, she’s a fourth-year computer science PhD student at the Georgia Institute of Technology, where her research focuses on the application of robotics in health care and rehabilitation. Over the years, Bryant has received research awards, given a TEDx Talk, and even programmed a robot that starred in a movie. Having recently completed her second internship at Amazon Web Services (AWS), she still finds time to think about fun and exciting ways to make computer science more accessible to diverse populations.

Making robots dance (and act)

Right after her first class, Bryant was invited by Liles, the TA, to do an internship at the Assistive Robotics and Technology Lab (ART Lab), headed by Jenay Beer, who was Liles’ advisor at the time and also played a crucial role in Bryant’s education at UofSC. (Currently, Liles is a professor at Claflin University and Beer is a professor at the University of Georgia.) Bryant didn’t think twice before accepting.

“I have my own desk, and I’m getting paid? Sign me up! What better job could there be?” she remembers thinking. She worked on designing systems for children in schools that did not have computer science curriculums, using robots as a method of engagement and exposure.

Initially, she would prepare the robots for studies, take them into the field, and watch kids interact with them. Later, she got to take crash courses to learn how to program them. “I don't think I was interested in robotics until I got to see how they were used, their application in the real world,” she says. The fact that she loved seeing them in action made her want to learn how to make them work.

As an undergrad, she started to program these robots to do short dance moves. She posted those clips to her social media, which piqued the curiosity of kids who followed her.

“I thought, ‘I'm going to trick them into asking more questions and I'm going to recruit more computer scientists by posting robots dancing,’” she says. “That kind of turned into a thing. Now I have a whole social media presence on making robots dance and do cool stuff.”

Bryant is deeply interested in changing the way computer science is taught.

“From a culturally relevant perspective, a lot of the ways that we teach these concepts can miss the mark with a lot of students, especially students who come from minority backgrounds.” She says that throughout her computer science curriculum, a lot of the examples and problems proposed by the professors were not relevant to her. “I would completely rewrite the problem and that was how I was able to make it through my undergrad and graduate education.”

Currently, her main research at the Georgia Institute of Technology focuses on the applications of robotics in rehabilitation for children who have motor and cognitive disabilities.

“That kind of attracted me and now we have more robots and more resources and we’re linked with rehabilitative therapy centers in Atlanta and getting to work in those places as well,” she says.

Bryant still uses the expertise she acquired with the dancing robots. When HBO Max was filming the movie Superintelligence on Georgia Tech’s campus in 2019 and wanted to add cool futuristic robot scenes, Bryant’s advisor, Ayanna Howard, who today is dean and professor in the College of Engineering at Ohio State University, said Bryant would be the right person for the job.

She had two weeks to prepare.

By the time she got to the set, the script had changed, and she ended up having to redo the work there. “I was programming in real time. And I think the movie people were so excited about that. They were standing over my shoulders saying, ‘You’re actually coding.’” Bryant got to meet Melissa McCarthy, the star of the movie, and teach her kids how to make the robot move. “They all wanted pictures with the robot. I felt like my robot was the biggest star on the set.”

Interning at Amazon

Bryant then met Nashlie Sephus, a machine learning technology evangelist for AWS, at the National GEM Consortium Fellowship conference in 2019 (Bryant is a current GEM fellow and Sephus is an alum). After Bryant presented her research during a competition, Sephus approached her. “She said, ‘The work you're doing is very similar to what my team is doing at Amazon, and I think it would be really awesome if you came to work with us,’” Bryant recalls.

Sephus focuses on fairness and identifying biases in artificial intelligence, areas that Bryant was beginning to explore. She applied to the 2020 summer internship, went through the interview process, and got to work directly with Sephus.

During Bryant’s first AWS internship, she worked on bias auditing of services that estimate the expression of faces in images, an active area of research within academia and industry. In Bryant’s robotics healthcare research at Georgia Tech, the robots utilize emotion estimation to help identify what the patient they're working with is feeling in order to inform what they should do or say next.

This summer, during her second AWS internship, Bryant researched potential improvements to the way the emotion expressed on a person’s face is estimated. Other research within Amazon on emotion estimation entails determining the physical appearance of a person's face, not the person’s internal emotional state. Currently, researchers generally train machine learning models for this type of estimation by annotating numerous face images, each labeled with a single emotion: happiness, sadness, surprise, disgust, or anger.

“We see that a lot of people disagree in their interpretations of the expressions on some faces. And what normally happens if a face has too many people disagreeing on the emotion it is expressing is that we throw it out of the dataset. We say it's not a good way to teach our models about emotion,” Bryant says. She thinks that maybe that’s exactly what the system should be learning. “We should be teaching it ambiguity just as much as we are teaching it about things of which we are absolutely sure.”

To that end, the team she was on explored letting people rate a series of emotions on a scale for each image, instead of labeling it with a single emotion. “Instead of throwing out the images, we can model that into a distribution that tells us: most people see this image as happy, but there is a significant amount of people who also see it as surprise.”
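The contrast between the two labeling approaches can be illustrated with a short Python sketch. It is not the team's actual method: the function names, the 0-to-5 rating scale, the 80% agreement threshold, and the simple sum-and-normalize aggregation are all assumptions made for illustration.

```python
from collections import Counter

# The five emotion labels mentioned in the article.
EMOTIONS = ("happiness", "sadness", "surprise", "disgust", "anger")


def majority_label(votes, min_agreement=0.8):
    """Single-label baseline (hypothetical): return the most common label,
    or None when agreement is below the assumed threshold, meaning the
    image would be thrown out of the dataset."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= min_agreement else None


def rating_distribution(ratings):
    """Scale-based alternative (hypothetical): sum each annotator's
    per-emotion ratings and normalize so the values add up to 1."""
    totals = {emotion: 0.0 for emotion in EMOTIONS}
    for rating in ratings:
        for emotion in EMOTIONS:
            totals[emotion] += rating.get(emotion, 0)
    grand_total = sum(totals.values())
    if grand_total == 0:
        # No signal at all: fall back to a uniform distribution (assumption).
        return {emotion: 1.0 / len(EMOTIONS) for emotion in EMOTIONS}
    return {emotion: totals[emotion] / grand_total for emotion in EMOTIONS}


# Five annotators pick one label each: 60% agreement is below the
# assumed threshold, so the single-label approach discards the image.
votes = ["happiness", "happiness", "happiness", "surprise", "surprise"]
print(majority_label(votes))

# Three annotators rate the same image on an assumed 0-5 scale per emotion.
ratings = [
    {"happiness": 4, "surprise": 1},
    {"happiness": 3, "surprise": 3},
    {"happiness": 5},
]
print(rating_distribution(ratings))
```

With these made-up ratings, the ambiguous image stays in the dataset and its label becomes a distribution weighted mostly toward happiness, with a smaller share for surprise, rather than a single forced choice.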

Even after the end of her internship, Bryant continues to work with her team to write a paper to describe some of the work they did over the last two summers.

“It's been a big project, but we have enough now that we're ready to put out a paper. So, I'm excited about that.”

Bryant recently got a return offer to come back to Amazon next summer, possibly to work on a partnership between Sephus’s team and the robotics team. “I haven't done anything with robotics at Amazon yet so I would actually love to see what they're doing over there, so the offer is very appealing.”

What robots should look like

Another area of research for Bryant is understanding how people conceptualize a robot based on its perceived abilities. There is an ongoing debate in robotics circles about whether developing humanoid robots is a good thing. Among other aspects, the controversy has to do with the fact that they are expensive to build and deploy.

“A lot of people are questioning: ‘Do we even really need to be designing humanoids?’” she says.

Bryant, along with colleagues at Georgia Tech who are interested in robots that are capable of perceiving emotions, designed an experiment to investigate how people imagine a robot’s appearance based on what it can do. The study’s participants worked on an emotion annotation activity with the assistance of an expert artificial intelligence system that followed a set of rules. The participants were told that “a robot is available to assist you in completing each task using its newly developed computer vision algorithm.”

But the researchers did not tell them what the robot looked like. The robot’s predictions were provided via text. At the end of the study, participants were asked to describe how they envisioned it in their heads. Half of the participants envisioned the robot with human-like qualities, such as a head, arms, legs, and the ability to walk.

For that work, described in the article “The Effect of Conceptual Embodiment on Human-Robot Trust During a Youth Emotion Classification Task,” Bryant and her colleagues won the best paper award at the IEEE International Conference on Advanced Robotics and its Social Impacts (ARSO 2021).

The goal of the research: investigate factors that influence human-robot trust when the embodiment of the robot is left for the user to conceptualize.

“In that paper, we presented the method of trying to gauge how humans expect a robot to look based on what it can do. That was one of the contributions,” says Bryant. The other contribution: demonstrate that it can be beneficial for a robot to look a certain way depending on its function. The study found that the participants who imagined the robot with human-like characteristics reported higher levels of trust than those who did not.

“For the robots that are emotionally perceptive, if we fail to meet the expectations of most people, then we could already be losing some of the effect that we intend to have,” says Bryant. “People expect that a robot that can perceive emotions will be human-like and if we don't design robots in that way, people could be less willing to depend on that robot.”

Future career plans

Bryant says that her long-term career plans are constantly changing. She was set on being a professor, but her experience at Amazon has redefined what industry research is for her. “On the last team I was on, I was actually working with a lot of professors. And I think it’s so cool to have the ability to bridge that gap.”

When she was about to start her first AWS internship, she expected she would be given a project, a few tasks, a deadline to complete them, and wouldn’t have a lot of say in that. “But when I first got there I actually did have a lot of say. They were interested in what I was doing at Georgia Tech, they wanted to know more about my research and made a strong effort to make the internship experience mine,” she says.

One of her ideas of a perfect job is being an Amazon Scholar. “I would get to work with students in a university and still work with Amazon. That is the perfect goal.”