One of the ways Amazon continues to work towards its mission of being the “Earth’s most customer-centric company” is through a commitment to world-class customer service. To help our customer service agents provide support in new regions and with new customers, we’ve begun testing two neural-network-based systems, one that can handle common customer service requests automatically and one that helps customer service agents respond to customers even more easily.

Most text-based online customer service systems feature automated agents that can handle simple requests. Typically, these agents are governed by rules, rather like flow charts that specify responses to particular customer inputs. If the automated agent can’t handle a request, it refers the request to a human customer service representative.

On amazon.com, we’ve started phasing in automated agents that use neural networks rather than rules. These agents can handle a broader range of interactions with better results, allowing our customer service representatives to focus on tasks that depend more on human judgment.

In randomized trials, we’ve been comparing the new neural agents to our existing rule-based systems, using a metric called automation rate. Automation rate combines two factors: whether the automated agent successfully completes a transaction (without referring it to a customer service representative) and whether the customer contacts customer service a second time within 24 hours. According to that metric, the new agents significantly outperform the old ones.
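The article does not give the exact formula for automation rate. The sketch below shows one plausible reading, assuming a contact counts as "automated" only if the agent finished it without a transfer *and* the same customer did not contact customer service again within 24 hours. The `Contact` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Contact:
    # Hypothetical record of one contact handled by an automated agent.
    customer_id: str
    start: datetime
    transferred_to_human: bool


# Repeat-contact window stated in the article.
REPEAT_WINDOW = timedelta(hours=24)


def automation_rate(contacts: list[Contact]) -> float:
    """Fraction of contacts that were completed without a transfer and were
    not followed by another contact from the same customer within 24 hours.
    Assumes every contact made by each customer appears in `contacts`."""
    if not contacts:
        return 0.0
    # Index all contact start times by customer to look for repeat contacts.
    starts_by_customer: dict[str, list[datetime]] = {}
    for c in contacts:
        starts_by_customer.setdefault(c.customer_id, []).append(c.start)

    automated = 0
    for c in contacts:
        if c.transferred_to_human:
            continue  # referred to a representative: not automated
        repeated = any(
            c.start < t <= c.start + REPEAT_WINDOW
            for t in starts_by_customer[c.customer_id]
        )
        if not repeated:
            automated += 1
    return automated / len(contacts)
```

Under this reading, a contact that looks resolved but is followed by a second contact from the same customer three hours later counts against the agent, which is the point of including the repeat-contact factor.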

At the same time that we’re testing a customer-facing neural agent, we’re also testing a variation on the system that suggests possible responses to our customer service representatives, saving them time.

Nuts 'n' bolts

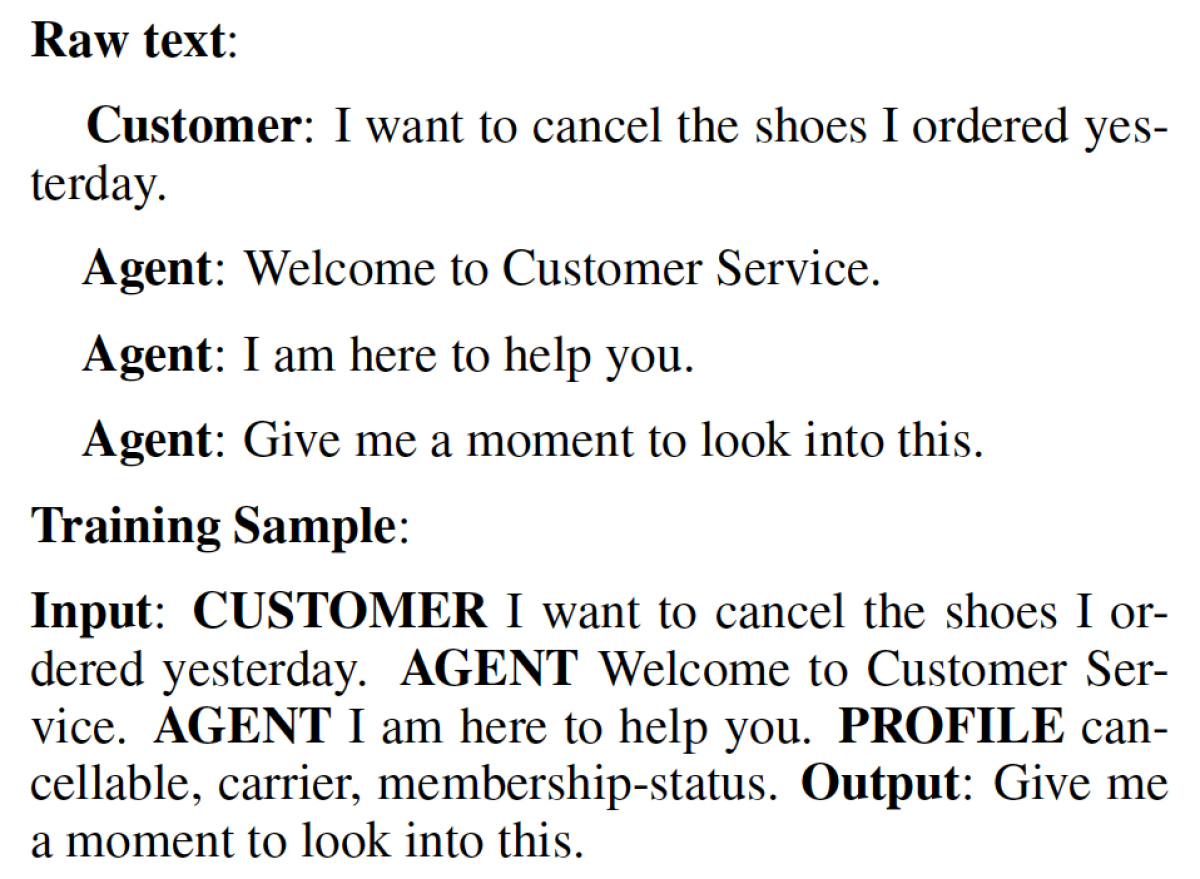

We described the basic principles behind both agents in a paper we presented last year at the annual meeting of the North American Chapter of the Association for Computational Linguistics (NAACL). In that paper, we compare two approaches. One uses a neural network to generate responses to customer utterances from scratch, and the other uses a neural network to choose among hand-authored response templates.

In our internal system, we’re testing both approaches. In the customer-facing system, we’re using the template ranker, which allows us to control the automated agent’s vocabulary. But as we test and refine the generative model internally, we plan to begin introducing it into the customer-facing system as well.

The templates in the template ranker are general forms of sentences, with variables for things like product names, dates, delivery times, and prices. In the natural-language-understanding literature, those variables are referred to as slots and their values as slot values.
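To make the slot terminology concrete, here is a minimal sketch of templates with slots and how slot values fill them. The `{brace}` placeholder syntax and the template wording are assumptions for illustration; the article does not describe Amazon's actual template format.

```python
from string import Formatter

# Hypothetical response templates; {braces} mark slots.
TEMPLATES = [
    "Your refund of {price} for {product_name} was issued on {date}.",
    "Your order of {product_name} has been cancelled.",
]


def slots_in(template: str) -> set[str]:
    """Return the slot names a template expects."""
    # Formatter.parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text.
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def fill(template: str, slot_values: dict[str, str]) -> str:
    """Substitute slot values into a template; raise if any slot is unfilled."""
    missing = slots_in(template) - set(slot_values)
    if missing:
        raise KeyError(f"unfilled slots: {sorted(missing)}")
    return template.format(**slot_values)
```

For example, `fill(TEMPLATES[1], {"product_name": "a kettle"})` yields "Your order of a kettle has been cancelled." Checking for unfilled slots before formatting keeps the agent from sending a half-completed sentence.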

Rule-based systems use templates too, but the neural template ranker has an advantage because it can incorporate new templates with little additional work. That’s because the template ranker — like the generative model — is pretrained on a large data set of interactions between customers and customer service representatives. The template ranker has seen many responses that do not fit its templates, so it has learned general principles for ranking arbitrary sentences. Adding a new template to a rule-based system, on the other hand, requires a researcher or team of researchers to reconceive and redesign the dialogue structure (the “flow chart”).
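The practical difference can be sketched as follows: because a ranker scores *any* candidate sentence against the dialogue context, adding a template means appending it to the candidate pool, with no change to dialogue logic. The scorer here is a deliberately simple stand-in (cosine similarity of word counts), not the pretrained neural network the article describes; the template texts are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))


def score(context: str, candidate: str) -> float:
    """Stand-in scorer: cosine similarity of word counts.
    A trained neural ranker would replace this function."""
    a, b = bag_of_words(context), bag_of_words(candidate)
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank(context: str, candidates: list[str]) -> list[str]:
    """Order candidate responses from most to least suitable."""
    return sorted(candidates, key=lambda c: score(context, c), reverse=True)


templates = ["Your refund has been issued.", "Your order has been cancelled."]
# Adding a template is just extending the pool; no flow chart is redesigned.
templates.append("Your replacement has shipped.")
```

With a rule-based system, the new "replacement" response would also need a new branch in the flow chart saying when to use it; here the scorer decides.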

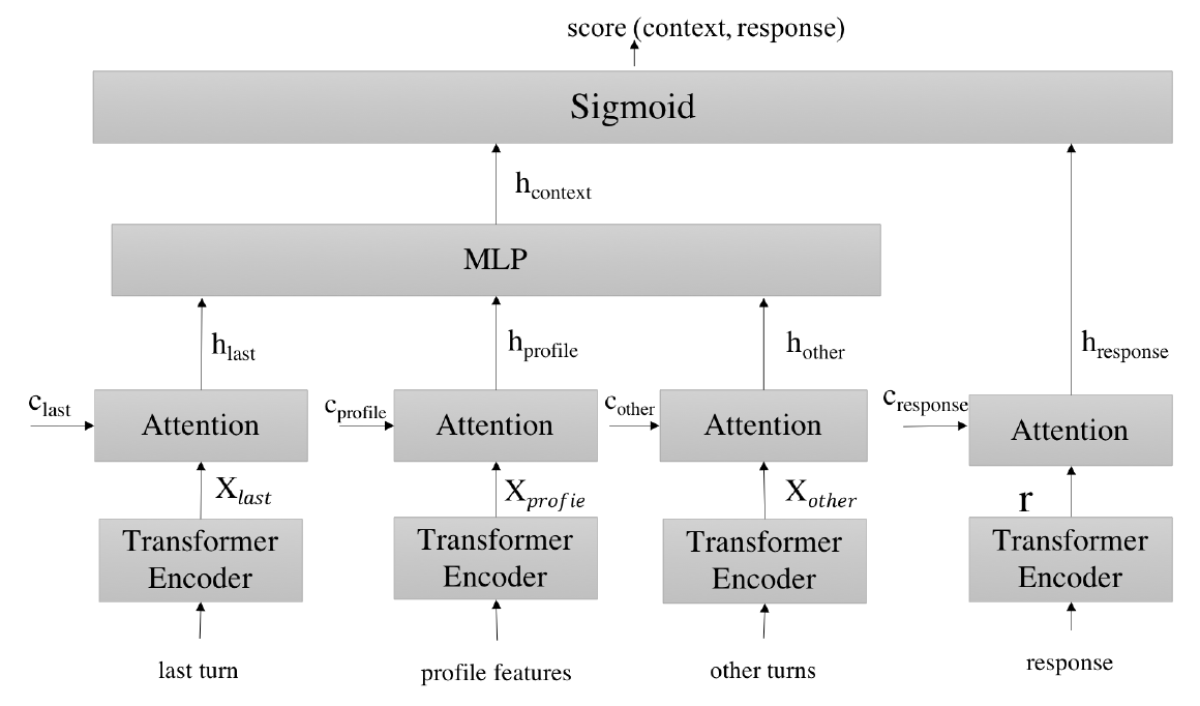

The two neural models we describe in our NAACL paper have different structures, but in determining how to respond to a given customer utterance, they both factor in the content of the preceding turns of dialogue (known as the dialogue context).

We trained separate versions of each model for two types of interactions: requests for the status of return refunds and order cancellations. As input, the order cancellation model receives not only the dialogue context but also some information about the customer’s account profile, information that might be useful to a human agent, too.
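A minimal sketch of how inputs might be assembled for the two task-specific models. The task names, the profile fields, and the 10-turn context window are all hypothetical; the article says only that the cancellation model also sees some account-profile information.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    speaker: str  # "customer" or "agent"
    text: str


@dataclass
class ModelInput:
    context: list[Turn]  # preceding turns of dialogue
    profile: dict[str, float] = field(default_factory=dict)


# Hypothetical profile fields; the article does not list the real ones.
PROFILE_FIELDS = ("num_open_orders", "days_since_last_order")


def build_input(task: str, turns: list[Turn], account: dict[str, float],
                max_turns: int = 10) -> ModelInput:
    """Assemble the input for the model trained on `task`."""
    context = turns[-max_turns:]  # keep only the most recent turns
    if task == "order_cancellation":
        # Missing profile values default to 0.0 in this sketch.
        profile = {k: account.get(k, 0.0) for k in PROFILE_FIELDS}
        return ModelInput(context, profile)
    if task == "refund_status":
        return ModelInput(context)  # context only, no profile features
    raise ValueError(f"no model trained for task {task!r}")
```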

In addition to the context and the profile information, the template ranker receives a candidate response as input. It also uses an attention mechanism to determine which words in which previous utterances are particularly useful for ranking the response.
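The role of attention can be illustrated with a toy, pure-Python sketch: the candidate response acts as a query, every word in the previous utterances acts as a key, and softmax weights indicate which context words matter most for that candidate. The hash-derived word vectors, the additive profile term, and all function names are assumptions for illustration; a real model learns its embeddings and combination weights, and the article does not specify the architecture at this level.

```python
import hashlib
import math

DIM = 8  # toy embedding size


def embed(word: str) -> list[float]:
    """Hypothetical fixed word vector derived from a hash; a real model learns these."""
    digest = hashlib.sha256(word.encode()).digest()
    return [(b - 127.5) / 127.5 for b in digest[:DIM]]


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def score(context_turns: list[str], candidate: str,
          profile: dict[str, float], profile_weights: dict[str, float]):
    """Return (score, attention weights over context words)."""
    cand_vecs = [embed(w) for w in candidate.lower().split()]
    if not cand_vecs:
        raise ValueError("empty candidate")
    # Query: mean of the candidate's word vectors.
    query = [sum(col) / len(cand_vecs) for col in zip(*cand_vecs)]
    # Keys: one vector per word across all previous utterances.
    keys = [embed(w) for turn in context_turns for w in turn.lower().split()]
    if not keys:
        raise ValueError("empty dialogue context")
    # Scaled dot-product attention weights over context words.
    weights = softmax([dot(query, k) / math.sqrt(DIM) for k in keys])
    attended = [sum(a * k[i] for a, k in zip(weights, keys)) for i in range(DIM)]
    # Profile features enter as a simple weighted sum in this sketch.
    profile_term = sum(profile_weights.get(f, 0.0) * v for f, v in profile.items())
    return dot(query, attended) + profile_term, weights
```

Returning the weights alongside the score makes it possible to inspect which context words a given candidate attended to.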

It is difficult to determine what types of conversational models other customer service systems are running, but we are unaware of any announced deployments of end-to-end, neural-network-based dialogue models like ours. And we are working continually to expand the breadth and complexity of the conversations our models can engage in, to make customer service queries as efficient as possible for our customers.