Customer-obsessed science

Research areas

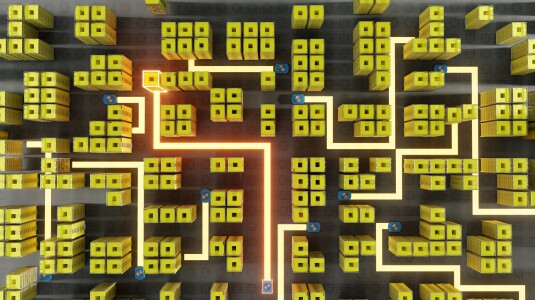

August 11, 2025: Trained on millions of hours of data from Amazon fulfillment centers and sortation centers, Amazon’s new DeepFleet models predict future traffic patterns for fleets of mobile robots.

Featured news

- 2024: Deep learning-based Natural Language Processing (NLP) models are vulnerable to adversarial attacks, where small perturbations can cause a model to misclassify. Adversarial Training (AT) is often used to increase model robustness. However, we have discovered an intriguing phenomenon: deliberately or accidentally miscalibrating models masks gradients in a way that interferes with adversarial attack search

- ACL Findings 2024: Large language models (LLMs) have demonstrated remarkable open-domain capabilities. LLMs tailored for a domain are typically trained entirely on a domain corpus to excel at handling domain-specific tasks. In this work, we explore an alternative strategy of continual pre-training as a means to develop domain-specific LLMs over an existing open-domain LLM. We introduce FinPythia-6.9B, developed through domain-adaptive

- EDM 2024: Bayesian Knowledge Tracing (BKT) is a probabilistic model of a learner’s state of mastery for a knowledge component. The learner’s state is a “hidden” binary variable updated based on the correctness of the learner’s responses to questions corresponding to that knowledge component. The parameters used for this update are inferred/learned from historical ground truth data. For this, BKT is often represented

- 2024: An important requirement for the reliable deployment of pre-trained large language models (LLMs) is the well-calibrated quantification of the uncertainty in their outputs. While the likelihood of predicting the next token is a practical surrogate of the data uncertainty learned during training, model uncertainty is challenging to estimate, i.e., due to lack of knowledge acquired during training. Prior efforts

- 2024: In many real-world applications, it is hard to provide a reward signal in each step of a Reinforcement Learning (RL) process and more natural to give feedback when an episode ends. To this end, we study the recently proposed model of RL with Aggregate Bandit Feedback (RL-ABF), where the agent only observes the sum of rewards at the end of an episode instead of each reward individually. Prior work studied

Academia

Whether you're a faculty member or student, there are a number of ways you can engage with Amazon.
