Customer-obsessed science

Research areas

-

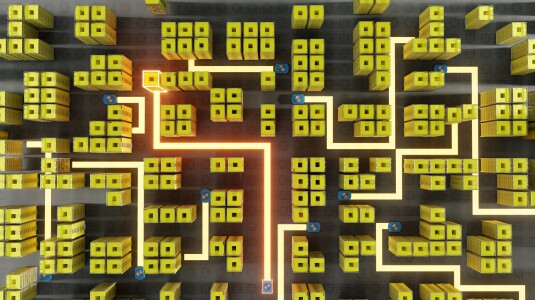

August 11, 2025Trained on millions of hours of data from Amazon fulfillment centers and sortation centers, Amazon’s new DeepFleet models predict future traffic patterns for fleets of mobile robots.


Featured news

- 2025: Customized video editing aims to substitute the object in a given source video with a target object from reference images (Fig. 1). Existing approaches often rely on fine-tuning pre-trained models to learn the appearance of the objects in the reference images, as well as the temporal information from the source video. However, these methods are not scalable, as fine-tuning is required for each source

- Large language model (LLM) pruning seeks to remove unimportant weights to speed up inference with minimal impact on accuracy. However, existing methods often suffer from accuracy degradation without full-model sparsity-aware fine-tuning. This paper presents Wanda++, a novel pruning framework that outperforms state-of-the-art methods by utilizing decoder-block-level regional gradients. Specifically, Wanda

- UAI 2025: Continual learning (CL) has gained increasing interest in recent years due to the need for models that can continuously learn new tasks while retaining knowledge from previous ones. However, existing CL methods often require either computationally expensive layer-wise gradient projections or large-scale storage of past task data, making them impractical for resource-constrained scenarios. To address these

- ISWC 2025: In large-scale maintenance organizations, identifying subject matter experts and managing communications across complex entity relationships pose significant challenges that traditional communication approaches fail to address effectively, including information overload and longer response times. We propose a novel framework that combines RDF graph databases with LLMs to process natural language queries

- Large Language Models (LLMs) are increasingly deployed in interactive systems where understanding user intent precisely is paramount. A key capability for such systems is effective question clarification, especially when user queries are ambiguous or underspecified. This paper introduces a novel tri-agent framework for the robust evaluation of an LLM’s ability to engage in clarifying dialogue. Our framework

Academia

Whether you're a faculty member or student, there are a number of ways you can engage with Amazon.
