Today Amazon Web Services (AWS) announced it is providing a $700,000 gift to the University of Pennsylvania School of Engineering and Applied Science to support research on fair and trustworthy AI. The funds will be distributed to 10 PhD engineering students who are conducting research in that area.

The students are conducting their research under the auspices of the ASSET (AI-Enabled Systems: Safe, Explainable and Trustworthy) Center, part of Penn Engineering’s Innovation in Data Engineering and Science (IDEAS) Initiative. ASSET’s mission is to advance “science and tools for developing AI-enabled data-driven engineering systems so that designers can guarantee that they are doing what they designed them to do and users can trust them to do what they expect them to do.”

“The ASSET Center is proud to receive Amazon’s support for these doctoral students working to ensure that systems relying on artificial intelligence are trustworthy,” said Rajeev Alur, the director of ASSET and the Zisman Family Professor in the Department of Computer and Information Science (CIS). “Penn’s interdisciplinary research teams lead the way in answering the core questions that will define the future of AI and its acceptance by society. How do we make sure that AI-enabled systems are safe? How can we give assurances and guarantees against harm? How should decisions made by AI be explained in ways that are understandable to stakeholders? How must AI algorithms be engineered to address concerns about privacy, fairness, and bias?”

“It’s great to collaborate with Penn on such important topics as trust, safety and interpretability,” said Stefano Soatto, vice president of applied science for AWS Artificial Intelligence (AI). “These are key to the long-term beneficial impact of AI, and Penn holds a leadership position in this area. I look forward to seeing the students’ work in action in the real world.”

The funded research projects center on the following themes: machine learning algorithms with guarantees of fairness, privacy, robustness, and safety; analysis of AI-enabled systems for assurance; explainability and interpretability; neurosymbolic learning; and human-centric design.

“This gift from AWS comes at an important time for research in responsible AI,” said Michael Kearns, an Amazon Scholar and the National Center Professor of Management & Technology. “Our students are hard at work creating the knowledge that industry requires for commercial technologies that will define so much of our lives, and it’s essential to invest in talented researchers focused on technically rigorous and socially engaged ways to use AI to our advantage.”

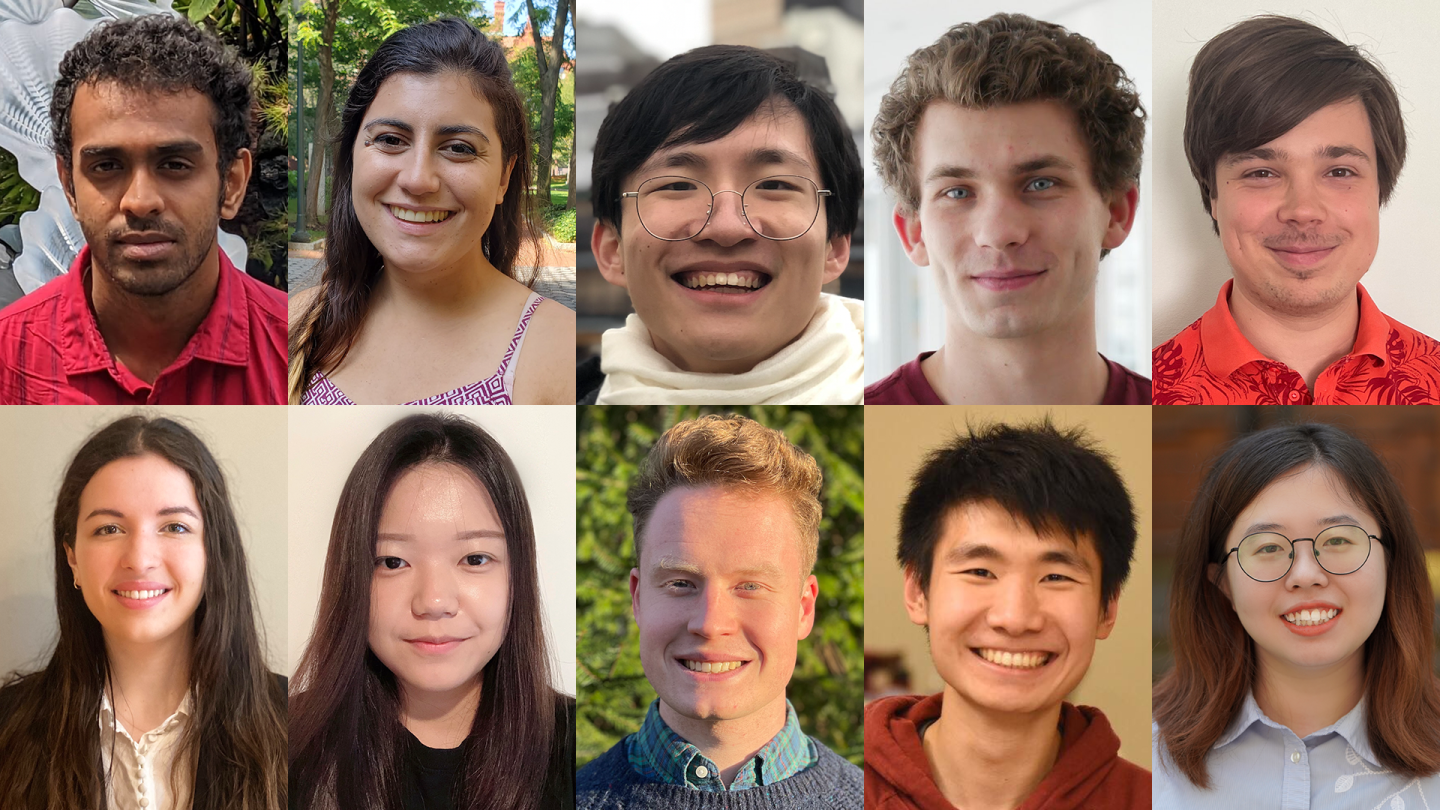

Below are the 10 students receiving funding and details on their research.

- Eshwar Ram Arunachaleswaran is a second-year PhD student, advised by Sampath Kannan, the Henry Salvatori Professor in the Department of Computer and Information Science, and Anindya De, assistant professor of computer science. Arunachaleswaran’s research focuses on notions of fairness and on fair algorithms for settings in which individuals are classified by a network of classifiers, possibly with feedback.

- Natalie Collina is a second-year PhD student, advised by Kearns and Aaron Roth, the Henry Salvatori Professor of Computer and Cognitive Science, who, like Kearns, is an Amazon Scholar. Collina is investigating models for data markets, in which a seller might choose to add noise to query answers for both privacy and revenue purposes. Her goal is to put the study of markets for data on firm algorithmic and microeconomic foundations.

- Ziyang Li is a fourth-year PhD student, advised by Mayur Naik, professor of computer science. Li is developing Scallop, a programming language and open-source framework for building neurosymbolic AI applications. Li sees neurosymbolic AI as an emerging paradigm that seeks to integrate deep learning and classical algorithms in order to leverage the best of both worlds.

- Stephen Mell is a fourth-year PhD student, advised by Osbert Bastani, an assistant professor in CIS, and Steve Zdancewic, Schlein Family President's Distinguished Professor and associate chair of CIS. Mell is studying how to make machine learning algorithms more robust and data-efficient by leveraging neurosymbolic techniques. His goal is to design algorithms that can learn from just a handful of examples in safety-critical settings.

- Georgy Noarov is a third-year PhD student, advised by Kearns and Roth. Noarov is studying methods for quantifying the uncertainty of black-box machine learning models, including strong variants of calibration and conformal prediction.

- Artemis Panagopoulou is a second-year PhD student, advised by Chris Callison-Burch, an associate professor in CIS, and Mark Yatskar, an assistant professor in CIS. Panagopoulou is designing explainable image classification models that use large language models to generate the concepts on which classification is based. The goal of Panagopoulou's research is to produce more trustworthy AI systems by creating human-readable features that the model faithfully uses during classification.

- Jianing Qian is a third-year PhD student, advised by Dinesh Jayaraman, an assistant professor in CIS. Qian's research focuses on acquiring hierarchical, object-centric visual representations that are interpretable to humans, and on using imitation and reinforcement learning to learn structured visuomotor control policies for robots that exploit these representations.

- Alex Robey is a third-year PhD student, advised by George Pappas, the UPS Foundation Professor and chair of the Department of Electrical and Systems Engineering, and Hamed Hassani, an assistant professor in the Department of Electrical and Systems Engineering. Robey is working on deep learning that is robust to distribution shifts caused by natural variation, such as changes in lighting, background, and weather.

- Anton Xue is a fourth-year PhD student, advised by Alur. Xue’s research is focused on robustness and interpretability of deep learning. He is currently researching techniques to compare and analyze the effectiveness of methods for interpretable learning.

- Yahan Yang is a third-year PhD student, advised by Insup Lee, the Cecilia Fitler Moore Professor in the Department of Computer and Information Science and the director of the PRECISE Center in the School of Engineering and Applied Science. Yang has been researching a two-stage classification technique, called memory classifiers, that can improve the robustness of standard classifiers to distribution shifts. Her approach combines expert knowledge about the “high-level” structure of the data with standard classifiers.