Customer-obsessed science

Research areas

-

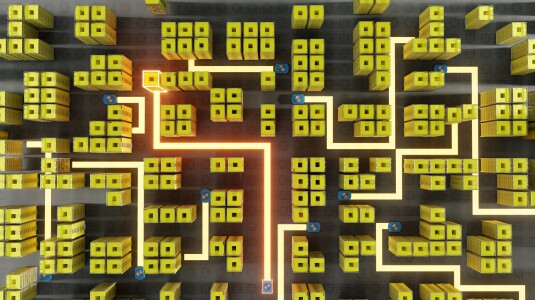

August 11, 2025

Trained on millions of hours of data from Amazon fulfillment centers and sortation centers, Amazon’s new DeepFleet models predict future traffic patterns for fleets of mobile robots.

Featured news

-

In this new LLM-world where users can ask any natural language question, the focus is on the generation of answers with reliable information while satisfying the original intent. LLMs are known to generate multiple versions of answers for the same question, some of which may be better than others. Identifying the most suitable response that adequately addresses the question is non-trivial. In order to tackle

-

2024

The question-answering (QA) capabilities of foundation models are highly sensitive to prompt variations, rendering their performance susceptible to superficial, non-meaning-altering changes. This vulnerability often stems from the model’s preference or bias towards specific input characteristics, such as option position or superficial image features in multi-modal settings. We propose to rectify this bias

-

2024

In this position paper, we argue that human evaluation of generative large language models (LLMs) should be a multidisciplinary undertaking that draws upon insights from disciplines such as user experience research and human behavioral psychology to ensure that the experimental design and results are reliable. The conclusions from these evaluations, thus, must consider factors such as usability, aesthetics

-

ACL Findings 2024, 2024

We show that content on the web is often translated into many languages, and the low quality of these multi-way translations indicates they were likely created using Machine Translation (MT). Multi-way parallel, machine generated content not only dominates the translations in lower resource languages; it also constitutes a large fraction of the total web content in those languages. We also find evidence

-

Transactions on Machine Learning Research, 2024

Model miscalibration has been frequently identified in modern deep neural networks. Recent work aims to improve model calibration directly through a differentiable calibration proxy. However, the calibration produced is often biased due to the binning mechanism. In this work, we propose to learn better-calibrated models via meta-regularization, which has two components: (1) gamma network (γ-Net), a meta

Academia

Whether you're a faculty member or student, there are a number of ways you can engage with Amazon.