In recent years, mitigating bias in machine learning models has become a major topic of research, and that’s as true in natural-language processing as in any other field.

“I think it's finally becoming obvious how important it is to deal with this, and I'm very happy to see it,” says Georgiana Dinu, an applied scientist with Amazon Web Services and an area chair at this year’s Conference on Empirical Methods in Natural Language Processing (EMNLP), which starts next week. “Leaving aside the fact that we have a duty to quantify and address bias, the problems are really difficult and fascinating in themselves.”

Dinu’s own area of research is machine translation, where, she says, the problem of quantifying bias is particularly acute. “It's incredibly difficult, because there are multiple acceptable translations for an input, and it's not easy to identify when a translation is biased and when it's a variation,” she says.

One clear area of bias in machine translation, however, is gender stereotyping when translating from a language with ungendered nouns to one with gendered nouns.

“An example of this is ‘My friend is a nurse,’” Dinu explains. “Stereotyping comes in when ‘nurse’ gets translated as female, but on the other hand, in ‘My friend is a doctor,’ ‘doctor’ is translated as male.

“At least one of the causes of this is imbalance in the training data. In machine translation, we use parallel sentences as training data, and that training data is very imbalanced with respect to gender. In Europarl, which is one of the most-used parallel corpora, only 30% of the data has female speakers. Other public datasets have close to three times as much masculine-specific data as feminine-specific data.”
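To make a claim like “three times as much masculine-specific data” concrete, here is one crude way such a ratio could be estimated on the English side of a corpus: count the sentences that contain only masculine or only feminine markers. This is a minimal sketch under loud assumptions. The word lists, the `gender_counts` helper and the toy corpus are hypothetical, and the sketch makes no claim about how the figures Dinu cites were computed.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny marker lists for English text. A real audit
# would need far richer, language-specific resources and would still miss cases.
MASCULINE = {"he", "him", "his", "himself", "man", "men"}
FEMININE = {"she", "her", "hers", "herself", "woman", "women"}


def gender_counts(sentences):
    """Count sentences that contain only masculine or only feminine markers."""
    counts = Counter()
    for sentence in sentences:
        tokens = set(re.findall(r"[a-z]+", sentence.lower()))
        has_masc = bool(tokens & MASCULINE)
        has_fem = bool(tokens & FEMININE)
        if has_masc and not has_fem:
            counts["masculine"] += 1
        elif has_fem and not has_masc:
            counts["feminine"] += 1
        # Sentences with no markers, or with both kinds, are left uncounted.
    return counts


# Toy stand-in for the English side of a parallel corpus.
corpus = [
    "He is a doctor.",
    "She is a nurse.",
    "He signed his contract.",
    "The meeting ended early.",
]
counts = gender_counts(corpus)
# Masculine-to-feminine ratio; max() guards against division by zero.
ratio = counts["masculine"] / max(counts["feminine"], 1)
print(dict(counts), ratio)
```

Word-list heuristics like this only catch explicitly gendered words; they miss gender carried by names, by context, or by grammatical agreement on the target side.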

But while gender bias in translation is a known problem, it can be difficult to resolve in specific cases.

“We sometimes have input that is ambiguous in the source language,” Dinu explains. “For these scenarios, without any additional information, we simply can't know the correct translation. In these cases, our space of solutions becomes different. We could try to rephrase the translation such that it underspecifies the gender, but that might just be impossible in some cases. Other options are to disambiguate such a sentence by context, if the customer provides it. If it's a conversation, we might be able to infer the gender of the person. Or we could expand translation to allow customers to tell us the desired gender in ambiguous cases.”

Anti-stereotypical translation

Even in unambiguous cases, however, translation models may be so biased that they still produce erroneous translations.

“Models go to great lengths just to avoid generating anti-stereotypical outputs,” Dinu says. “It's really unbelievable, sometimes, what we see. If you try to translate a sentence such as ‘My sister takes pride in being a great surgeon,’ in certain languages, the model will change the meaning of the sentence to basically mean, ‘My sister takes great pride in me, a man, being a great surgeon.’ In other cases, it simply generates ungrammatical output, where ‘surgeon’ is male.”

At EMNLP, Dinu and her colleagues are presenting a paper that tackles exactly this problem.

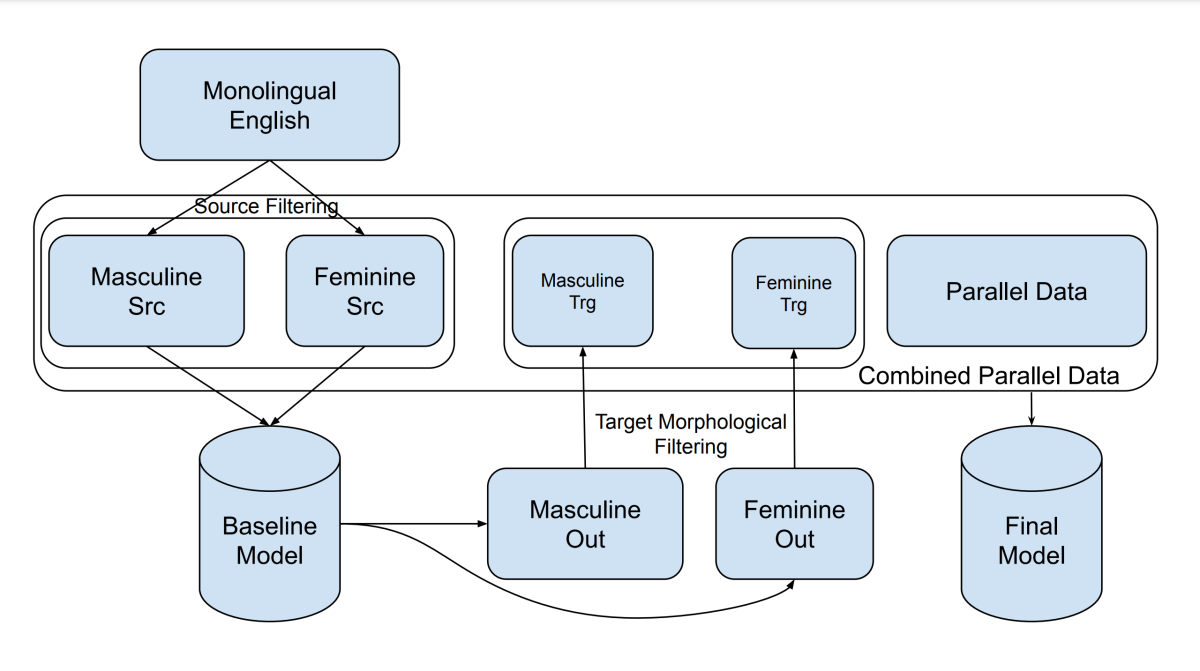

“We basically proposed data augmentation as a solution to address imbalances in the training data,” she says. “What is particularly nice about our approach is that we are using only monolingual data. It's a self-training approach, where the models themselves translate more feminine-gendered data. We have a step to remove sentences that are translated wrongly, and the resulting data is added to the training data to create more balance. In a couple of public datasets, this improved accuracy in feminine-referring sentences without degradation in masculine gender accuracy.”
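The self-training loop Dinu describes can be sketched as follows. This is a minimal illustration, not the paper's implementation: `translate` stands in for the current model and `keep_pair` for the step that removes wrongly translated sentences. These names, the toy French outputs and the crude filter are all hypothetical.

```python
from typing import Callable, Iterable

# Hypothetical interfaces: a model maps a source sentence to a translation,
# and a filter decides whether that translation kept the feminine gender.
Translate = Callable[[str], str]
KeepPair = Callable[[str, str], bool]


def augment_with_self_training(
    parallel_data: list[tuple[str, str]],
    feminine_monolingual: Iterable[str],
    translate: Translate,
    keep_pair: KeepPair,
) -> list[tuple[str, str]]:
    """Return the original pairs plus filtered, model-translated pairs."""
    synthetic = []
    for source in feminine_monolingual:
        target = translate(source)       # the model translates the new data itself
        if keep_pair(source, target):    # drop sentences that were translated wrongly
            synthetic.append((source, target))
    # Adding the synthetic pairs rebalances the data; retraining is out of scope here.
    return parallel_data + synthetic


# Toy stand-ins, for illustration only.
toy_outputs = {
    "My sister is a surgeon.": "Ma sœur est chirurgienne.",
    "She is a doctor.": "Il est médecin.",  # wrong gender, should be filtered out
}


def toy_translate(source: str) -> str:
    return toy_outputs[source]


def toy_keep(source: str, target: str) -> bool:
    # Crude hypothetical check: reject outputs that open with a masculine pronoun.
    return not target.startswith("Il ")


base = [("Hello.", "Bonjour.")]
augmented = augment_with_self_training(
    base, ["My sister is a surgeon.", "She is a doctor."], toy_translate, toy_keep
)
print(augmented)
```

The filter is the part that matters most in practice: without it, the model's own stereotyped errors would be fed back into its training data.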

But even for this relatively narrow subset of machine translation problems, Dinu says, much work remains to be done.

“In this work, we consider only two genders, masculine and feminine,” she says. “Obviously, we need to expand this to other underrepresented genders. And there are other types of bias that can occur in machine translation. There are many forms of representational bias, where, basically, you have lower quality for one group, in a protected class, versus another group. For example, if we have a sentence such as ‘She met her spouse while giving her French lessons’, you want that to be translated just as accurately as ‘She met her spouse while giving him French lessons.’

“Another topic is just generating denigrating and offensive language in translation. In general, we have a long way to go, because biases are expressed so diversely in language.”

Getting closer to the user

For the Conference on Machine Translation, which is a two-day EMNLP workshop, Dinu helped organize a shared task on “translation using terminologies”, in which a machine translation engine has access to a database of preferred translations for particular terms.

“To give an example, if you have something like ‘order’ in English in the retail domain, a customer might say they want it translated as ‘commande’ in French.

“These might vary from customer to customer,” Dinu says. “They might change every year, so they have a very dynamic nature. An established task in translation is how to make machine translation models comply with these terminologies.

“We released data for five language pairs in the medical domain, and we invited participants to submit models for this task. We received 43 submissions from 19 teams total.

“The solution space for this task has changed in recent years. We're finally making use of the power of machine learning models and have models that don't just translate but can also apply 'instructions' on how to translate certain phrases. So just to give an example, normally in machine translation, the input is just a sentence in English that needs to be translated into French. But now the input is a sentence with an annotation, indicating how to translate a certain term in that sentence, which is something you can retrieve automatically from your terminology database. And neural networks are so powerful that they can just learn this behavior. They learn to translate but also to apply terminology constraints.
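The annotated input Dinu describes can be illustrated with a small preprocessing step that looks up terms in a terminology database and marks them in the source sentence. This is a sketch under assumptions: the `<t>term</t><tr>translation</tr>` tag format, the `TERMBASE` dictionary and the `annotate` helper are hypothetical, and a model would only honor such annotations if it had been trained on inputs marked the same way.

```python
import re

# Hypothetical customer terminology database: source term -> required target term.
TERMBASE = {"order": "commande", "refund": "remboursement"}


def annotate(sentence: str, termbase: dict[str, str]) -> str:
    """Mark each known term with its required translation (hypothetical tag format)."""

    def _mark(match: re.Match) -> str:
        term = match.group(0)
        target = termbase[term.lower()]  # look up case-insensitively
        return f"<t>{term}</t><tr>{target}</tr>"

    # Longest terms first so multi-word entries win over their sub-terms;
    # \b restricts matches to whole words ("ordered" is not annotated).
    terms = sorted(termbase, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE
    )
    return pattern.sub(_mark, sentence)


print(annotate("Where is my order?", TERMBASE))
```

Because the lookup happens at inference time, a customer can update the terminology database every year without retraining the model, which matches the dynamic nature of terminologies described above.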

“In machine translation, we're seeing more and more the need to do things beyond translation. For example, text that contains HTML markup. Say you have an input sentence with bold markup from an HTML page. What you have here is not a simple translation task but the task of translating and correctly transferring the markup from the source into the target. Or maybe you're translating a table in a document, and you want the translated text to fit in the table.
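One way to make the markup-transfer requirement concrete is a check that a translation carries over the same tags as its source. The sketch below is only that, a validation check under simplifying assumptions (regex-based tag matching, no self-closing or void tags such as `<br>`); it does not perform the transfer itself, which is what the models Dinu describes learn to do. The helper names are hypothetical.

```python
import re

# Matches opening and closing tags, capturing the slash and the tag name.
TAG = re.compile(r"<(/?)([a-zA-Z][a-zA-Z0-9]*)[^>]*>")


def tag_sequence(text: str) -> list[str]:
    """Return tags in order, e.g. ['<b>', '</b>'], ignoring attributes."""
    return [f"<{slash}{name.lower()}>" for slash, name in TAG.findall(text)]


def is_balanced(tags: list[str]) -> bool:
    """Check that every opening tag is closed in the right nesting order."""
    stack = []
    for tag in tags:
        if tag.startswith("</"):
            if not stack or stack.pop() != tag.replace("</", "<", 1):
                return False
        else:
            stack.append(tag)
    return not stack


def markup_preserved(source: str, target: str) -> bool:
    """True if the target has the same tags as the source, properly nested.

    Tags are compared as a multiset because word order, and therefore
    tag order, can legitimately change between languages.
    """
    src_tags, tgt_tags = tag_sequence(source), tag_sequence(target)
    return sorted(src_tags) == sorted(tgt_tags) and is_balanced(tgt_tags)


src = "Click <b>Save</b> to continue."
good = "Cliquez sur <b>Enregistrer</b> pour continuer."
bad = "Cliquez sur Enregistrer pour continuer."
print(markup_preserved(src, good), markup_preserved(src, bad))
```

A check like this can confirm that tags survived translation, but not that they wrap the right words; judging that still requires alignment between source and target.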

“Ultimately, it's just getting closer to the user of the translation technology. It's really just bridging the gap between translation in its simplest form, which is what we had to address first, and what the users actually need, which is often translation integrated with something else.”