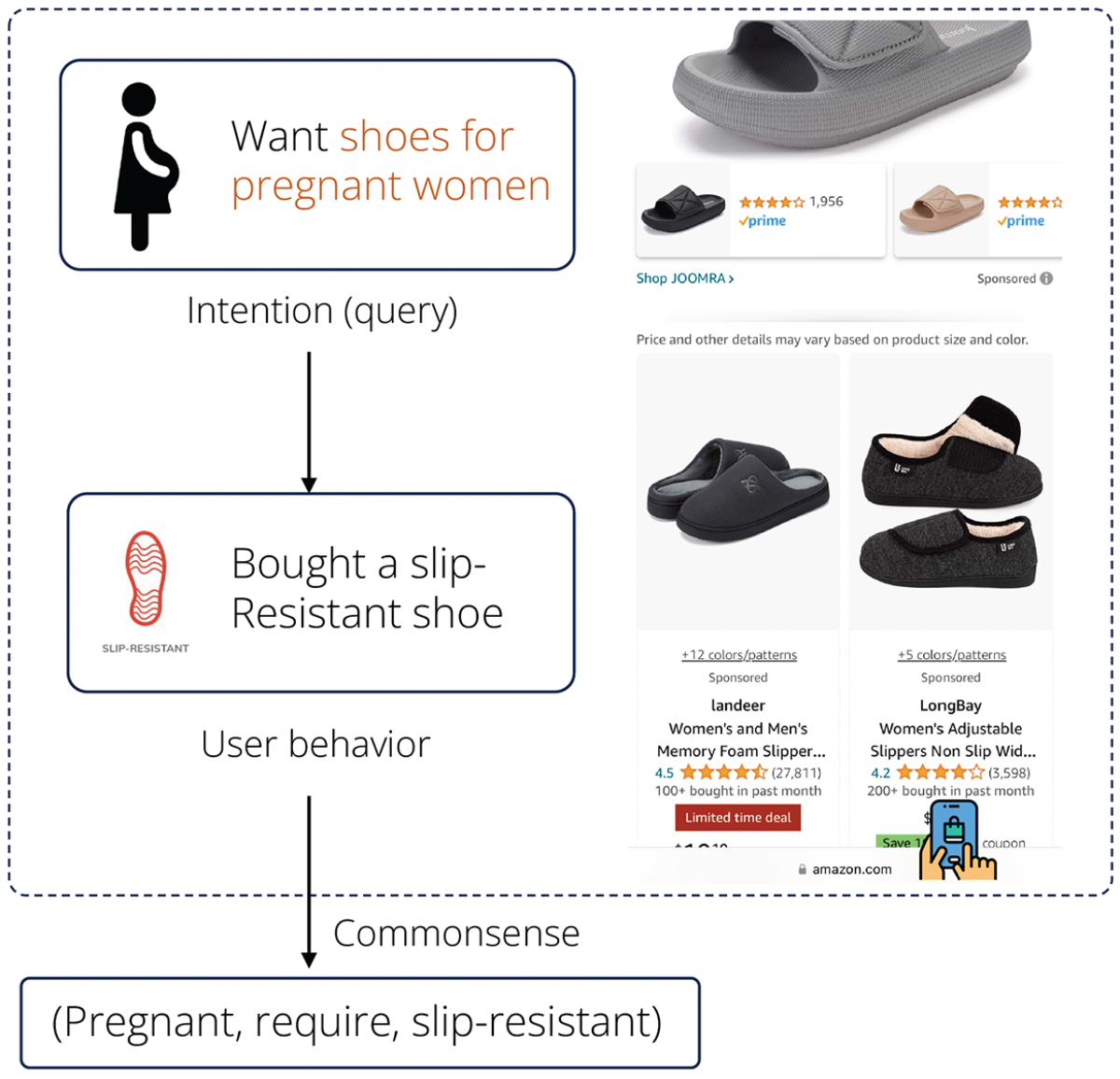

At the Amazon Store, we strive to deliver the product recommendations most relevant to customers’ queries. Often, that can require commonsense reasoning. If a customer, for instance, submits a query for “shoes for pregnant women”, the recommendation engine should be able to deduce that pregnant women might want slip-resistant shoes.

To help Amazon’s recommendation engine make these types of commonsense inferences, we’re building a knowledge graph that encodes relationships between products in the Amazon Store and the human contexts in which they play a role — their functions, their audiences, the locations in which they’re used, and the like. For instance, the knowledge graph might use the used_for_audience relationship to link slip-resistant shoes and pregnant women.

In a paper we’re presenting at the Association for Computing Machinery’s annual Conference on Management of Data (SIGMOD) in June 2024, we describe COSMO, a framework that uses large language models (LLMs) to discern the commonsense relationships implicit in customer interaction data from the Amazon Store.

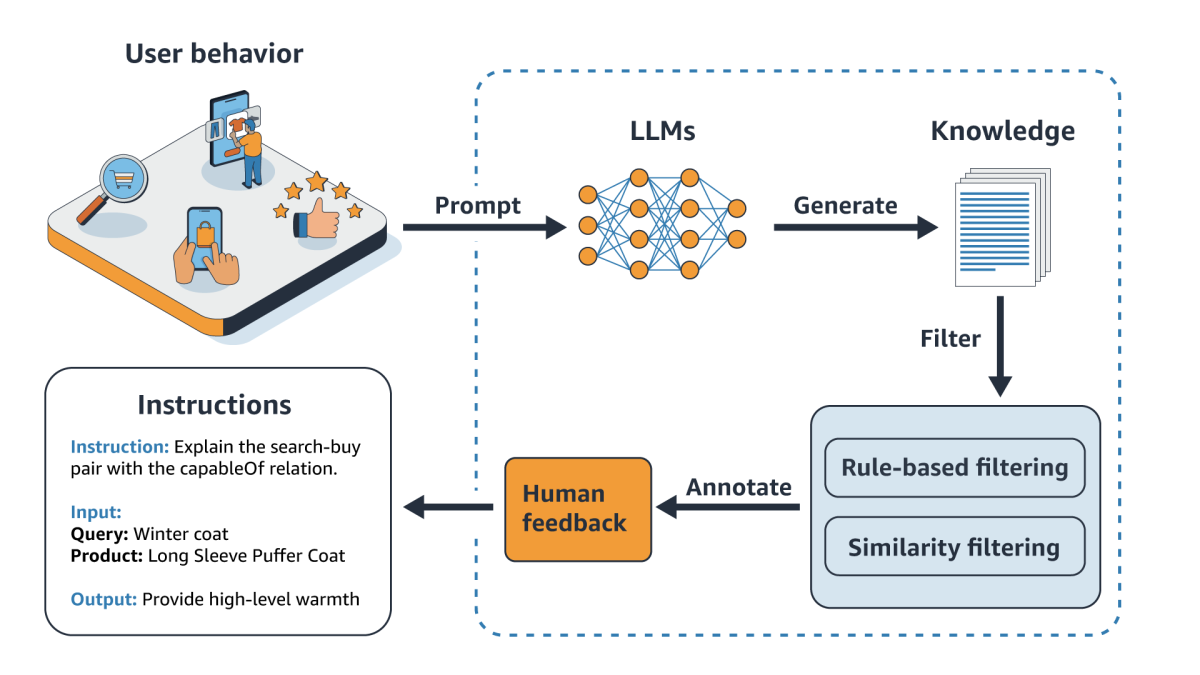

COSMO involves a recursive procedure in which an LLM generates hypotheses about the commonsense implications of query-purchase and co-purchase data; a combination of human annotation and machine learning models filters out the low-quality hypotheses; human reviewers extract guiding principles from the high-quality hypotheses; and instructions based on those principles are used to prompt the LLM.

To evaluate COSMO, we used the Shopping Queries Data Set we created for KDD Cup 2022, a competition held at the 2022 Conference on Knowledge Discovery and Data Mining (KDD). The dataset consists of queries and product listings, with the products rated according to their relevance to each query.

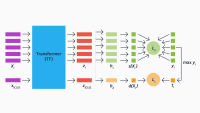

In our experiments, three models — a bi-encoder, or two-tower model; a cross-encoder, or unified model; and a cross-encoder enhanced with relationship information from the COSMO knowledge graph — were tasked with finding the products most relevant to each query. We measured performance using two different F1 scores: macro F1 is an average of F1 scores in different categories, and micro F1 is the overall F1 score, regardless of categories.
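To make the difference between the two scores concrete, here is a minimal Python sketch that computes both from a list of true and predicted labels. The function names and the toy labels in the usage note are illustrative assumptions, not part of the evaluation code used in the paper.

```python
from collections import Counter


def f1(tp, fp, fn):
    """F1 from raw counts; defined as 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_micro_f1(y_true, y_pred):
    """Return (macro F1, micro F1) for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    # Macro: average the per-category F1 scores, so each category counts equally.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro: pool the counts across categories, so each example counts equally.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return macro, micro
```

For example, `macro_micro_f1(["a", "a", "a", "b"], ["a", "a", "b", "b"])` gives per-category F1 scores of 0.8 and about 0.67, so the macro score is about 0.73 while the micro score is 0.75. Macro F1 is the more sensitive of the two to performance on rare categories.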

When the models’ encoders were fixed, so that the only difference between the cross-encoders was that one included COSMO relationships as inputs and the other didn’t, the COSMO-based model dramatically outperformed the best-performing baseline, achieving a 60% increase in macro F1 score. When the encoders were fine-tuned on a subset of the Shopping Queries Data Set, the performance of all three models improved significantly, but the COSMO-based model still held a 28% edge in macro F1 and a 22% edge in micro F1 over the best-performing baseline.

COSMO

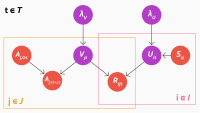

COSMO’s knowledge graph construction procedure begins with two types of data: query-purchase pairs, which combine queries with purchases made within a fixed span of time or a fixed number of clicks, and co-purchase pairs, which combine purchases made during the same shopping session. We do some initial pruning of the dataset to mitigate noise — for instance, removing co-purchase pairs in which the product categories of the purchased products are too far apart in the Amazon product graph.
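One way to picture the category-distance pruning is the sketch below. It assumes, purely for illustration, that each product has a root-to-leaf category path in a tree and that "too far apart" means more than a fixed number of edges. The function names, the example paths, and the `max_distance` threshold are all hypothetical; the actual product graph and distance measure are not described here.

```python
def category_distance(path_a, path_b):
    """Edges between two nodes of a category tree, given root-to-node paths."""
    shared = 0
    for a, b in zip(path_a, path_b):
        if a != b:
            break
        shared += 1
    # Walk up from each node to the deepest shared ancestor.
    return (len(path_a) - shared) + (len(path_b) - shared)


def prune_co_purchases(pairs, category_paths, max_distance=2):
    """Keep co-purchase pairs whose categories are at most max_distance apart."""
    return [
        (a, b)
        for a, b in pairs
        if category_distance(category_paths[a], category_paths[b]) <= max_distance
    ]


# Hypothetical category paths, for illustration only.
paths = {
    "camera case": ("Electronics", "Camera", "Accessories"),
    "screen protector": ("Electronics", "Camera", "Accessories"),
    "garden hose": ("Garden", "Watering"),
}
pairs = [("camera case", "screen protector"), ("camera case", "garden hose")]
kept = prune_co_purchases(pairs, paths)
```

Here the camera case and garden hose share no ancestor category, so that pair is treated as noise and dropped.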

We then feed the data pairs to an LLM and ask it to describe the relationships between the inputs using one of four relationships: usedFor, capableOf, isA, and cause. From the results, we cull a finer-grained set of frequently recurring relationships, which we codify using canonical formulations such as used_for_function, used_for_event, and used_for_audience. Then we repeat the process, asking the LLM to formulate its descriptions using our new, larger set of relationships.

LLMs, when given this sort of task, have a tendency to generate empty rationales, such as “customers bought them together because they like them”. So after the LLM has generated a set of candidate relationships, we apply various heuristics to winnow them down. For instance, if the LLM’s answer to our question is semantically too similar to the question itself, we filter out the question-answer pair, on the assumption that the LLM is simply paraphrasing the question.
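The paraphrase heuristic can be sketched as follows. This version uses token-level Jaccard overlap as a crude stand-in for semantic similarity, which is an assumption made to keep the example self-contained. The similarity measure, the function names, and the `max_similarity` threshold are illustrative, not the ones COSMO uses.

```python
import re


def tokens(text):
    """Lowercased alphanumeric tokens of a string, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Token-set overlap between two strings, in [0, 1]."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def drop_paraphrases(qa_pairs, max_similarity=0.6):
    """Discard question-answer pairs where the answer mostly restates the question."""
    return [(q, a) for q, a in qa_pairs if jaccard(q, a) <= max_similarity]


question = "why were the camera case and screen protector bought together"
candidates = [
    (question, "the camera case and screen protector were bought together"),
    (question, "both protect a camera from damage"),
]
kept = drop_paraphrases(candidates)
```

The first answer shares nine of ten distinct tokens with the question and is filtered out; the second introduces new information and survives. A production filter would more plausibly compare sentence embeddings than raw tokens.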

From the candidates that survive the filtering process, we select a representative subset, which we send to human annotators for assessment according to two criteria: plausibility, or whether the posited inferential relationship is reasonable, and typicality, or whether the target product is one that would commonly be associated with either the query or the source product.

Using the annotated data, we train a machine-learning-based classifier that assigns plausibility and typicality scores to the remaining candidates, and we keep only those that exceed some threshold. From those candidates we extract syntactic and semantic relationships that can be encoded as instructions to an LLM, such as “generate explanations for the search-buy behavior in the domain 𝑑 using the capableOf relation”. Then we reassess all our candidate pairs, prompting the LLM with the applicable instructions.
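The thresholding step might look like the sketch below. It assumes that a candidate must clear both thresholds and that scores lie in [0, 1]; neither detail is specified above, and the class name, field names, scores, and default thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredCandidate:
    text: str
    plausibility: float  # classifier score, assumed to lie in [0, 1]
    typicality: float    # classifier score, assumed to lie in [0, 1]


def keep_high_quality(candidates, min_plausibility=0.5, min_typicality=0.5):
    """Keep candidates whose scores exceed both thresholds (an assumed rule)."""
    return [
        c
        for c in candidates
        if c.plausibility > min_plausibility and c.typicality > min_typicality
    ]


# Made-up scores, for illustration only.
scored = [
    ScoredCandidate("capable of protecting camera", 0.92, 0.81),
    ScoredCandidate("customers like them", 0.40, 0.15),
    ScoredCandidate("used for deep-sea diving", 0.75, 0.20),
]
survivors = keep_high_quality(scored)
```

Requiring both scores to pass removes candidates that are reasonable but atypical, such as the third one above.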

The result is a set of entity-relation-entity triples, such as <co-purchase of camera case and screen protector, capableOf, protecting camera>, from which we assemble a knowledge graph.
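As a rough illustration of how such triples can be assembled into a graph, the sketch below stores them in a nested dictionary keyed by head entity and relation. The storage layout and the `build_graph` name are assumptions made for the example; the second triple restates the slip-resistant shoes example from earlier in this post.

```python
from collections import defaultdict


def build_graph(triples):
    """Index (head, relation, tail) triples as graph[head][relation] -> set of tails."""
    graph = defaultdict(lambda: defaultdict(set))
    for head, relation, tail in triples:
        graph[head][relation].add(tail)
    return graph


triples = [
    ("co-purchase of camera case and screen protector", "capableOf", "protecting camera"),
    ("slip-resistant shoes", "used_for_audience", "pregnant women"),
]
graph = build_graph(triples)
audiences = graph["slip-resistant shoes"]["used_for_audience"]
```

Looking up a head entity and relation then returns every tail linked to it, which is the kind of query a recommendation engine would issue against the graph.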

Evaluation and application

The bi-encoder model we used in our experiments had two separate encoders, one for a customer query and one for a product. The outputs of the two encoders were concatenated and fed to a neural-network module that produced a relevance score.
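The two-tower structure can be sketched in a few lines. In this toy version, hashed bag-of-words vectors stand in for the learned encoders and a fixed linear layer with a sigmoid stands in for the scoring module. All of these choices, along with the names, dimensions, and weights, are assumptions for illustration and bear no relation to the models used in the experiments.

```python
import hashlib
import math

DIM = 8  # hypothetical embedding size


def encode(text, salt, dim=DIM):
    """Toy encoder: hash each whitespace token into one of `dim` buckets."""
    vec = [0.0] * dim
    for tok in text.lower().split():
        digest = hashlib.md5((salt + tok).encode("utf-8")).hexdigest()
        vec[int(digest, 16) % dim] += 1.0
    return vec


def relevance_score(query, product, weights, bias=0.0):
    """Encode query and product separately, concatenate, then score."""
    q_vec = encode(query, "query:")      # query tower
    p_vec = encode(product, "product:")  # product tower
    features = q_vec + p_vec             # list concatenation
    logit = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


weights = [0.1] * (2 * DIM)  # stand-in for learned parameters
score = relevance_score("slip-resistant shoes", "non-slip sneakers", weights)
```

The key property is that the query and the product never interact until after each has been encoded, which is what distinguishes a bi-encoder from a cross-encoder.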

In the cross-encoder, all the relevant features of both the query and the product description pass to the same encoder. In general, cross-encoders work better than bi-encoders, so that’s the architecture we used to test the efficacy of COSMO data.

In the first stage of experiments, with frozen encoders, the baseline models received query-product pairs; a second cross-encoder received query-product pairs, along with relevant triples from the COSMO knowledge graph, such as <co-purchase of camera case and screen protector, capableOf, protecting camera>. In this case, the COSMO-seeded model dramatically outperformed the cross-encoder baseline, which in turn outperformed the bi-encoder baseline, on both F1 measures.
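The difference between the baseline input and the COSMO-enhanced input can be sketched as plain string construction. The `[SEP]` separator, the triple formatting, and the function names are assumptions chosen for illustration; the actual input encoding is not described here.

```python
def format_triple(triple):
    """Render a (head, relation, tail) triple as text."""
    head, relation, tail = triple
    return f"<{head}, {relation}, {tail}>"


def build_cross_encoder_input(query, product_title, triples=(), sep=" [SEP] "):
    """Join query, product, and any knowledge-graph triples into one sequence."""
    parts = [query, product_title]
    parts.extend(format_triple(t) for t in triples)
    return sep.join(parts)


triple = ("co-purchase of camera case and screen protector", "capableOf", "protecting camera")
baseline_input = build_cross_encoder_input("camera protection", "camera case")
cosmo_input = build_cross_encoder_input("camera protection", "camera case", [triple])
```

Because a cross-encoder reads the whole sequence at once, appending the triple lets the model attend jointly to the query, the product, and the commonsense relationship.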

In the second stage of experiments, we fine-tuned the baseline models on a subset of the Shopping Queries Data Set and fine-tuned the second cross-encoder on the same subset and the COSMO data. The performance of all three models jumped dramatically, but the COSMO model maintained an edge of more than 20% on both F1 measures.