At this year’s Conference on Knowledge Discovery and Data Mining (KDD), Amazon hosted a workshop in which we announced the results of our ESCI Challenge for Improving Product Search, which we launched under the auspices of the KDD Cup, an annual group of competitions at KDD.

The goal of the challenge was twofold: to improve the ranking of the products retrieved by a product query (that is, their relevance to customers) and to suggest appealing alternatives (i.e., substitutable products).

The competition took place between March 15, 2022, and July 20, 2022, and drew more than 1,600 participants from 65 countries, who submitted more than 9,200 solutions. Throughout the course of the challenge, participants submitted more than 2.5 terabytes of code and models, an unprecedented volume of submissions.

The challenge had a cash prize pool of $21,000, with an additional $10,500 in AWS credits for the top-performing teams. As part of the challenge, we introduced the Shopping Queries Dataset, a large dataset of difficult search queries, published with the aim of fostering research in the area of query-product semantic matching.

The challenge

Despite recent advances in machine learning, correctly classifying the results of product queries remains challenging. Noisy information in the results, the difficulty of understanding the query intent, and the diversity of the items available all contribute to the complexity of this problem.

The primary objectives of participants in our competition were to build new ranking strategies and identify interesting categories of results (e.g., substitute products) that can be used to improve the customer experience. Past research has relied on the notion of binary relevance (whether an item is relevant or not to a given query), which limits the customer experience.

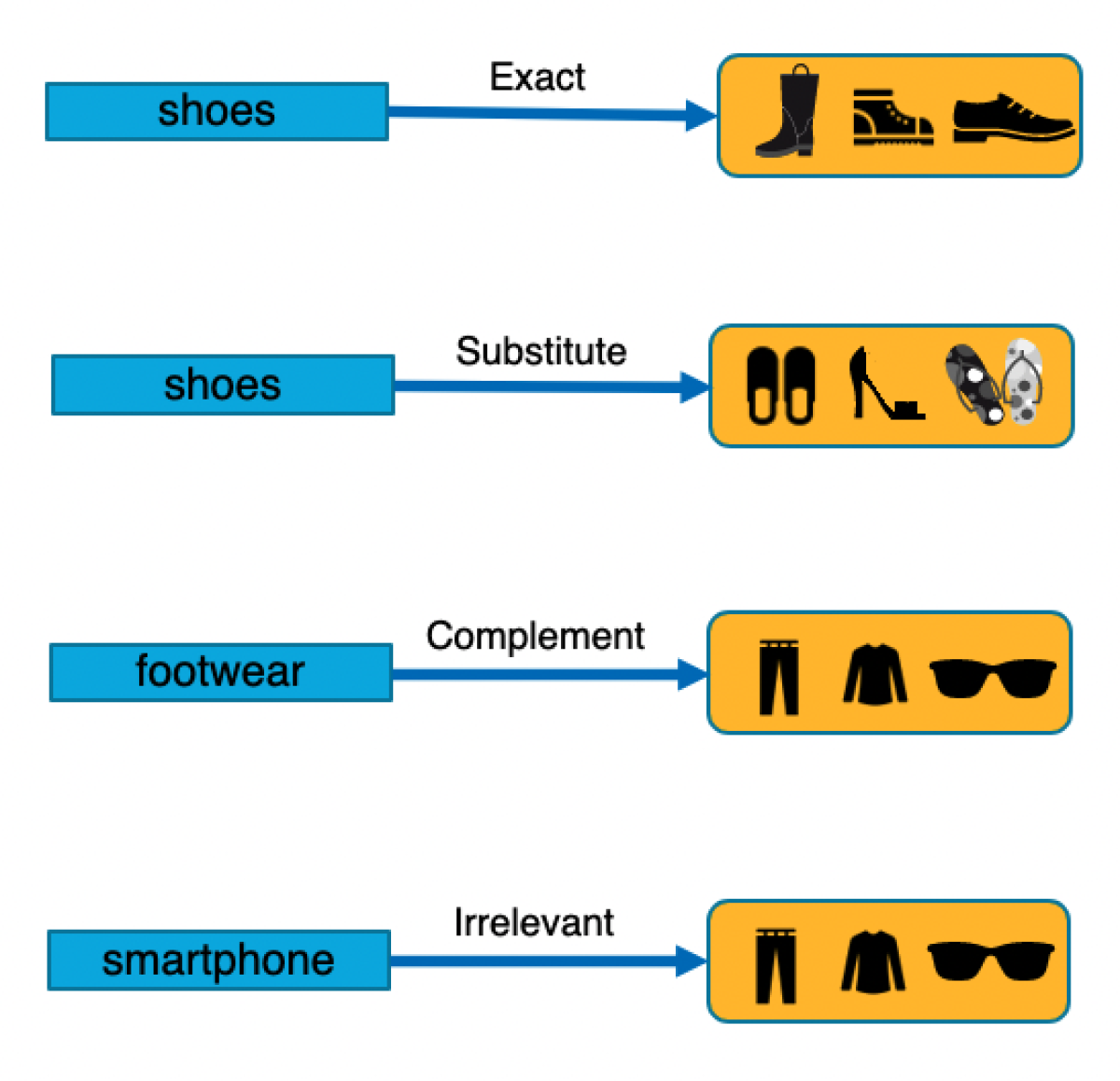

So in our challenge, we broke relevance down into four classes: exact (E), substitute (S), complement (C), and irrelevant (I). Hence the name “ESCI Challenge”.

The three tasks for this KDD Cup competition, using the Shopping Queries Dataset, were:

- Task 1: Query-product ranking: Given a user-specified query and a list of matched products, rank the relevant products above the non-relevant ones (as measured by normalized discounted cumulative gain (nDCG));

- Task 2: Multi-class product classification: Given a query and a list of matched products, classify each product as being an exact match, a substitute, a complement, or irrelevant to the query (as measured by accuracy); and

- Task 3: Product substitute identification: Measure the ability of the systems to identify substitute products in the list of results for a given query (as measured by accuracy).
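As a rough illustration of how the metrics named in the three tasks can be computed, here is a minimal sketch in Python. The gain assigned to each ESCI label in `GAINS` and the function names are assumptions made for this example; they are not taken from the challenge rules or from the official evaluation code.

```python
import math

# Hypothetical gain per ESCI label (an assumption for illustration only).
GAINS = {"E": 1.0, "S": 0.1, "C": 0.01, "I": 0.0}


def dcg(labels):
    """Discounted cumulative gain of labels listed in ranked order."""
    return sum(GAINS[label] / math.log2(rank + 2)
               for rank, label in enumerate(labels))


def ndcg(ranked_labels):
    """Task 1: DCG of the submitted ranking divided by the ideal DCG."""
    ideal = dcg(sorted(ranked_labels, key=GAINS.get, reverse=True))
    return dcg(ranked_labels) / ideal if ideal > 0 else 0.0


def accuracy(predicted, gold):
    """Task 2: fraction of products whose predicted label matches the gold label."""
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


def substitute_accuracy(predicted, gold):
    """Task 3: accuracy after collapsing labels to substitute vs. not substitute."""
    return accuracy([p == "S" for p in predicted], [g == "S" for g in gold])


if __name__ == "__main__":
    print(ndcg(["E", "S", "C", "I"]))   # already in ideal order, so 1.0
    print(ndcg(["I", "E"]))             # exact match ranked second, below 1.0
    print(accuracy(["E", "S", "I"], ["E", "S", "C"]))
```

With these assumed gains, a ranking that places an irrelevant product above an exact match is penalized by the logarithmic position discount, which is the behavior Task 1 rewards participants for avoiding.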

We have publicly released the Shopping Queries Dataset in the hope that it will become the ImageNet of product search, since it captures the complexity of real-world customer queries. In an arXiv paper, we present more details of the data collection and cleaning process, along with the basic statistics.

Uniqueness of the dataset

Some important characteristics of this dataset:

- It is derived from real customers searching for real products online. Products are linked to an online catalogue.

- For each query, the dataset provides a list of up to 40 potentially relevant results, together with ESCI relevance judgements.

- The dataset is multilingual, as it contains queries in English, Japanese, and Spanish. It provides both breadth (a large number of queries) and depth (≈20 results per query), unlike other publicly available datasets.

- All results have been manually labeled with multi-valued relevance labels in the context of e-shopping.

- Queries are not randomly sampled; rather, subsets of the queries have been sampled specifically to provide a variety of challenging problems (such as negation and attribute parsing).

- Each query-product pair is accompanied by some additional public catalogue information (including title, product description, and additional product-related bullet points).

Challenge results and workshop

The workshop featured presentations by the winning participants in the KDD Cup competition. When we released the dataset, we also released a strong baseline model, against which we benchmarked the contestants’ entries.

The winning team for task 1, from the Interactive Entertainment Group of Netease, in Guangzhou, China, improved over the baseline by 6.35%, as measured by nDCG. (The team’s nDCG score was 0.9043, which is a significant improvement over the baseline of 0.8503.)

The winning team for tasks 2 and 3, from the Ant Group in Hangzhou, Zhejiang, China, improved over the baseline by 12.36% (0.8326 versus 0.7410) and 5.66% (0.8790 versus 0.8319), respectively.
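The percentages quoted for the winning teams are relative improvements, that is, the gain over the baseline score divided by the baseline score. The short sketch below reproduces them from the scores given above; the function name `relative_improvement` is our own choice for this example.

```python
def relative_improvement(score, baseline):
    """Percentage gain of `score` over `baseline`, relative to the baseline."""
    return (score - baseline) / baseline * 100


# (winning score, baseline score) pairs as reported in the text.
results = {
    "task 1": (0.9043, 0.8503),
    "task 2": (0.8326, 0.7410),
    "task 3": (0.8790, 0.8319),
}

for task, (score, baseline) in results.items():
    print(f"{task}: {relative_improvement(score, baseline):.2f}%")
# task 1: 6.35%
# task 2: 12.36%
# task 3: 5.66%
```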