At Super Bowl LV, Tom Brady won his seventh title in his first year as quarterback for the Tampa Bay Buccaneers, whose defense held the high-octane offense of the defending champion Kansas City Chiefs to only nine points.

At key points, the broadcast was augmented by real-time evaluations using the NFL’s Next Gen Stats (NGS) powered by AWS. Several of those stats, such as pass completion probability or expected yards after catch, use machine learning models to analyze the data streaming in from radio frequency ID tags on players’ shoulder pads and on the ball.

Since 2017, Amazon Web Services (AWS) has been the NFL’s official technology provider in every phase of the development and deployment of Next Gen Stats. AWS stores the huge amount of data generated by tracking every player on every play in every NFL game — nearly 300 million data points per season; NFL software engineers use Amazon SageMaker to quickly build, train, and deploy the machine learning (ML) models behind their most sophisticated stats; and the NFL uses the business intelligence tool Amazon QuickSight to analyze and visualize the resulting statistical data.

“We wouldn’t have been able to make the strides we have as quickly as we have without AWS,” says Michael Schaefer, the director of product and analytics for the NFL’s Next Gen Stats. “SageMaker makes the development of ML models easy and intuitive — particularly for those who may not have deep familiarity with ML.”

“And where we’ve needed additional ML expertise,” Schaefer adds, “AWS’s data scientists have been an invaluable resource.”

Secondary variance

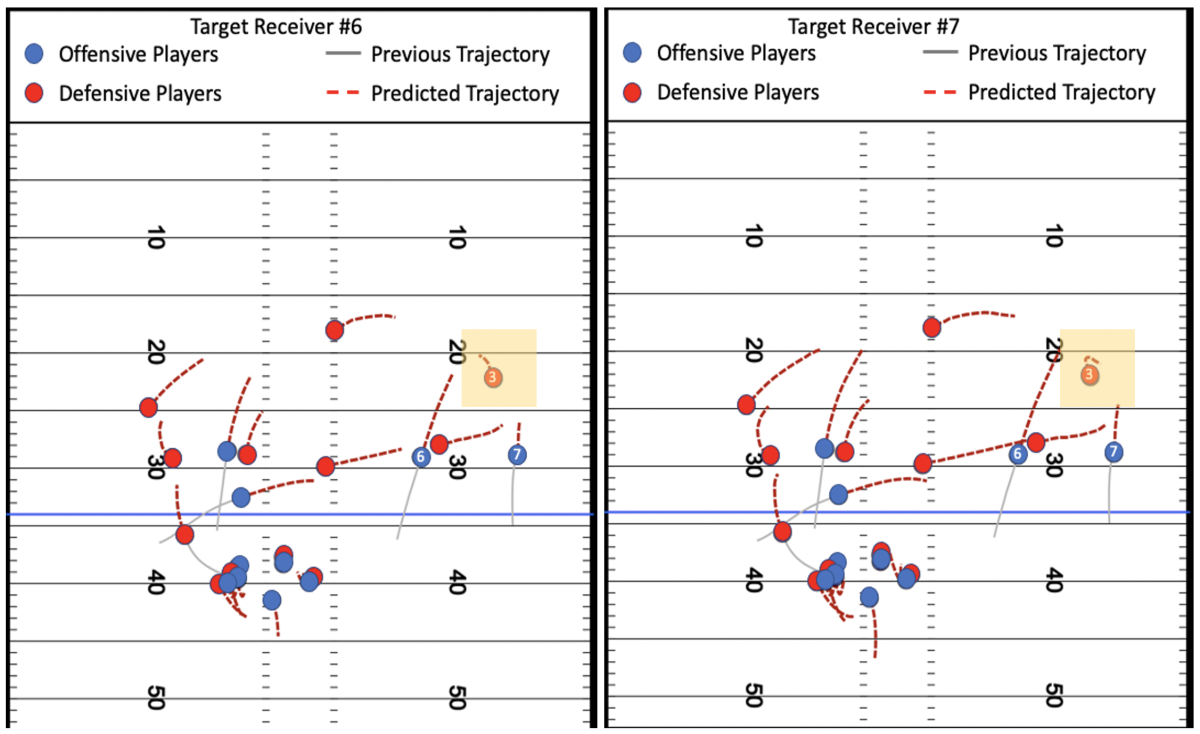

Take, for instance, the problem of defender ghosting, or predicting the trajectories of defensive backs after the ball leaves the quarterback’s hand.

Defender ghosting is not itself a Next Gen Stat, but it’s an essential component of stats under development. For instance, defender ghosting can help estimate how a play would have evolved if the quarterback had targeted a different receiver: would the defensive backs have reached the receiver in time to stop a big gain? Defender ghosting can thus help evaluate a quarterback’s decision making.

Using SageMaker, the NFL’s Next Gen Stats team has constructed some sophisticated machine learning models: the completion probability model, for instance, factors in 10 on-field measurements — including the distance of the pass, distance between the quarterback and the nearest pass rushers, and distance between the receiver and the nearest defenders — and outputs the (league-average) likelihood of completing a pass under those conditions.

But predicting the trajectories of defensive backs — the cornerbacks and safeties who defend against downfield plays — is a particularly tough challenge. Defensive backs tend to cover more territory than other defensive players, and they also tend to make more radical adjustments in coverage as a play develops.

So to build a defender ghosting model, the NFL engineers joined forces with AWS senior scientist Lin Lee Cheong and her team at the Amazon Machine Learning Solutions Lab.

The first thing the AWS-NFL team did was to filter anomalies out of the training data. In 99.9% of cases, the NFL player-tracking system is accurate to within six inches, but like all radio-based technology, it’s susceptible to noise that can compromise accuracy.

“We're scientists. We’re not football experts,” Cheong says. “So we worked closely with the folks from NFL to understand the gameplay. Basic anomaly detection, as well as cleaning of the data, helped tremendously.”

The research team excised player-tracking data that violated a few cardinal rules. For instance, players’ trajectories should never take them off the field, and their speed should never exceed 12.5 yards per second (NFL players’ measured speeds top out at around 11 yards per second).
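
The two rules named above can be sketched as a simple plausibility filter. This is a minimal illustration, not the team's actual pipeline: the `Sample` schema, the field coordinates (120 by 53 1/3 yards, end zones included), and the choice to drop a whole trajectory when any reading fails are all assumptions made for the example. Only the 12.5 yards-per-second threshold comes from the article.

```python
from dataclasses import dataclass

# Assumed field geometry in yards: 120 long (end zones included), 53 1/3 wide.
FIELD_LENGTH_YD = 120.0
FIELD_WIDTH_YD = 160.0 / 3.0
MAX_SPEED_YD_PER_S = 12.5  # threshold quoted in the article


@dataclass
class Sample:
    """One tracking reading for one player (hypothetical schema)."""
    x: float      # yards along the field
    y: float      # yards across the field
    speed: float  # yards per second


def is_plausible(s: Sample) -> bool:
    """True if the reading is on the field and within the speed cap."""
    on_field = 0.0 <= s.x <= FIELD_LENGTH_YD and 0.0 <= s.y <= FIELD_WIDTH_YD
    return on_field and 0.0 <= s.speed <= MAX_SPEED_YD_PER_S


def filter_trajectory(samples):
    """Return the trajectory unchanged if every reading is plausible, else None."""
    return samples if all(is_plausible(s) for s in samples) else None
```

Dropping the whole trajectory rather than a single reading keeps the remaining sequences evenly sampled, which matters for a model that consumes consecutive positions; a production system might instead interpolate over short gaps.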

Next, the team winnowed down the “feature set” for the model. Features are the different types of input data on which a machine learning model bases its predictions. For every player on the field, the NFL tracking system provides location, direction of movement, and speed, which are all essential for predicting defensive backs’ trajectories. But any number of other features — down and distance, distance to the goal line, elapsed game time, length of the current drive, temperature — could, in principle, affect player performance.

The more input features a machine learning model has, however, the more difficult it is to tease out each feature’s correlation with the phenomenon the model is trying to predict. Absent a huge amount of training data, it’s usually preferable to keep the feature set small.

To predict trajectories, the AWS researchers planned to use a deep-learning model. But first they trained a simpler model, called a gradient boosting model, on all the available features.

Gradient boosting models tend to be less accurate than neural networks, but they make it easy to see which input features make the largest contributions to the model output. The AWS-NFL team chose the features most important to the gradient boosting model, and just those features, as inputs to the deep-learning model.
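
To make the selection step concrete, here is a toy sketch of gradient boosting with one-split trees (stumps), where a feature's importance is the total squared-error reduction of the splits made on it. The article does not say which library or importance measure the team used, so everything here is an assumption for illustration: the function names, the synthetic data, and the three feature names. In practice one would likely read importances from an established library rather than hand-roll the model.

```python
import random


def best_stump(X, residuals):
    """Return (gain, feature, threshold, left_value, right_value) for the best split."""
    n = len(X)
    total = sum(residuals)
    best = None
    for j in range(len(X[0])):
        order = sorted(range(n), key=lambda i: X[i][j])
        left_sum = 0.0
        for k in range(1, n):
            left_sum += residuals[order[k - 1]]
            lo, hi = X[order[k - 1]][j], X[order[k]][j]
            if lo == hi:
                continue  # cannot split between identical values
            right_sum = total - left_sum
            # Drop in squared error versus predicting one mean for everything.
            gain = left_sum ** 2 / k + right_sum ** 2 / (n - k) - total ** 2 / n
            if best is None or gain > best[0]:
                best = (gain, j, (lo + hi) / 2, left_sum / k, right_sum / (n - k))
    return best


def boosted_importances(X, y, rounds=50, learning_rate=0.1):
    """Fit stumps to successive residuals; return normalized per-feature gain."""
    n = len(X)
    pred = [sum(y) / n] * n
    importance = [0.0] * len(X[0])
    for _ in range(rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = best_stump(X, residuals)
        if stump is None:
            break
        gain, j, thr, left, right = stump
        importance[j] += gain
        for i in range(n):
            pred[i] += learning_rate * (left if X[i][j] <= thr else right)
    total = sum(importance) or 1.0
    return [v / total for v in importance]


def top_features(importances, names, k):
    """Names of the k features with the largest importance."""
    ranked = sorted(zip(importances, names), reverse=True)
    return [name for _, name in ranked[:k]]


# Synthetic data (hypothetical): the target depends on speed and direction only.
rng = random.Random(0)
names = ["speed", "direction", "temperature"]
X = [[rng.uniform(0, 10), rng.uniform(-1, 1), rng.uniform(30, 90)] for _ in range(400)]
y = [0.4 * s + 1.5 * d + rng.gauss(0, 0.1) for s, d, _ in X]

importances = boosted_importances(X, y)
selected = top_features(importances, names, k=2)
print(dict(zip(names, importances)), selected)
```

The features in `selected` would then be the only inputs passed on to the larger model, which mirrors the two-stage approach the article describes.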

That model proved quite accurate at predicting defensive backs’ trajectories. But the researchers’ job wasn’t done yet.

Quantifying the hypothetical

It was straightforward to calculate the model’s accuracy on plays that had actually taken place on NFL football fields: the researchers simply fed the model a sequence of three player position measurements and determined how well it predicted the next ten.
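
The evaluation protocol described above (observe three positions, predict the next ten, compare with what happened) can be sketched as follows. The article does not name the accuracy measure, so the average displacement error used here is an assumption, and `constant_velocity_forecast` is only a stand-in predictor so the example runs; it is not the team's deep-learning model.

```python
import math


def constant_velocity_forecast(history, horizon=10):
    """Stand-in predictor: repeat the last observed step. NOT the article's model."""
    (x0, y0), (x1, y1) = history[-2], history[-1]
    dx, dy = x1 - x0, y1 - y0
    return [(x1 + dx * k, y1 + dy * k) for k in range(1, horizon + 1)]


def average_displacement_error(predicted, actual):
    """Mean Euclidean distance, in yards, between matching points."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum(math.dist(p, a) for p, a in zip(predicted, actual)) / len(actual)


def evaluate(trajectory, predictor, n_observed=3, horizon=10):
    """Feed the first n_observed points to the predictor and score the next horizon."""
    history = trajectory[:n_observed]
    future = trajectory[n_observed:n_observed + horizon]
    return average_displacement_error(predictor(history, horizon), future)
```

Any predictor with the same `(history, horizon)` signature can be passed to `evaluate`, so a learned model and a simple baseline can be compared on identical plays.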

But one of the purposes of defender ghosting is to predict the outcomes of plays that didn’t happen, in order to assess players’ decision making. Absent the ground truth about the plays’ outcome, how do you gauge the model’s performance?

The researchers’ first recourse was to ask Schaefer to evaluate the predicted trajectories for hypothetical plays.

“He spent a week reviewing every trajectory our model predicted and pointed out all the ones that he thought were questionable, versus the ones that he thought were good,” Cheong says. “He also explained the thought process behind his evaluations, which was nuanced and complex. I thought, ‘Asking a director to spend a whole week reviewing our work after each model iteration is not scalable.’ I wanted to quantify his knowledge. So we created this composite metric that incorporates the know-how that a subject matter expert would use to evaluate trajectories.”

“By combining the NFL’s expertise in football with AWS’s ML experts, we’ve been able to develop and refine statistics for things never before quantified,” Schaefer says.

The core of Cheong and her colleagues’ composite metric is a measure of how quickly a defensive back’s trajectory diminishes his distance from the targeted receiver. Other factors include the distance the defender covers relative to the maximum distance he could have covered at top NFL speeds and whether the defender moves at superhuman speeds, which incurs a penalty in the scoring.
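
The article names the ingredients of the metric but not its formula, so the following is only a hypothetical sketch of how such a score could be assembled. The weights, the 0.1-second sampling interval, the sign given to the distance-covered term, and the function name are all assumptions. The score is negative when the defender closes on the receiver, matching the sign convention of the scores the article reports.

```python
import math

TOP_SPEED_YD_PER_S = 11.0  # approximate top measured speed cited in the article
FRAME_SECONDS = 0.1        # assumed sampling interval (hypothetical)


def composite_score(defender_path, receiver_path,
                    effort_weight=0.05, speed_penalty=1.0):
    """Hypothetical composite: closing rate, effort bonus, superhuman-speed penalty."""
    if len(defender_path) != len(receiver_path) or len(defender_path) < 2:
        raise ValueError("paths must have equal length of at least 2")
    steps = len(defender_path) - 1
    duration = steps * FRAME_SECONDS

    # Core term: change in defender-receiver gap per second (negative = closing).
    start_gap = math.dist(defender_path[0], receiver_path[0])
    end_gap = math.dist(defender_path[-1], receiver_path[-1])
    closing_rate = (end_gap - start_gap) / duration

    # Distance covered as a fraction of what top speed would allow.
    step_lengths = [math.dist(a, b)
                    for a, b in zip(defender_path, defender_path[1:])]
    effort = sum(step_lengths) / (TOP_SPEED_YD_PER_S * duration)

    # Fraction of steps that would require faster-than-human movement.
    max_step = TOP_SPEED_YD_PER_S * FRAME_SECONDS
    superhuman = sum(1 for d in step_lengths if d > max_step) / steps

    return closing_rate - effort_weight * effort + speed_penalty * superhuman
```

With these assumed weights the closing rate dominates, and a path that reaches the same spot through one implausible jump scores worse than a smooth path covering the same ground.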

When the AWS researchers apply their metric to actual NFL trajectories, they get an average score of -0.1036; a negative score indicates that the defender is closing the distance between himself and the receiver. When they apply the metric to the trajectories their model predicts, they get an average score of -0.0825, which is not quite as good but in the same ballpark.

When, however, they distort the input data so that the starting orientation and velocity of 25% of defenders are random — that is, 25% of players are totally out of the play to begin with — the score goes up to a positive 0.0425. That’s a further indication that their metric captures information about the quality of the defensive backs’ play.
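
The sanity check described above amounts to corrupting a fixed share of the inputs and confirming that the score gets worse. A minimal sketch of the corruption step follows; the `DefenderState` schema, the use of degrees for orientation, and the uniform sampling ranges are assumptions, since the article says only that orientation and velocity were randomized for 25% of defenders.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DefenderState:
    """Starting state of one defender (hypothetical schema)."""
    x: float
    y: float
    direction_deg: float
    speed: float  # yards per second


def perturb_defenders(states, fraction=0.25, max_speed=11.0, rng=None):
    """Randomize direction and speed for a random subset; positions are kept."""
    if rng is None:
        rng = random.Random()
    n_perturbed = round(len(states) * fraction)
    chosen = set(rng.sample(range(len(states)), n_perturbed))
    return [
        replace(s,
                direction_deg=rng.uniform(0.0, 360.0),
                speed=rng.uniform(0.0, max_speed))
        if i in chosen else s
        for i, s in enumerate(states)
    ]
```

Scoring the model's predictions on the perturbed inputs and on the untouched inputs then gives the kind of comparison the article reports.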

NFL offenses are incredibly complex, with many moving parts, and getting a statistical handle on them is much more difficult than, say, characterizing the one-on-one confrontations between a pitcher and hitter in baseball. All over the Internet, for instance, debate is raging about whether Tom Brady’s success in Tampa Bay proves that his former coach, Bill Belichick, gets too much credit for the New England Patriots’ nine Super Bowl trips in 17 years.

These types of arguments will probably go on forever; they’re part of the fun of sports fandom. But at the very least, Next Gen Stats powered by AWS should help make them more coherent.

Editor's note: The opening paragraphs of this article were revised to reflect the outcome of Super Bowl LV.