Machine learning – a field of computer science that gives a computer the ability to learn – is changing the world. It’s being used to improve weather forecasting, deliver better healthcare, create self-driving cars, and much more. Amazon is a pioneer in the field, and uses machine learning to make product recommendations, detect fraud, forecast demand, power Alexa, run the Amazon Go Store, and more. And, of course, with Amazon SageMaker the company provides developers and data scientists with the ability to build, train, and deploy machine learning (ML) models quickly and at scale.

Demand for scientists, data scientists, and developers proficient in machine learning is exploding and far outstrips supply.

To help close that gap, over the past two years a team of Amazon scientists has compiled a book that is gaining wide popularity with universities that teach machine learning, as well as developers who want to up their machine learning game. The book is called Dive into Deep Learning, and it’s an open source, interactive book that teaches the ideas, the mathematical theory, and the code that powers deep learning, all through a unified medium.

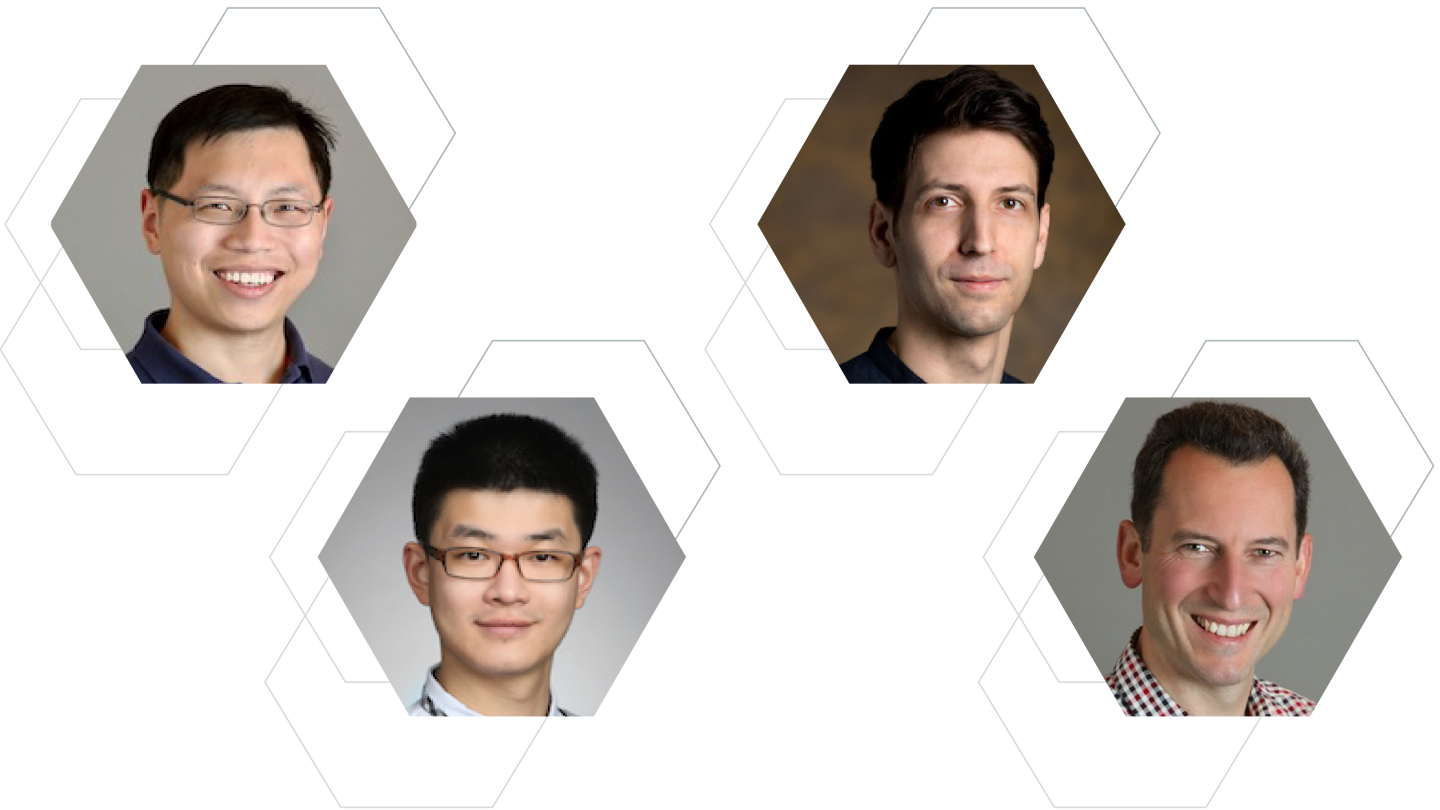

Its authors are Aston Zhang, an AWS senior applied scientist; Zachary Lipton, an AWS scientist and assistant professor of Operations Research and Machine Learning at Carnegie Mellon University; Mu Li, AWS principal scientist; and Alex Smola, AWS vice president and distinguished scientist.

“Dive into Deep Learning is a book I wish existed when I got started with machine learning,” says Smola. “It’s easy to become engrossed in the general theory of machine learning without the ability to build things. Dive into Deep Learning makes it easy for everyone to experiment and learn. Moreover, this publishing approach forces us, the book’s authors, to focus on effects that are significant in practice. After all, anything that is taught needs to be demonstrated with code and data.”

The book got its start in 2017, when the authors set about teaching the wider ML community how to use the then-new Gluon interface, an open source deep-learning interface that allowed developers to build machine learning models more easily and quickly.

At the time, there were a number of classic textbooks that taught the mathematics of machine learning and scattered open source implementations of popular deep learning models, but existing resources didn’t combine the qualities of a good textbook with the best parts of a hands-on tutorial. That’s especially problematic for deep learning, which is largely an empirical discipline. In other words, really understanding how it works requires running experiments. So during an internship at Amazon, Lipton created an open-source project, a casual set of tutorials called Deep Learning: the Straight Dope (now deprecated).

While the project was initially created as source material for a set of hands-on tutorials, it rapidly gained wider traction and began to take the form of a book as an open-source community of contributors joined to refine and expand the offering. As Lipton embarked on a faculty position at CMU, Zhang and Li expanded the coverage of some of its foundational topics, and added many more topics to keep pace with the latest innovations in machine learning. They then created a series of video lectures on deep learning in Chinese, which proved popular with students in China.

“We got a lot of feedback from students who said our lectures were helping them ‘get their hands dirty’,” says Zhang, the book’s lead author. “They asked us to turn our lecture notes into something more like a textbook.”

The goal was to make machine learning more accessible to everyone, says Li. “We wanted to teach concepts ‘just in time,’ giving people concepts at the time they need them to accomplish a particular task,” he says. “We wanted people to have the satisfaction of creating their first model before worrying about more esoteric concepts.”

From the start, one key aspiration of the authors was to make the book enjoyable to read – not an endless trudge. Its writing is conversational and approachable, even for relative novices.

Still, creating a book that combined accessibility, breadth, and hands-on learning wasn’t easy. To provide convenient access, Dive into Deep Learning is published on GitHub, which also allows GitHub users to suggest changes and new content. The book was created with Jupyter Notebooks, which allow interactive computing with many programming languages.

“One cool thing about Jupyter Notebooks,” says Lipton, “is not only can you write regular text (with Markdown) and code (here, Python), but you can also include clean mathematical typesetting – using the LaTeX plug-in, which allows you to write mathematical expressions cleanly.”

The book also employs the NumPy interface – a Python-based programming library familiar to most students.

Dive into Deep Learning was originally published in Chinese. Subsequently, the authors translated it into English, while also adding many new topics by incorporating feedback from users.

Perhaps the most interesting aspect of the book is its emphasis on learning by doing. Says Lipton: “I always think of computer science and engineering as autodidactic disciplines, and certainly one of the ideas behind the book is to let people try things out quickly. The book lends itself to self-study – you’re not likely to get stuck, even if you are going it alone.”

In a typical chapter, Computer Vision, for example, the authors begin with a discussion of topics such as altering images to enhance a computer’s ability to identify something (in the book’s example, a cat) even if the image is changed through cropping, color, or brightness. At the end, readers are asked to use a data set to help a computer identify 120 different dog breeds. They are walked through how to download the appropriate data set, organize it, and train the model to identify the breeds.
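To make the idea of altering images concrete, here is a minimal sketch of two such alterations, random cropping and brightness scaling, written with plain NumPy. It is not taken from the book; the function names (`random_crop`, `adjust_brightness`, `augment`), the crop size, and the brightness range are all hypothetical choices made for illustration, and a random array stands in for a real photo.

```python
import numpy as np


def random_crop(image, crop_h, crop_w, rng):
    """Return a randomly positioned crop_h x crop_w window of an H x W x C image."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    return image[top:top + crop_h, left:left + crop_w]


def adjust_brightness(image, factor):
    """Scale pixel values by `factor`, clipping to the valid [0, 255] range."""
    scaled = image.astype(np.float32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


def augment(image, rng, crop_h=24, crop_w=24, max_delta=0.5):
    """Apply a random crop followed by a random brightness change.

    The default sizes and range are illustrative assumptions, not values
    from the book.
    """
    cropped = random_crop(image, crop_h, crop_w, rng)
    factor = rng.uniform(1 - max_delta, 1 + max_delta)
    return adjust_brightness(cropped, factor)


# A random 32 x 32 RGB array stands in for a real image.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
augmented = augment(image, rng)
print(augmented.shape, augmented.dtype)
```

Feeding a model many such randomly altered copies of each training image is one common way to encourage it to recognize the same object despite changes in framing or lighting.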

For the most part, the book’s chapters were written by different members of the team, depending on their own interests and expertise. All the authors then reviewed and edited each chapter.

Thus far the book has proven extremely popular and helped cement Amazon’s status as a center for machine learning excellence. Some 70 universities use the book in machine learning classes, a number that’s growing.

“This is a timely, fascinating book, providing not only a comprehensive overview of deep learning principles but also detailed algorithms with hands-on programming code, and moreover, a state-of-the-art introduction to deep learning in computer vision and natural language processing,” said Jiawei Han, Michael Aiken Chair Professor, University of Illinois at Urbana-Champaign. “Dive into this book if you want to dive into deep learning.”

Adds Jensen Huang, founder and CEO of NVIDIA, “Dive into Deep Learning is an excellent text on deep learning and deserves attention from anyone who wants to learn why deep learning has ignited the AI revolution: the most powerful technology force of our time.”

Right now, the authors’ focus is to keep updating and improving the book based on input from its many users. “It’s a two-way collaboration,” says Zhang. “We help its readers with machine-learning know-how, and they provide feedback to us to improve its quality and stay relevant.”

Video: Dive into Deep Learning lecture series

While working on the book, Aston Zhang and Mu Li edited some of its foundational topics, added more topics, and created a series of video lectures on deep learning in Chinese, which proved popular with students in China. There are 20 videos in total, which you can watch from the playlist below.