In recent years, most commercial automatic-speech-recognition (ASR) systems have begun moving from hybrid systems — with separate acoustic models, dictionaries, and language models — to end-to-end neural-network models, which take an acoustic signal as input and output text.

End-to-end models have advantages in performance and flexibility, but they require more training data than hybrid systems. That can be a problem in situations where little training data is available — for example, when current events introduce new terminology (“coronavirus”) or when models are being adapted to new applications.

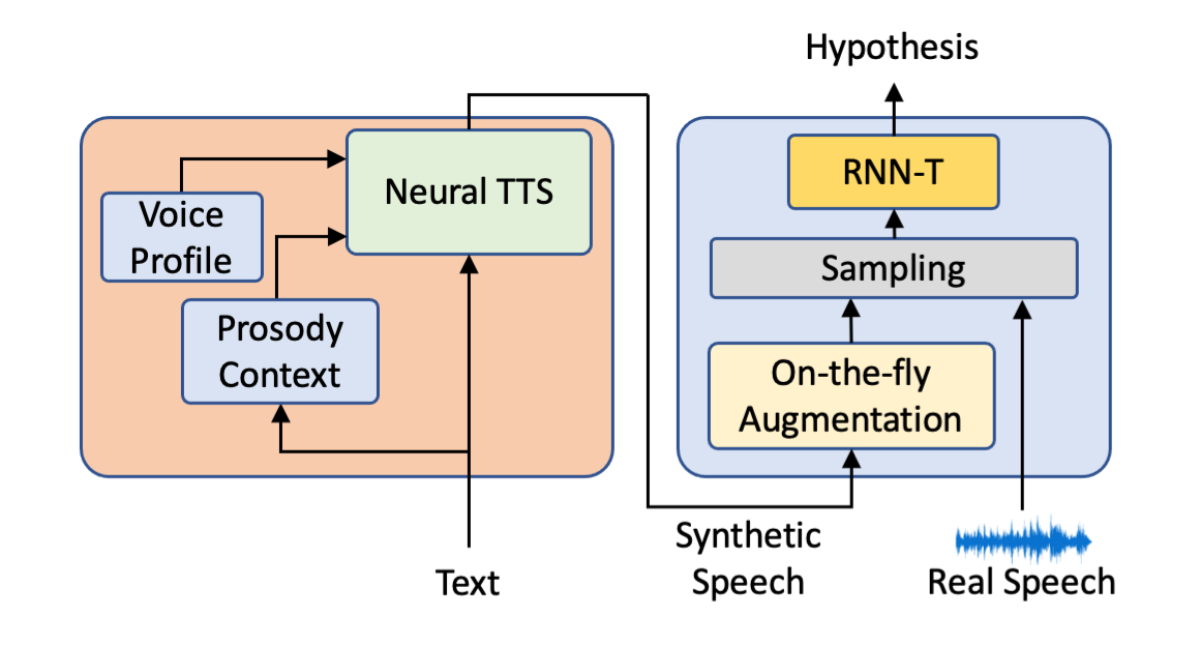

In such situations, using synthetic speech as supplemental training data can be a viable solution. In a paper we presented at this year’s Interspeech, we adopt this approach, using synthetic voice data — like the output speech generated by Alexa’s text-to-speech models — to update an ASR model.

In our experiments, we fine-tuned an existing ASR model to recognize the names of medications it hadn’t heard before and found that our approach reduced the model’s word error rate on the new vocabulary by 65%, relative to the original model.

It also left the model’s performance on the existing vocabulary unchanged, thanks to a continual-learning training procedure we used to avoid “catastrophic forgetting.” We describe that procedure in the paper, along with the steps we took to make the synthetic speech data look as much like real speech data as possible.

Synthetic speech

One key to building a robust ASR model is to train it on a range of different voices, so it can learn a variety of acoustic-frequency profiles and different ways of voicing phonemes, the shortest units of speech. We synthesized each utterance in our dataset 32 times, by randomly sampling 32 voice profiles from 500 we’d collected from volunteers in the lab.
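As a rough illustration of that sampling step, the sketch below assigns 32 voice profiles out of a pool of 500 to each utterance. The function name, the use of integer indices to stand in for voice profiles, and the choice to sample without replacement within an utterance are all assumptions made for the example, not details from the paper.

```python
import random

NUM_PROFILES = 500           # size of the voice-profile pool (from the text)
VERSIONS_PER_UTTERANCE = 32  # syntheses per utterance (from the text)


def assign_voice_profiles(utterances, num_profiles=NUM_PROFILES,
                          k=VERSIONS_PER_UTTERANCE, seed=0):
    """Map each utterance to k distinct, randomly chosen profile indices.

    Sampling without replacement within an utterance is an assumption;
    the text only says the profiles are sampled randomly.
    """
    rng = random.Random(seed)
    return {text: rng.sample(range(num_profiles), k) for text in utterances}


# Hypothetical utterances, used only to exercise the function.
plan = assign_voice_profiles(["refill my metformin", "order more ibuprofen"])
print(len(plan["refill my metformin"]))  # 32
```

Each index would then select the voice profile embedding that is fed to the TTS model for that version of the utterance.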

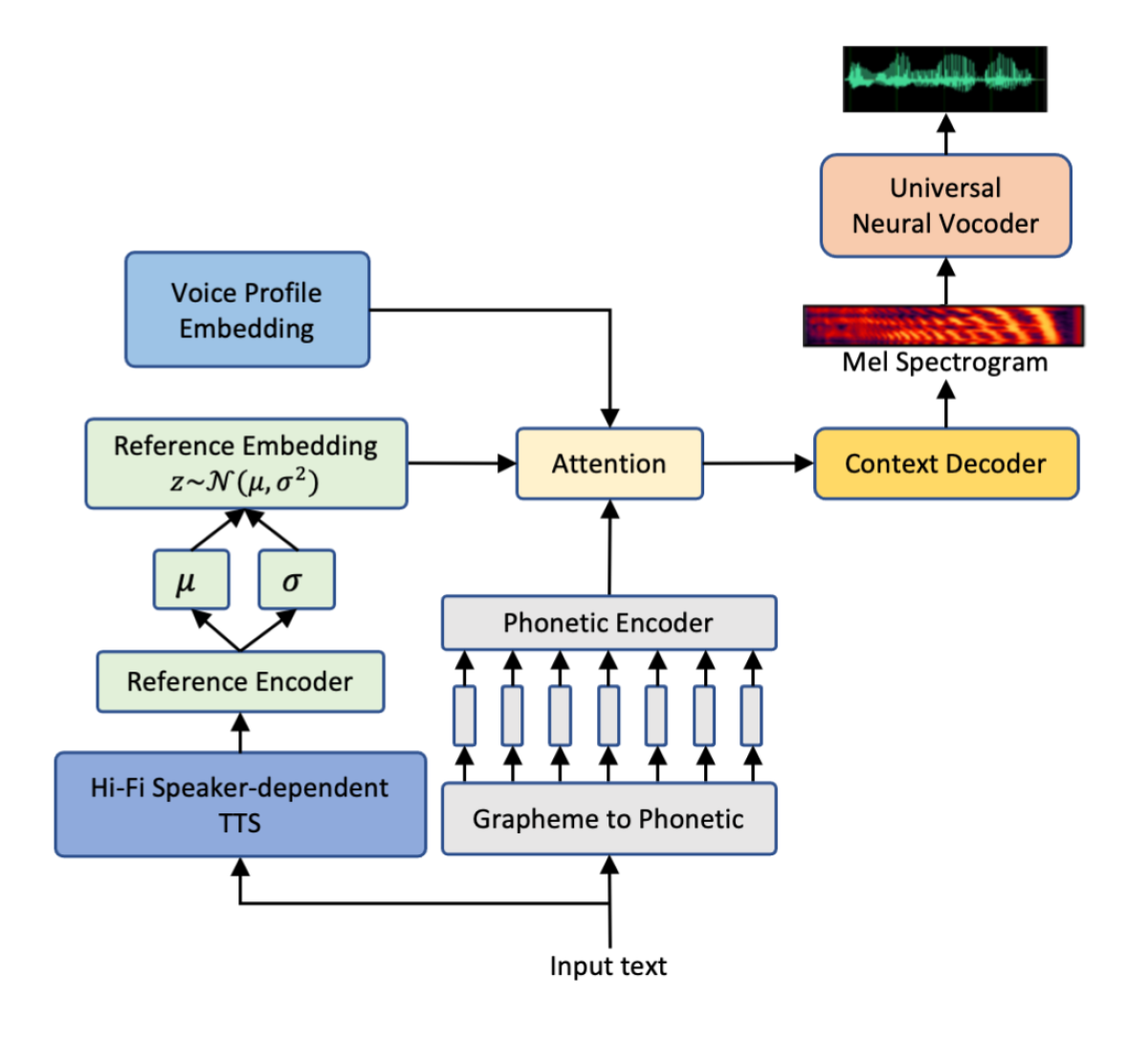

Like most text-to-speech (TTS) models, ours has an encoder-decoder architecture: the encoder produces a vector representation of the input text, which the decoder translates into an output spectrogram, a series of snapshots of the frequency profile of the synthesized speech. The spectrogram passes to a neural vocoder, which adds the phase information necessary to convert it into a real speech signal.

For each speaker, we used a speaker identification system to produce a unique voice profile embedding — a vector representation of that speaker’s acoustic signature. This embedding is a late input to the TTS model, right before the decoding step.

We also train the TTS model to take a reference spectrogram as input, giving it a model of output prosody (the rhythm, emphasis, melody, duration, and loudness of the output speech). The architecture of the model allows us to vary both the voice profile embedding and the prosody embedding for the same input text, so we can produce multiple versions of the same utterance with different voices and prosodies.

Next, to make our synthesized speech look more like real speech, we manipulate it in a variety of ways: we apply different types of reverberation to it, based on chirp sound samples collected in the lab; we add noise; we attenuate certain frequency bands; and we mask parts of the signal to simulate interruptions. We apply these manipulations randomly according to certain probabilities (a 60% chance of added background noise, for instance) to ensure a good mix of different types of samples.
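The idea of applying each manipulation independently, with its own probability, can be sketched as follows. This is a minimal stand-in, not the pipeline from the paper: the waveform is a plain list of floats, only two simplified transforms are shown (reverberation and band attenuation are omitted), and every name and parameter is hypothetical. The 60% probability for added noise is the only value taken from the text; the masking probability is a placeholder.

```python
import random


def add_noise(signal, rng, noise_level=0.01):
    """Add zero-mean Gaussian noise to every sample."""
    return [s + rng.gauss(0.0, noise_level) for s in signal]


def mask_segment(signal, rng, max_fraction=0.1):
    """Zero out one random contiguous span to mimic an interruption."""
    if not signal:
        return signal
    span = rng.randint(1, max(1, int(len(signal) * max_fraction)))
    start = rng.randint(0, len(signal) - span)
    return signal[:start] + [0.0] * span + signal[start + span:]


# (name, probability, transform). Only the 0.6 for noise comes from the text;
# 0.3 for masking is a placeholder chosen for illustration.
AUGMENTATIONS = [
    ("noise", 0.6, add_noise),
    ("mask", 0.3, mask_segment),
]


def augment(signal, rng, augmentations=AUGMENTATIONS):
    """Apply each transform independently with its own probability."""
    applied = []
    for name, prob, transform in augmentations:
        if rng.random() < prob:
            signal = transform(signal, rng)
            applied.append(name)
    return signal, applied


rng = random.Random(0)
augmented, applied = augment([0.5] * 100, rng)
```

Because each draw is independent, a given sample may receive no manipulation, one, or several, which is what produces a mix of sample types.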

Continual learning

When a neural-network model is updated to reflect new data, it runs the risk of catastrophic forgetting: changing the model weights to handle new data can compromise the model’s ability to handle the types of data it was originally trained on. In our paper, we describe a few of the techniques we use to prevent this from happening when we fine-tune an existing ASR model on synthetic data.

Our baseline model is an ASR model trained on 50,000 hours of data. We update it for a new vocabulary of medication names in four stages. In the first stage, we add 5,000 hours of synthetic data to the original dataset and fine-tune the model on both, but we freeze the encoder weights, so that only the decoder’s weights change.

In stage two, we again fine-tune the model on the combined dataset, but this time, we allow updates to the encoder weights.

In stage three, we fine-tune the model on only the original data, but we add a new term to the loss function that penalizes the model if the weights of its connections change too dramatically. Finally, in stage four, we again fine-tune the model on only the original data, this time allowing all the weights to be updated in an unconstrained way.
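The four stages can be summarized as a small schedule, together with one possible form of the stage-three penalty. Everything here is a sketch under stated assumptions: the `Stage` fields and `penalized_loss` are hypothetical names, the encoder is assumed to stay trainable in stages three and four, and a plain squared distance from the pre-stage weights stands in for whatever penalty term the paper actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    use_synthetic: bool   # train on original + synthetic data, or original only
    train_encoder: bool   # False means encoder weights are frozen
    weight_penalty: bool  # add a term discouraging large weight changes


SCHEDULE = [
    Stage("stage 1", use_synthetic=True, train_encoder=False, weight_penalty=False),
    Stage("stage 2", use_synthetic=True, train_encoder=True, weight_penalty=False),
    # Encoder trainability in stages 3 and 4 is an assumption.
    Stage("stage 3", use_synthetic=False, train_encoder=True, weight_penalty=True),
    Stage("stage 4", use_synthetic=False, train_encoder=True, weight_penalty=False),
]


def penalized_loss(task_loss, weights, anchor_weights, strength):
    """Task loss plus a squared-distance penalty on weight drift.

    anchor_weights are the weights at the start of the stage. The quadratic
    form and the strength hyperparameter are assumptions for illustration.
    """
    drift = sum((w - a) ** 2 for w, a in zip(weights, anchor_weights))
    return task_loss + strength * drift


# No drift leaves the loss unchanged; drift of 1.0 in one weight adds strength * 1.0.
print(penalized_loss(1.0, [1.0, 2.0], [1.0, 2.0], strength=0.5))  # 1.0
print(penalized_loss(1.0, [2.0, 2.0], [1.0, 2.0], strength=0.5))  # 1.5
```

In a real training loop, the flags in each `Stage` would decide which dataset is loaded, which parameters receive gradient updates, and whether the extra term is added to the loss.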

In experiments, we found that, after the second stage of training — when the dataset includes the synthetic data, and all weights are free to change — the error rate on the new vocabulary dropped by more than 86% relative to baseline. The error rate on the existing vocabulary, however, rose slightly, by just under 1% relative to baseline.
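For readers less familiar with relative error-rate figures, the snippet below shows the arithmetic. The word error rates passed in are made-up values chosen only so that the results match the percentages quoted above; they are not measurements from the paper, and `relative_change` is a hypothetical helper.

```python
def relative_change(baseline_wer, new_wer):
    """Change in word error rate as a fraction of the baseline.

    Negative values are improvements; positive values are regressions.
    """
    return (new_wer - baseline_wer) / baseline_wer


# Illustrative numbers only, not results from the paper.
print(round(relative_change(10.0, 1.4), 2))   # -0.86, an 86% relative reduction
print(round(relative_change(5.0, 5.05), 2))   # 0.01, a 1% relative increase
```

A relative figure says nothing about absolute error rates on its own: an 86% reduction from a high baseline can still leave more errors than a 1% increase on a low one.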

In some cases, such as the adaptation of a model to a new application, that may be acceptable. But in an update to an already deployed model, it may not be. The third and fourth fine-tuning stages brought the error rate on the original vocabulary below baseline, while still cutting the error rate on the new vocabulary by 65%. Our experiments thus point toward a training methodology that can be adapted to different use cases.