Geospatial technologies have rapidly become important across the globe. By deepening our understanding of Earth's ever-changing landscape and of our interactions with the environment, they help us navigate complex global challenges. As the volume of geospatial data grows, researchers are exploring ways to bring the full power of deep learning to its analysis.

In artificial intelligence (AI), foundation models have emerged as a transformative technology, achieving state-of-the-art performance in domains such as computer vision and natural-language processing. However, existing image-embedding models tend to fall short when adapted to the geospatial domain, because natural images differ inherently from remote sensing data. At the same time, training geospatial-specific models from scratch is resource intensive, time consuming, and environmentally costly.

In our recent work, “Towards geospatial foundation models via continual pretraining”, published at the 2023 International Conference on Computer Vision (ICCV), we show how to build more powerful geospatial foundation models while keeping resource demands in check. Rather than training a geospatial model from scratch, we explore continual pretraining: further refining an existing foundation model for a specific domain through a secondary pretraining phase. The refined model can then be fine-tuned for various downstream tasks within that domain.

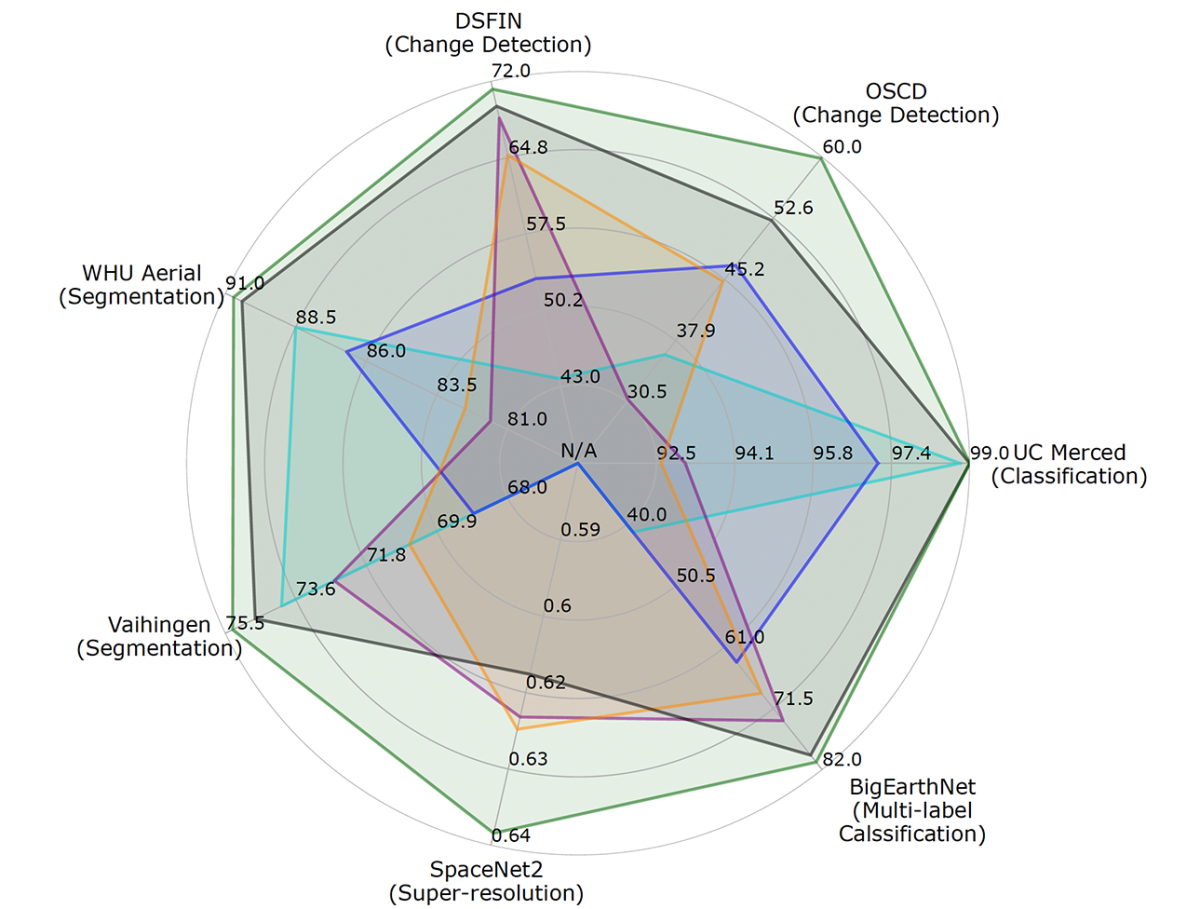

In tests, we compared our approach to six baselines on seven downstream datasets, covering tasks such as change detection, classification, multilabel classification, semantic segmentation, and super-resolution. Across all seven datasets, our approach significantly outperformed the baselines.

Our approach can improve performance by using large-scale ImageNet representations as a foundation on which to build robust geospatial models. Because the computer vision community continually improves natural-image models, it offers a steady supply of better-performing baselines to start from. Our approach lets geospatial models harness these advances with minimal resource consumption, a sustainable benefit for the geospatial community.

GeoPile

Building an effective foundation model begins with data selection. A common choice for pretraining geospatial models is data from the Sentinel-2 satellite. However, simply having a large corpus of such imagery isn't enough.

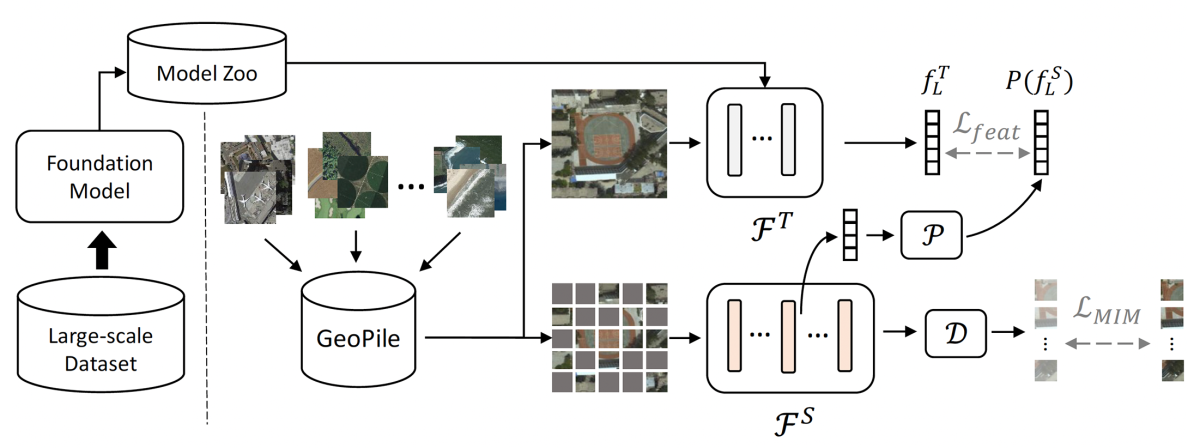

To pretrain our geospatial model, we use the type of self-supervision that has become standard for foundation models, masked image modeling (MIM): we mask out parts of the input images, and the model learns to reconstruct them. With Sentinel-2 data alone, however, the imagery lacks complexity and variability, which can make the reconstruction task too easy for the model to learn rich features.
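To make the MIM objective concrete, here is a minimal NumPy sketch of patch masking with a reconstruction loss computed only on the hidden patches. It is a generic illustration, not our training code: the 32-pixel patch size, the 60% mask ratio, zero-filling of masked patches (real implementations typically substitute a learned mask token), the L1 loss, and the function names are all assumptions.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into a (num_patches, patch*patch*C) array, row-major over the patch grid."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (grid_rows, grid_cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)

def random_patch_mask(num_patches: int, mask_ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask with round(mask_ratio * num_patches) True entries; True means the patch is hidden."""
    num_masked = int(round(mask_ratio * num_patches))
    mask = np.zeros(num_patches, dtype=bool)
    mask[rng.choice(num_patches, size=num_masked, replace=False)] = True
    return mask

def masked_reconstruction_loss(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """Mean L1 error over masked patches only; visible patches are not scored."""
    return float(np.abs(pred[mask] - target[mask]).mean())

rng = np.random.default_rng(0)
image = rng.random((192, 192, 3))        # stand-in for an RGB image tile
patches = patchify(image, patch=32)      # 6 x 6 grid = 36 patches
mask = random_patch_mask(len(patches), mask_ratio=0.6, rng=rng)
model_input = np.where(mask[:, None], 0.0, patches)  # hidden patches zeroed out

# A real model would predict the hidden patches from model_input; here the
# zero-filled input itself serves as a trivial placeholder "prediction".
loss = masked_reconstruction_loss(model_input, patches, mask)
print(f"{mask.sum()} of {len(patches)} patches masked, loss = {loss:.3f}")
```

Scoring only the masked patches is what forces the model to infer missing content from context; if visible patches were scored too, the model could lower the loss by copying its input. This is also why low-variability imagery hurts: when hidden patches are easy to guess from their neighbors, the task teaches little.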

To address this challenge, we combined five open-source datasets, with both labeled and unlabeled images, into a diverse set of geospatial pretraining data that we call GeoPile. To provide textural detail, GeoPile spans a variety of ground sample distances (GSDs), including images with much higher resolution than those captured by Sentinel-2 (which has a GSD of 10 meters). In addition, the labeled datasets cover a wide variety of image classes from general remote sensing scenes, ensuring visual diversity across samples.
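A quick calculation shows why GSD matters for texture. The sketch below compares Sentinel-2's 10-meter GSD with a hypothetical 0.3-meter aerial image; the 0.3-meter value, the 192-pixel tile size, and the 4.5-meter car are illustrative assumptions, not GeoPile statistics.

```python
def tile_footprint_m(tile_pixels: int, gsd_m: float) -> float:
    """Ground distance in meters spanned by one side of a square tile."""
    return tile_pixels * gsd_m

def pixels_across(object_m: float, gsd_m: float) -> float:
    """Number of pixels an object of the given length occupies at this GSD."""
    return object_m / gsd_m

SENTINEL2_GSD_M = 10.0  # from the text
AERIAL_GSD_M = 0.3      # hypothetical high-resolution source
TILE_PX = 192           # hypothetical tile size
CAR_M = 4.5             # hypothetical object length

for name, gsd in [("Sentinel-2", SENTINEL2_GSD_M), ("aerial", AERIAL_GSD_M)]:
    print(f"{name}: tile covers {tile_footprint_m(TILE_PX, gsd):.1f} m per side; "
          f"a car spans {pixels_across(CAR_M, gsd):.2f} px")
```

At 10 meters per pixel, a car occupies less than half a pixel, so its texture is simply absent from the image; at 0.3 meters it spans about 15 pixels, giving the reconstruction task fine detail to recover.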

Continual pretraining for geospatial foundation models

Much previous research on geospatial foundation models (GFMs) has ignored existing natural-image models. We instead reason that leveraging the knowledge encoded in those models should yield strong performance with minimal overhead. To this end, we propose an unsupervised, multi-objective training paradigm for effective and efficient pretraining of geospatial models.

Our GFM continual-pretraining paradigm is a teacher-student approach with two parallel model branches. The teacher (FT) is initialized with ImageNet-22k weights and guides the student during training. The student (FS) starts from scratch and evolves into the final geospatial foundation model.

This paradigm optimizes two objectives at once. Distillation from the teacher's intermediate features lets the student benefit from the teacher's diverse knowledge, learning more in less time. At the same time, the student's own MIM pretraining objective gives it the freedom to adapt to in-domain data, acquiring new features that improve performance.
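The two-fold objective can be sketched as a sum of two losses. The NumPy example below illustrates the idea under stated assumptions and is not our implementation: the linear projection mapping student features to the teacher's width, the mean-squared-error distillation loss, the L1 masked-reconstruction loss, the `distill_weight` parameter, and all function names are hypothetical. In a real training framework, the teacher would typically be kept fixed so that gradients update only the student; that is also an assumption here.

```python
import numpy as np

def distillation_loss(student_feat: np.ndarray, teacher_feat: np.ndarray,
                      proj: np.ndarray) -> float:
    """MSE between projected student features and teacher features (teacher treated as a fixed target)."""
    return float(np.mean((student_feat @ proj - teacher_feat) ** 2))

def mim_loss(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """L1 reconstruction error averaged over masked patches only."""
    return float(np.abs(pred[mask] - target[mask]).mean())

def continual_pretraining_loss(student_feat, teacher_feat, proj,
                               pred, target, mask, distill_weight=1.0) -> float:
    """Hypothetical combined objective: in-domain MIM plus feature distillation from the teacher."""
    return mim_loss(pred, target, mask) + distill_weight * distillation_loss(
        student_feat, teacher_feat, proj)

rng = np.random.default_rng(0)
tokens, d_student, d_teacher, patch_dim = 36, 16, 32, 48
student_feat = rng.normal(size=(tokens, d_student))   # stand-in for student intermediate features
teacher_feat = rng.normal(size=(tokens, d_teacher))   # stand-in for teacher intermediate features
proj = rng.normal(size=(d_student, d_teacher)) * 0.1  # hypothetical learnable projection
target = rng.random((tokens, patch_dim))              # ground-truth pixel patches
pred = rng.random((tokens, patch_dim))                # student's reconstruction of them
mask = np.zeros(tokens, dtype=bool)
mask[rng.choice(tokens, size=22, replace=False)] = True  # which patches were hidden

total = continual_pretraining_loss(student_feat, teacher_feat, proj, pred, target, mask)
print(f"total loss: {total:.4f}")
```

Setting `distill_weight` to 0 reduces this to plain MIM pretraining on geospatial data, while dropping the MIM term would make the student a pure imitation of the teacher. Keeping both terms is what lets the student inherit ImageNet knowledge and still adapt to remote sensing imagery.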